Updated: 2025-05-07

Amblyopia

Basic information

Synonyms: AMBLYOPIA, AMBLYOPIA STRABISMIC, Amblyopia, and 48 more

Description: A nonspecific term referring to impaired vision. Major subcategories include stimulus deprivation-induced amblyopia and toxic amblyopia. Stimulus deprivation-induced amblyopia is a developmental disorder of the visual cortex. A discrepancy between visual information received by the visual cortex from each eye results in abnormal cortical development. STRABISMUS and REFRACTIVE ERRORS may cause this condition. Toxic amblyopia is a disorder of the OPTIC NERVE which is associated with ALCOHOLISM, tobacco SMOKING, and other toxins and as an adverse effect of the use of some medications.

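Related

5 drugs related to amblyopia

Target:

Mechanism of action: mAChRs antagonists

Inactive indications:

Highest development phase: Approved

First approval country/region: Japan

First approval date: 1968-07-20

Target: -

Mechanism of action: -

Inactive indications: -

Highest development phase: Approved

First approval country/region: Japan

First approval date: 1966-04-09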

300 clinical trials related to amblyopia

ChiCTR2500100071

Randomized, controlled, single-blind, single-center clinical trial of efficacy and safety of amblyopia treatment software based on eye dominance shift in the treatment of children with anisometric amblyopia

Start date: 2025-04-21

Sponsor/collaborator: -

NCT06928727

Ophtalmological Characteristics of Patients With Craniosynostosis Followed at the Amiens University Hospital

Craniosynostoses are cranial deformations due to the premature closure of one or more cranial sutures. These deformations affect approximately one in 2,500 births. In the vast majority of cases, craniosynostoses are isolated (non-syndromic) and their origin is not always known. On the other hand, 20% of these deformations are said to be syndromic.

Craniosynostosis has morphological (associated dysmorphism) and functional (growth conflict between the skull and the brain) repercussions. Ophthalmological disorders are frequent: refractive disorders, oculomotor disorders, optic nerve damage, sensory damage.

This study aims to describe the ophthalmological clinical characteristics associated with craniosynostosis in patients followed at the Amiens University Hospital. This is a retrospective study based on the analysis of patient records followed in our center.

Start date: 2025-04-04

NCT06913400

Progression of Stereopsis Recovery in Strabismic Patients: A One-Month and Three-Month Post-Surgery Evaluation

This prospective longitudinal observational study aims to assess stereopsis recovery in strabismic patients at one month and three months post-corrective surgery. The study will be conducted at Mayo Hospital, Lahore, and Sajjad Eye Center, Burewala, with a sample size of 34 using a purposive sampling technique. Eligible participants include patients aged ≥5 years undergoing strabismus surgery for esotropia, exotropia, or vertical deviations, with best-corrected visual acuity (BCVA) of 6/12 in both eyes. Patients with previous strabismus surgery, amblyopia, neurological conditions, or sensory strabismus will be excluded.

Start date: 2025-03-20

Sponsor/collaborator: -

100 clinical results related to amblyopia

100 translational medicine records related to amblyopia

0 patents (medical) related to amblyopia

9,014 literature records (medical) related to amblyopia

2025-12-01 · Child's Nervous System

Orthoptic findings in trigonocephaly patients after completed visual development

Article

Authors: den Ottelander, Bianca K ; Yang, Sumin ; Telleman, Marieke A J ; Gaillard, Linda ; Mathijssen, Irene M J ; Loudon, Sjoukje E

2025-07-01 · American Journal of Ophthalmology

Epidemiologic Study of Pediatric Uveitis and its Ophthalmic Complications Using the Korean National Health Insurance Claim Database

Article

Authors: Bromeo, Albert John ; Hong, Eun Hee ; Do, Diana ; Akhavanrezayat, Amir ; Tran, Anh Ngoc Tram ; Khatri, Anadi ; Thng, Zheng Xian ; Karaca, Irmak ; Park, Sung Who ; Shin, Yong Un ; Kang, Min Ho ; Nguyen, Quan Dong ; Or, Chi Mong Christopher ; Kim, Jiyeong

2025-07-01 · Ophthalmology Science

Vision Loss and Blindness in the United States: An Age-Adjusted Comparison by Sex and Associated Disease Category

Article

Authors: Eppich, Kaleb ; Blakley, Macey S ; Bugg, Victoria A ; Hartnett, M Elizabeth ; Lum, Flora ; Greene, Tom

58 news items (medical) related to amblyopia

2025-04-25 · 生物谷 (Bioon)

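Human visual function depends on efficient information processing by parallel visual pathways. The magnocellular (M) pathway processes motion and coarse visual information, while the parvocellular (P) pathway processes color and fine spatial detail. On April 7, 2025, the group of Zhang Peng (张朋) at the Institute of Biophysics, Chinese Academy of Sciences, and the team of Wen Wen (文雯) at the Eye & ENT Hospital of Fudan University published a paper in Advanced Science titled "Improving Adult Vision Through Pathway-Specific Training in Augmented Reality". The study developed a novel augmented reality (AR) training method that delivers pathway-selective intervention in everyday scenes and produces significant, lasting improvements in the visual abilities of healthy adults and patients with amblyopia.

Figure: AR visual training significantly improves adults' visual acuity, stereopsis, and parvocellular pathway sensitivity.

The AR system captures video with a high-definition camera, processes it in real time on a GPU, and presents the modified natural-scene video on an OLED head-mounted display. To specifically strengthen P-pathway function, the system scrambles the phase of low-spatial-frequency information into rapidly flickering noise while preserving high-spatial-frequency detail. It also lowers the signal-to-noise ratio of high-frequency information in the dominant eye, which strengthens the non-dominant eye's dominance in the P pathway.

The study found that short-term AR training significantly improved visual acuity and high-spatial-frequency sensitivity in healthy participants and restored binocular balance, with the gains maintained over the long term. After one week of home training with lightweight AR glasses, patients with amblyopia showed significant gains in amblyopic-eye visual acuity, eye dominance, and stereoscopic function, together with very high training adherence.

This AR training technique offers a new approach to rehabilitating visual dysfunctions such as amblyopia, glaucoma, and developmental dyslexia, and also holds promise for enhancing visual function in healthy people.

Gao Yige (高弋戈), a doctoral student at the Institute of Biophysics, and Zhou Yulian (周钰莲), an ophthalmologist at the Eye & ENT Hospital of Fudan University, are co-first authors. Zhang Peng, researcher at the Institute of Biophysics, and Wen Wen, director at the Eye & ENT Hospital of Fudan University, are co-corresponding authors. He Qing (何勍), assistant researcher at the Institute of Biophysics, is a co-author. The study was supported by the STI 2030 Major Project on Brain Science and Brain-Inspired Research, the National Natural Science Foundation of China, the Shanghai Science and Technology Innovation Action Plan special fund, and 苏州集视医疗科技有限公司 (a Suzhou-based medical technology company).

Article link: https://doi-org.libproxy1.nus.edu.sg/10.1002/advs.202415877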
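The low-spatial-frequency phase scrambling described in the AR training article above can be illustrated with a toy example. The sketch below is not the authors' implementation, which the article does not detail and which runs on 2D video frames on a GPU. It is a minimal 1D illustration in pure Python, and the function names `dft`, `inverse_dft`, and `scramble_low_frequency_phase`, as well as the `cutoff` parameter, are hypothetical. It randomizes the phase of the lowest frequency bins while leaving their magnitudes and every higher-frequency bin unchanged.

```python
import cmath
import math
import random


def dft(signal):
    """Naive discrete Fourier transform (fine for short toy signals)."""
    n = len(signal)
    return [
        sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def inverse_dft(spectrum):
    """Naive inverse discrete Fourier transform."""
    n = len(spectrum)
    return [
        sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
        for t in range(n)
    ]


def scramble_low_frequency_phase(signal, cutoff, rng):
    """Randomize the phase of bins 1..cutoff; keep magnitudes and higher bins.

    Hypothetical helper for illustration only. `cutoff` is an assumed
    parameter marking the highest bin treated as "low frequency".
    """
    n = len(signal)
    if not 0 < cutoff < n / 2:
        raise ValueError("cutoff must be between 1 and n/2 (exclusive)")
    spectrum = dft(signal)
    for k in range(1, cutoff + 1):
        magnitude = abs(spectrum[k])
        phase = rng.uniform(-math.pi, math.pi)
        spectrum[k] = cmath.rect(magnitude, phase)
        # The mirrored bin must be the complex conjugate so the output stays real.
        spectrum[n - k] = spectrum[k].conjugate()
    return [value.real for value in inverse_dft(spectrum)]


# Toy signal: one low-frequency component (bin 2) plus one high-frequency component (bin 10).
n = 32
signal = [
    math.sin(2 * math.pi * 2 * t / n) + 0.5 * math.sin(2 * math.pi * 10 * t / n)
    for t in range(n)
]
scrambled = scramble_low_frequency_phase(signal, cutoff=3, rng=random.Random(0))
```

Drawing a fresh random phase on every frame would make the low-frequency content flicker as noise while the fine detail stays stable, which is the qualitative effect the article describes. A 2D image version would apply the same idea to a radial frequency mask.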

2025-04-17

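Source: WeChat public account 大屯路15号. Editor: Hou Wenru (侯文茹).

Amblyopia is a common disorder of visual development. It typically presents as reduced vision in one eye that cannot reach normal levels even after refractive error is corrected. Previous studies have generally held that visual signals from the amblyopic eye are attenuated during transmission, but the specific changes in the cortical microcircuits that handle feedforward, lateral, and feedback processing at the mesoscopic scale, and the mechanisms of binocular interaction, have remained unclear.

Recently, Zhang Peng's (张朋) team at the Institute of Biophysics, Chinese Academy of Sciences, working with Wen Wen's (文雯) team at the Eye & ENT Hospital of Fudan University, used ultra-high-resolution 7-tesla (7T) functional magnetic resonance imaging (fMRI) and frequency-tagged electroencephalography (EEG) to reveal abnormal neural activity at the level of visual cortical microcircuits in human patients with amblyopia.

Figure: Amblyopic-eye signals are already impaired on entering the cortex; interocular suppression is imbalanced, integration is weakened, and signal delay is increased.

The study found that in the primary visual cortex (V1) of patients with amblyopia, visual signals from the amblyopic eye were already markedly weakened in the input layer (the cortical sublayer that mainly receives thalamic input), and the weakened signal was passed forward to downstream visual areas. This suggests that the abnormality in amblyopia originates in an input deficit at an earlier stage of visual processing, and it corroborates the team's earlier findings of functional changes in subcortical visual nuclei in these patients. An imbalance in binocular lateral inhibition further caused signal loss in the superficial layers of V1: the dominant (fellow) eye strongly suppressed signal transmission from the amblyopic eye, while suppression of the fellow eye by the amblyopic eye was markedly reduced. Frequency-tagged EEG data further showed that the imbalance in interocular suppression was accompanied by a marked decline in binocular integration. In addition, amblyopic-eye signals were not only reduced in amplitude but also transmitted noticeably more slowly, lowering overall visual processing efficiency.

The study is the first to map abnormal changes in the visual cortical microcircuits of patients with amblyopia at submillimeter and millisecond precision, revealing how abnormal visual experience during the critical period shapes human cortical microcircuit function. The findings are important for understanding the neural mechanisms of amblyopia and provide a new theoretical basis for treatment. For example, training that strengthens input-layer neuronal function, and visual training that improves binocular integration and corrects imbalanced suppression, may become new directions for amblyopia therapy.

The paper was published in Imaging Neuroscience on April 11, 2025. Dr. Wang Yue (王跃), associate researcher Qian Chencan (钱晨灿), and Dr. Gao Yige (高弋戈) of the Institute of Biophysics, and physician Zhou Yulian (周钰莲) of the Eye & ENT Hospital of Fudan University, are co-first authors. Researcher Zhang Peng and associate researcher Qian Chencan of the Institute of Biophysics and chief physician Wen Wen of the Eye & ENT Hospital of Fudan University are co-corresponding authors. Professor Zhang Xiaotong (张孝通) of the College of Electrical Engineering, Zhejiang University, is a co-author. The study was supported by the STI 2030 Major Project on Brain Science and Brain-Inspired Research, the National Natural Science Foundation of China, the Youth Innovation Promotion Association of the Chinese Academy of Sciences, the State Key Laboratory of Ophthalmology, Optometry and Vision Science at Wenzhou Medical University, and the Shanghai Science and Technology Innovation Action Plan special fund.

Article link: https://doi-org.libproxy1.nus.edu.sg/10.1162/imag_a_00561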

2024-12-26

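Introduction

Digital health companies have faced significant challenges over the past few years. Funding flowing to start-ups has declined, while companies with approved products are still working to grow revenue and expand their patient base. Even so, innovation momentum remains strong, and new digital health products for diagnosing, treating, and remotely monitoring patients are entering a more mature global market. The growing number of approval and reimbursement pathways also creates more opportunities for future success. Some vendors are combining individual product types into "solutions" with patient-facing and physician-facing interfaces, which increases the likelihood of adoption by health systems. In research, biopharmaceutical companies have been using wearable sensors and digital measures in drug trials to better understand drug benefits and reduce risk.

The IQVIA Institute recently released the report Digital Health Trends 2024: Implications For Research and Patient Care. It examines trends across the segments of the digital health market, covers maturing therapeutic product areas such as digital diagnostics, digital therapeutics (DTx), and digital care (DC), and looks at consumer apps and non-prescription digital therapeutics used to relieve health symptoms.

This article shares selected excerpts from the report.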

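The digital health landscape

1.1 Investment in digital health

Digital health can be defined in many ways. The US FDA broadly describes digital health technologies (DHTs) as "systems that use computing platforms, connectivity, software, and/or sensors for healthcare and related uses". In this report, the term mainly refers to the use of mobile devices that improve health (such as multipurpose smartphones, tablets, virtual reality devices, consumer wearables, and home virtual assistants), together with the associated apps, biometric sensors, connected devices, analytical algorithms, and software platforms.

Over the past two and a half years, deal counts and total investment in digital health have continued to fall and are now close to 2019 levels (Figure 1). At the peak, when the pandemic drove rapid digitalization of healthcare, annual venture investment reached $59.3 billion in 2021. By 2023 it had fallen by more than half, to just $22.8 billion, with fewer deals and a lower average deal size. Quarterly funding totals and deal counts remained low in the first quarter of 2024, but the second quarter showed signs of recovery, with both total funding and average deal size rising.

Figure 1: Quarterly investment in digital health (US$ billions)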
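The two annual totals quoted above ($59.3 billion in 2021 and $22.8 billion in 2023) are enough to check the size of the decline. The short sketch below uses only those two figures from the text. The variable names are illustrative, and no other data from the report is assumed.

```python
# Annual venture funding totals quoted in the text, in billions of US dollars.
funding_2021 = 59.3
funding_2023 = 22.8

# Share of the 2021 total that remained in 2023, and the corresponding decline.
remaining_share = funding_2023 / funding_2021
decline = 1 - remaining_share

print(f"2023 funding as a share of 2021: {remaining_share:.1%}")
print(f"Decline from 2021 to 2023: {decline:.1%}")
```

On these figures, 2023 funding was a little under two-fifths of the 2021 peak, so the drop is somewhat steeper than a halving.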

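Beyond venture capital, life sciences companies also continue to increase their investment in digital health, which will have far-reaching effects on patient care and scientific research. Their main focus has been solutions that give physicians diagnostic and treatment decision support, but investment also extends to: using sensors to capture digital endpoints in drug development trials; developing digital therapeutics to improve patient health; advancing digital health assessment tools that support and accelerate diagnosis and treatment; and launching remote patient monitoring tools that help clinicians and researchers improve patient outcomes.

Notably, after a new technology passes through its hype and cooling-off periods and the lessons they bring, the market usually delivers products that better fit stakeholder needs and are more commercially viable. In digital health, developers now understand market pain points more deeply and have launched more attractive next-generation products. Physicians, drawing on past experience, also have a clearer view of how new products fit into diagnosis, treatment, and patient care.

Digital health product developers are already shifting toward new business models with clearer commercial prospects, while regulatory and reimbursement barriers have eased. This lets developers generate revenue earlier in the product life cycle and expand their market as they continue to improve the product. Developers are also integrating other companies' resources horizontally and vertically to build product suites that fit existing care pathways and address user pain points.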

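1.2 Digital tools in patient care

Digital solutions are increasingly numerous. Across the patient journey, from preventive self-care to risk assessment, triage, diagnosis, treatment, and remote patient monitoring, digital solutions can now support patients and physicians, speeding up the journey and improving outcomes (Figure 2).

Figure 2: Diagnosis, treatment, and monitoring in digital health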

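Consumer app trends

2.1 Growth in apps

Mobile app stores are an important distribution channel for health app developers. Apps released on these platforms span many segments of digital health: individuals can download wellness and fitness apps, disease self-management apps, digital therapeutic apps, and even risk-screening apps, while physicians use app stores to obtain clinical decision support tools or approved digital diagnostic apps.

Although the number of apps has stayed at a similar level, a study of a set of well-known health apps in the IQVIA AppScript catalog shows a different picture. Analyzing the apps in this database by use category shows the current state of the high-quality apps that may influence patient self-care and treatment (Figure 3).

Figure 3: Digital health apps by category and specific disease

Across the patient journey, digital health apps fall into two broad groups. One focuses on "wellness management", helping users track and adjust fitness behavior, lifestyle, stress, and diet. The other focuses on "health condition management": treating disease, providing information that helps patients self-manage a disease or condition, enabling access to care, or supporting therapy through features such as medication reminders. Since 2015 the app mix has shifted steadily toward health condition management. As of June 2024, more than half of apps (51%) focus on this area, up from 28% in 2015. After the pandemic, exercise and fitness apps drew renewed attention and briefly rose to 27% of apps in mid-2023, but growth has slowed again.

Since 2021 the number of disease-specific apps has nearly tripled to 8,217, and they now account for 26% of all apps. Mental health and behavioral disorders remain the largest segment, making up a quarter of all disease-specific apps; digital therapeutics have been shown to influence patient behavior and treat symptoms in these conditions. Diabetes and cardiovascular disease are supported by disease self-management apps, medication management apps, and remote patient monitoring sensors (these three areas currently account for about half of disease-specific apps).

Among other focus areas, apps addressing eye problems (mainly vision-related, such as myopia, amblyopia, and glaucoma) and hearing problems (such as tinnitus) have increased markedly. Dermatology apps are also increasing; about 22% of them use artificial intelligence and 18% relate to psoriasis. Respiratory apps, by contrast, have declined in number since the pandemic.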

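2.2 Investment by life sciences companies

Some consumer apps in app stores were created by life sciences companies or through their investment. They include a variety of mobile apps intended to help patients cope better with their disease and to improve treatment, adherence, and outcomes.

Between 2011 and 2022, biopharmaceutical, medical device, and consumer health companies took part in creating 775 apps. The number of digital health apps launched by life sciences companies peaked in 2020 and has declined since (Figure 4).

Figure 4: Number of apps invested in and created by life sciences companies, by year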

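2.3 Non-prescription digital therapeutics

Some consumer apps that fall under FDA enforcement discretion have been shown to improve patient health and outcomes even though they do not directly deliver a formal therapeutic intervention, and they can be sold commercially through app stores or web platforms. These "care support" apps can "help patients better manage care for a specific disease or medical condition" but cannot be used to treat disease. They typically provide educational content, serve as digital courses, or use evidence-based methods to help patients prevent and manage common disease-related symptoms. They may include reminders and motivational coaching, and may provide prompts and educational material to change behavior (for example, supporting wellness behaviors such as exercise and abstinence), improve patient self-management, and help patients develop coping skills through training.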

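Digital therapeutics and their use in care

Beyond health apps, some digital products use software to help treat, diagnose, and monitor patients (Figure 5). To treat a specific disease or condition, a healthcare provider may recommend a digital therapeutic, meaning health software that delivers a medical intervention, or refer the patient to a digital care (DC) provider that uses digital tools to improve its treatment, prevention, or disease management programs.

Figure 5: Digital therapeutics, digital care, and other digital tools commercially available or in development in 2024

3.1 Approval of digital therapeutics

Most digital therapeutics are regulated as medical devices. To obtain market authorization and reimbursement, a digital therapeutic must demonstrate positive efficacy in a specific clinical indication, supported by evidence from clinical trials and other studies. Many digital therapeutics are also provided by prescription; these are usually called prescription digital therapeutics (PDTs). In some countries, low-risk therapeutic apps and online courses may be exempt from regulation, and those that have generated evidence of improved patient outcomes are called non-prescription digital therapeutics (NDTs), although most such apps and online courses currently have some access "restrictions".

Prescription digital therapeutics and digital care programs both concentrate on treating mental health conditions, some of which are complications of other diseases such as diabetes and cancer (Figure 6). They are also used in a range of neurological disorders, to help patients overcome chronic pain or functional impairment, and to support behavior modification in conditions such as diabetes and musculoskeletal problems.

Figure 6: Disease areas addressed by digital therapeutics and digital care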

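Sensor-based digital measures

Describing human behavior and experience subjectively is possible, but assessing and measuring them objectively is a challenge. Tools such as disease rating scales, patient questionnaires, and performance tests were created to measure changes in individual behavior, health status, clinical outcomes, and experience. However, some of these traditional methods still rely on subjective observation of behavior by clinicians or patients, so different ways of testing can produce different results and values.

Today, wearable and mobile devices carry a variety of sensors, which makes it easier to create digital biomarkers. Consumer devices (such as smartwatches and fitness trackers) and clinical-grade devices (such as activity monitors) can collect physiological and behavioral data continuously or repeatedly. As these devices gain new types of sensors, a wide range of signals can now be tracked passively. These signals are then interpreted with algorithms, mathematical formulas, and other formal methods (such as electrocardiography) to measure physiological processes and behavior (Figure 7). As a result, digital biomarkers can now reveal subtle human movements, behaviors, performance abilities, and patterns, and bring value to many medical specialties.

Figure 7: Sensors, signals, and methods used to create digital biomarkers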

Statement

Final interpretation rights and copyright for the original content belong to IQVIA China (IQVIA艾昆纬中国). To reprint the article, email iqviagcmarketing@iqvia.com.

分析

对领域进行一次全面的分析。

登录

或

Eureka LS:

全新生物医药AI Agent 覆盖科研全链路,让突破性发现快人一步

立即开始免费试用!

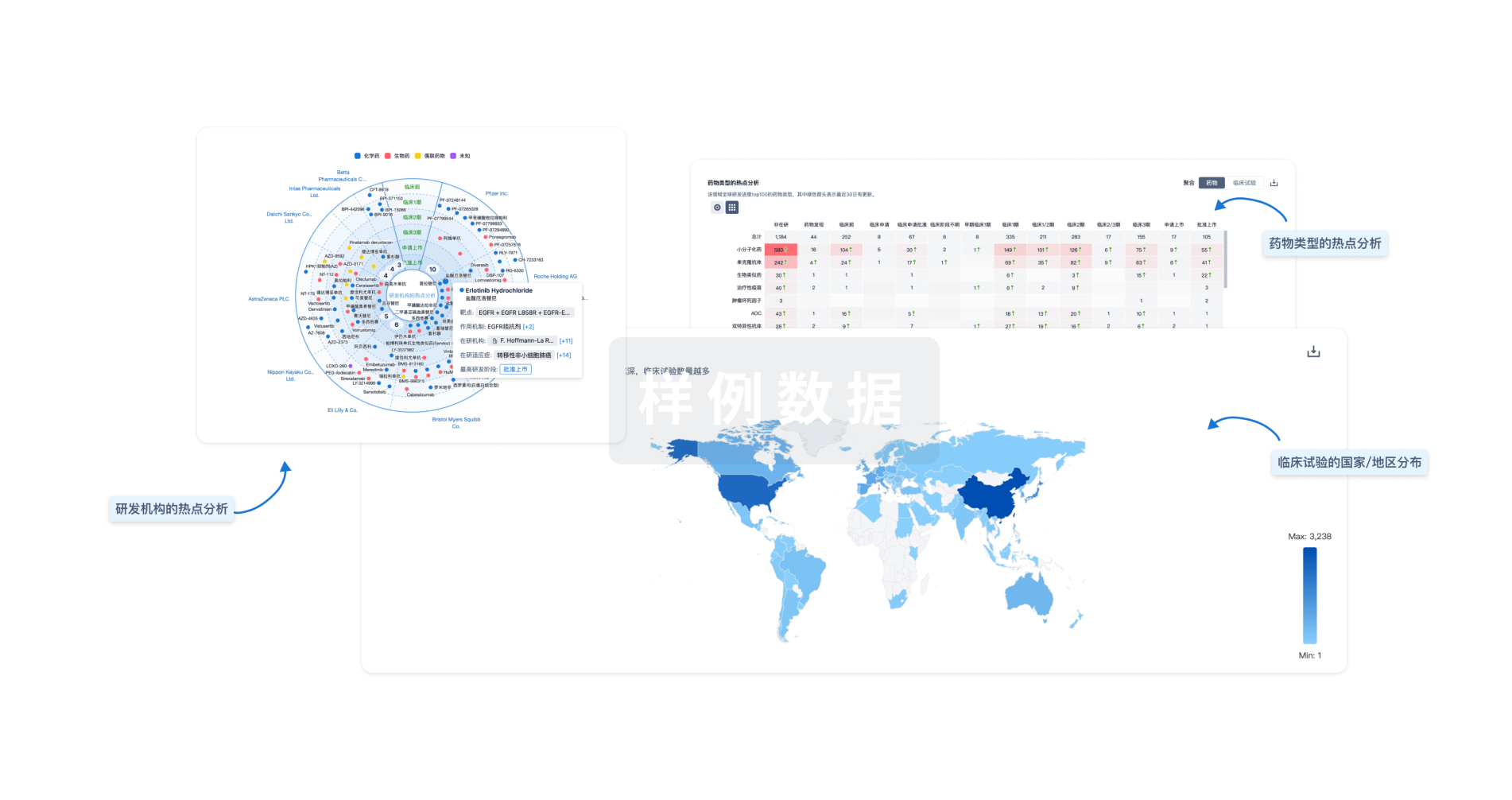

智慧芽新药情报库是智慧芽专为生命科学人士构建的基于AI的创新药情报平台,助您全方位提升您的研发与决策效率。

立即开始数据试用!

智慧芽新药库数据也通过智慧芽数据服务平台,以API或者数据包形式对外开放,助您更加充分利用智慧芽新药情报信息。

生物序列数据库

生物药研发创新

免费使用

化学结构数据库

小分子化药研发创新

免费使用