
Updated: 2025-09-11

Stanford University


Overview

Tags

Oncology

Other diseases

Hematologic and lymphatic diseases

Small-molecule drugs

Proteolysis-targeting chimeras (PROTAC)

Autologous CAR-T

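Disease area scores

See at a glance which disease areas the organization focuses on

No data available

Technology platforms

Technologies most frequently used in the organization's drugs

No data available

Targets

Targets most frequently developed by the organization

No data available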

| Top five drug types | Count |
|---|---|
| Small-molecule drugs | 68 |
| Proteolysis-targeting chimeras (PROTAC) | 33 |
| Diagnostic radiopharmaceuticals | 13 |
| Chemical drugs | 11 |
| T-cell therapies | 5 |

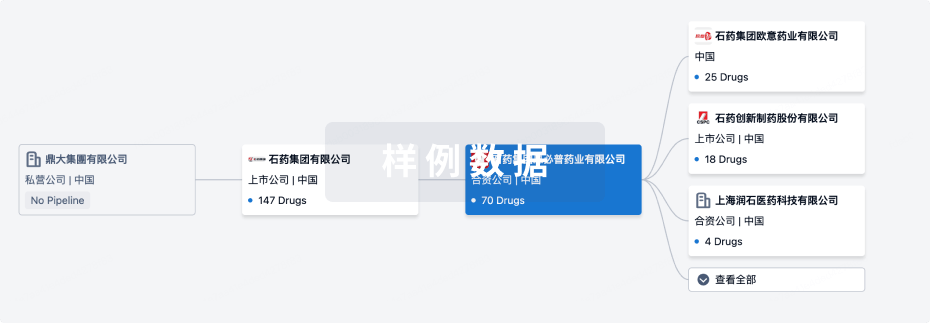

Associations

169 drugs associated with Stanford University

| Mechanism of action | Highest R&D phase | First approved in | First approval date |
|---|---|---|---|
| CTLA4 inhibitor | Approved | United States | 2011-03-25 |
| Opioid receptor antagonist | Approved | United States | 2006-04-13 |
| Hyaluronic acid modulator | Approved | - | - |

2,837 clinical trials associated with Stanford University

NCT05945147

Feasibility Study Comparing a Ketamine and Midazolam Infusion to a Midazolam-Only Infusion for Complex Regional Pain Syndrome

This study will assess the feasibility of administering ketamine plus midazolam or midazolam alone, when infused over 5 days in an outpatient setting, to adults with complex regional pain syndrome (CRPS).

Start date: 2099-01-01

NCT04027569

PROMs To Improve Care: A Pragmatic RCT on Standardized and Patient Specific PROMs on Outcomes After Hand Surgery

To examine the impact of using 2 validated PROMs during the care of an orthopaedic condition on shared decision making, patient centered care, and patient outcomes.

Start date: 2040-11-01

NCT04291170

Comparison of Upper Extremity and Lower Extremity Function and Quick DAS

Patient-reported outcome measures (PROMs) are a method of assessing outcomes from the patient's perspective. The QuickDASH (QDASH) is a commonly used PROM. It was validated against grip strength and ability to work, but not against its ability to measure what it sets out to measure (i.e., a patient's ability to use a hammer, carry a shopping bag, wash a wall, etc.). The purpose of this study is to correlate self-reported QDASH scores with patients' ability to perform the functions listed on the QDASH, and to compare them with a control group who complete the tasks on a lower-extremity PROM, the KOOS JR.

Start date: 2040-09-01

100 clinical results associated with Stanford University

0 patents (pharmaceutical) associated with Stanford University

185,422 publications (pharmaceutical) associated with Stanford University

2026-01-01·APPETITE

Meaningfully reducing consumption of meat and animal products is an unsolved problem: A meta-analysis

Article

Authors: Green, Seth Ariel; Mathur, Maya B; Smith, Benny

Which interventions produce the largest and most enduring reductions in consumption of meat and animal products (MAP)? We address this question with a theoretical review and meta-analysis of randomized controlled trials that measured MAP consumption at least one day after intervention. We meta-analyze 35 papers comprising 41 studies, 112 interventions, and approximately 87,000 subjects. We find that these papers employ four major strategies to change behavior: choice architecture, persuasion, psychology (manipulating the interpersonal, cognitive, or affective factors associated with eating MAP), and a combination of persuasion and psychology. The pooled effect of all 112 interventions on MAP consumption is quite small (standardized mean difference (SMD) = 0.07; 95% CI: [0.02, 0.12]), indicating an unsolved problem. Interventions aiming to reduce only consumption of red and processed meat were more effective (SMD = 0.25; 95% CI: [0.11, 0.38]), but it remains unclear whether such interventions also decrease consumption of other forms of MAP. We conclude that while existing approaches do not provide a proven remedy to MAP consumption, designs and measurement strategies have generally been improving over time, and many promising interventions await rigorous evaluation.

2026-01-01·JOURNAL OF AFFECTIVE DISORDERS

The Emotion Regulation Questionnaire-Short Form (ERQ-S): Psychometric properties in a clinical sample

Article

Authors: Preece, David A; Gross, James J; Heekerens, Johannes B

BACKGROUND:

Emotion regulation is essential for healthy affective functioning. The Emotion Regulation Questionnaire (Gross and John, 2003) has been the most widely used measure of emotion regulation, and recently a 6-item short form was introduced for time-limited settings, the Emotion Regulation Questionnaire-Short Form (ERQ-S; Preece et al., 2023). It is designed to measure habitual use of cognitive reappraisal and expressive suppression. We evaluated the psychometric performance of the ERQ-S in a clinical sample.

METHODS:

531 individuals with various mental health diagnoses completed the ERQ-S and other psychometric measures of clinically-relevant constructs.

RESULTS:

The ERQ-S demonstrated a theoretically congruent two-factor structure, good internal consistency, and expected patterns of associations with other constructs, supporting its validity.

CONCLUSIONS:

Our data suggest the ERQ-S exhibits strong psychometric properties in clinical populations. Its brevity makes it a practical tool for emotion regulation assessments in time-limited settings.

2026-01-01·Neural Regeneration Research

Enhanced neurogenesis after ischemic stroke: The interplay between endogenous and exogenous stem cells

Article

Authors: Wang, Yuhe; Wu, Jun; Geng, Ruxu; Wang, Renzhi; Bao, Xinjie

Ischemic stroke is a significant global health crisis, frequently resulting in disability or death, with limited therapeutic interventions available. Although various intrinsic reparative processes are initiated within the ischemic brain, these mechanisms are often insufficient to restore neuronal functionality. This has led to intensive investigation into the use of exogenous stem cells as a potential therapeutic option. This comprehensive review outlines the ontogeny and mechanisms of activation of endogenous neural stem cells within the adult brain following ischemic events, with focus on the impact of stem cell-based therapies on neural stem cells. Exogenous stem cells have been shown to enhance the proliferation of endogenous neural stem cells via direct cell-to-cell contact and through the secretion of growth factors and exosomes. Additionally, implanted stem cells may recruit host stem cells from their niches to the infarct area by establishing so-called “biobridges.” Furthermore, xenogeneic and allogeneic stem cells can modify the microenvironment of the infarcted brain tissue through immunomodulatory and angiogenic effects, thereby supporting endogenous neuroregeneration. Given the convergence of regulatory pathways between exogenous and endogenous stem cells and the necessity for a supportive microenvironment, we discuss three strategies to simultaneously enhance the therapeutic efficacy of both cell types. These approaches include: (1) co-administration of various growth factors and pharmacological agents alongside stem cell transplantation to reduce stem cell apoptosis; (2) synergistic administration of stem cells and their exosomes to amplify paracrine effects; and (3) integration of stem cells within hydrogels, which provide a protective scaffold for the implanted cells while facilitating the regeneration of neural tissue and the reconstitution of neural circuits. 
This comprehensive review highlights the interactions and shared regulatory mechanisms between endogenous neural stem cells and exogenously implanted stem cells and may offer new insights for improving the efficacy of stem cell-based therapies in the treatment of ischemic stroke.

3,497 news items (pharmaceutical) associated with Stanford University

2025-09-10

Usher Syndrome Society opens Translational Research Grants supporting preclinical studies and mechanism-based therapeutic development for Usher syndrome.

NEEDHAM, MA, UNITED STATES, September 10, 2025 /EINPresswire.com/ -- The Usher Syndrome Society (USH Society), a nonprofit organization that raises awareness and research funding to find therapies for Usher syndrome, announces a request for applications for Usher syndrome research grants. The “Usher Syndrome Society Translational Research Grants” are intended to support translational research on Usher syndrome in Preclinical Research and/or Mechanism-based Therapeutic Development. The USH Society has committed to funding $100,000 per year for up to two years for research projects that involve well-documented research collaborations across sensory modalities and scientific disciplines. Submissions will be evaluated by the USH Society Scientific Advisory Board (SAB), which is composed of world-renowned hearing and vision scientists. Applications must be submitted electronically as a single PDF file to nancy@ushersyndromesociety.org by 5:00 p.m. EDT on October 24, 2025. For more information, visit:

https://www.ushersyndromesociety.org/2026-translational-research-grant-request-for-applications/

Usher syndrome is a progressive genetic condition affecting the retina and inner ear, leading to combined vision loss and hearing loss in approximately 400,000 people worldwide. Usher syndrome is the leading genetic cause of combined deafness and blindness.

The USH Society is committed to supporting Usher syndrome research at labs with promising work and specific funding needs that accelerate any type of Usher syndrome research towards treatments and a cure.

About the Usher Syndrome Society:

The USH Society is a nonprofit founded to address the urgent need to preserve the sight and hearing of those living with Usher syndrome. Recognizing that the fastest ways to accelerate research are through public education and dedicated funding, the Usher Syndrome Society leverages photojournalism, film, storytelling, and educational events to raise awareness, rally support, and advance treatments. The USH Society is determined to change the future of Usher syndrome.

About the Scientific Advisory Board:

The USH Society SAB is chaired by Jeffrey R. Holt, PhD, Professor of Otolaryngology and Neurology at Boston Children’s Hospital and Harvard Medical School. Other members: Teresa Nicolson, PhD, Professor of Otolaryngology, Head & Neck Surgery at Stanford University; Bence Gyorgy, MD, PhD, Head of Clinical Translation at the Institute of Molecular and Clinical Ophthalmology in Basel, Switzerland, among others.

Nancy Corderman

Usher Syndrome Society

nancy@ushersyndromesociety.org


Legal Disclaimer:

EIN Presswire provides this news content "as is" without warranty of any kind. We do not accept any responsibility or liability for the accuracy, content, images, videos, licenses, completeness, legality, or reliability of the information contained in this article. If you have any complaints or copyright issues related to this article, kindly contact the author above.

2025-09-10

The poster presentation, titled Safety, Tolerability and Distribution of Topical Laquinimod Eye Drops, an Innovative Immunomodulator Targeting Aryl Hydrocarbon Receptor: The LION Study, emphasizes, among other findings, that topical laquinimod was well tolerated with no toxicity or drug related adverse events at doses resulting in therapeutically relevant concentrations of laquinimod in the posterior parts of the eye.

“These results support further investigation of laquinimod’s therapeutic potential in inflammatory eye diseases. There is unmet need for effective, steroid-sparing topical anti-inflammatory agents with favorable safety profiles that can reach the posterior segment,” said Dr. Dalia Mohammed El Feky, a Visiting Scholar at the Byers Eye Institute, Stanford University School of Medicine, Palo Alto, CA.

The results from the LION study show that daily doses of 0.6 mg, 1.2 mg, or 1.8 mg produced dose-related intraocular concentrations of laquinimod that reached therapeutically relevant levels in both the vitreous humor and the anterior chamber. Patients scheduled to undergo pars plana vitrectomy for various indications received laquinimod eye drops daily during a 14-day preoperative period.

Laquinimod administered as eye drops at the chosen daily dose levels was safe and well tolerated for the period of administration studied, and no dose-limiting toxicities were reported in any of the subjects.

“Laquinimod has a distinct immunomodulatory mechanism compared to conventional immunosuppressants. Its effects go beyond simple suppression, as it promotes a durable shift from an autoimmune-prone landscape to a self-tolerant, balanced state and enhances neuronal protection and repair mechanisms in addition to its anti-inflammatory properties. Given these unique immunological and neuroprotective effects, along with its ability to reach the posterior segment, laquinimod holds significant promise for investigation in patients with uveitis as well as patients with macular edema secondary to inflammation (MESI),” said Quan Đông Nguyen, MD, MSc, FAAO, FARVO, FASRS, Professor of Ophthalmology, Medicine and Pediatrics at the Byers Eye Institute and the Stanford University School of Medicine (Palo Alto, USA) and Principal Investigator of the LION Study.

Earlier this year, on June 27, Dr. El Feky presented the top-line results from the LION study orally at the 2025 International Ocular Inflammation Society (IOIS) Congress in Rio de Janeiro, Brazil, the world's largest scientific meeting in the field of uveitis and ocular inflammation.

Active Biotech’s focus for the laquinimod program is now directed towards identifying the best development partner for the continued clinical development of laquinimod in eye disorders.

The abstract is available online at https://aao.apprisor.org/apsSession.cfm?id=PO718

Clinical results · Phase 1

2025-09-10

NEW YORK, Sept. 10, 2025 (GLOBE NEWSWIRE) -- TG Therapeutics, Inc. (NASDAQ: TGTX), today announced the upcoming schedule of presentations highlighting BRIUMVI® (ublituximab-xiiy) data in patients with relapsing forms of multiple sclerosis (RMS), at the 2025 European Committee for Treatment and Research in Multiple Sclerosis (ECTRIMS) annual meeting, being held September 24 - 26, 2025 in Barcelona, Spain. Abstracts are now available online and can be accessed on the ECTRIMS meeting website or at the following link: ECTRIMS 2025 PROGRAMME. Details of the upcoming presentations are outlined below.

TG PRESENTATIONS:

Oral Presentation: Long-term Efficacy and Safety of Ublituximab in Relapsing Multiple Sclerosis: Results from Six Years of ULTIMATE I and II Open-label Extension

Presentation Date/Time: Wednesday, September 24th at 14:55 – 15:05 CEST (8:55am ET – 9:05am ET)

Session: Free Communication 2: Therapeutic interventions - from trials to real-world evidence – Lecture Hall 117

Abstract Number: ECTRIMS25-1571

Lead Author: Dr. Bruce Cree - Weill Institute for Neurosciences, University of California, San Francisco, CA, United States

ePoster Presentation: Safety and Tolerability of a Modified Ublituximab Dosing Regimen: Updates from the ENHANCE Study

Date/Time: ePoster will be made available on ECTRIMS platform beginning Wednesday, September 24th and will remain available for 3 months after the congress ends

Abstract Number/ePoster Number: ECTRIMS25-1575/P1699

Lead Author: Barry A. Singer, MD - Director, The MS Center for Innovations in Care - Missouri Baptist Medical Center, St. Louis, MO, United States

ePoster Presentation: Real-World Clinical Experience from ENABLE: the First Phase 4 Observational Study for Patients with Relapsing Multiple Sclerosis Initiating Ublituximab

Presentation Date/Time: ePoster will be made available on ECTRIMS platform beginning Wednesday, September 24th and will remain available for 3 months after the congress ends

Abstract Number/ePoster Number: ECTRIMS25-1597/P1833

Lead Author: Dr. Carrie Hersh, DO - Lou Ruvo Center for Brain Health, Cleveland Clinic, Las Vegas, NV, United States

Following the presentations, the data presented will be available on the Publications page, located within the Pipeline section, of the Company’s website at www.tgtherapeutics.com/publications.cfm.

ABOUT THE ULTIMATE I & II PHASE 3 TRIALS

ULTIMATE I & II are two randomized, double-blind, double-dummy, parallel group, active comparator-controlled clinical trials of identical design, in patients with RMS treated for 96 weeks. Patients were randomized to receive either BRIUMVI, given as an IV infusion of 150 mg administered in four hours, 450 mg two weeks after the first infusion administered in one hour, and 450 mg every 24 weeks administered in one hour, with oral placebo administered daily; or teriflunomide, the active comparator, given orally as a 14 mg daily dose with IV placebo administered on the same schedule as BRIUMVI. Both studies enrolled patients who had experienced at least one relapse in the previous year, two relapses in the previous two years, or had the presence of a T1 gadolinium (Gd)-enhancing lesion in the previous year. Patients were also required to have an Expanded Disability Status Scale (EDSS) score from 0 to 5.5 at baseline. The ULTIMATE I & II trials enrolled a total of 1,094 patients with RMS across 10 countries. These trials were led by Lawrence Steinman, MD, Zimmermann Professor of Neurology & Neurological Sciences, and Pediatrics at Stanford University. Additional information on these clinical trials can be found at www.clinicaltrials.gov (NCT03277261; NCT03277248).

ABOUT BRIUMVI® (ublituximab-xiiy) 150 mg/6 mL Injection for IV

BRIUMVI is a novel monoclonal antibody that targets a unique epitope on CD20-expressing B-cells. Targeting CD20 using monoclonal antibodies has proven to be an important therapeutic approach for the management of autoimmune disorders, such as RMS. BRIUMVI is uniquely designed to lack certain sugar molecules normally expressed on the antibody. Removal of these sugar molecules, a process called glycoengineering, allows for efficient B-cell depletion at low doses.

BRIUMVI is indicated in the U.S. for the treatment of adults with RMS, including clinically isolated syndrome, relapsing-remitting disease, and active secondary progressive disease and in the EU and UK for the treatment of adult patients with RMS with active disease defined by clinical or imaging features.

A list of authorized specialty distributors can be found at www.briumvi.com.

IMPORTANT SAFETY INFORMATION

Contraindications: BRIUMVI is contraindicated in patients with:

Active Hepatitis B Virus infection

A history of life-threatening infusion reaction to BRIUMVI

WARNINGS AND PRECAUTIONS

Infusion Reactions: BRIUMVI can cause infusion reactions, which can include pyrexia, chills, headache, influenza-like illness, tachycardia, nausea, throat irritation, erythema, and an anaphylactic reaction. In MS clinical trials, the incidence of infusion reactions in BRIUMVI-treated patients who received infusion reaction-limiting premedication prior to each infusion was 48%, with the highest incidence within 24 hours of the first infusion. 0.6% of BRIUMVI-treated patients experienced infusion reactions that were serious, some requiring hospitalization.

Observe treated patients for infusion reactions during the infusion and for at least one hour after the completion of the first two infusions unless infusion reaction and/or hypersensitivity has been observed in association with the current or any prior infusion. Inform patients that infusion reactions can occur up to 24 hours after the infusion. Administer the recommended pre-medication to reduce the frequency and severity of infusion reactions. If life-threatening, stop the infusion immediately, permanently discontinue BRIUMVI, and administer appropriate supportive treatment. Less severe infusion reactions may involve temporarily stopping the infusion, reducing the infusion rate, and/or administering symptomatic treatment.

Infections: Serious, life-threatening or fatal, bacterial and viral infections have been reported in BRIUMVI-treated patients. In MS clinical trials, the overall rate of infections in BRIUMVI-treated patients was 56% compared to 54% in teriflunomide-treated patients. The rate of serious infections was 5% compared to 3% respectively. There were 3 infection-related deaths in BRIUMVI-treated patients. The most common infections in BRIUMVI-treated patients included upper respiratory tract infection (45%) and urinary tract infection (10%). Delay BRIUMVI administration in patients with an active infection until the infection is resolved.

Consider the potential for increased immunosuppressive effects when initiating BRIUMVI after immunosuppressive therapy or initiating an immunosuppressive therapy after BRIUMVI.

Hepatitis B Virus (HBV) Reactivation: HBV reactivation occurred in an MS patient treated with BRIUMVI in clinical trials. Fulminant hepatitis, hepatic failure, and death caused by HBV reactivation have occurred in patients treated with anti-CD20 antibodies. Perform HBV screening in all patients before initiation of treatment with BRIUMVI. Do not start treatment with BRIUMVI in patients with active HBV confirmed by positive results for HB surface antigen (HBsAg) and anti-HB tests. For patients who are negative for HBsAg and positive for HB core antibody [HBcAb+] or are carriers of HBV [HBsAg+], consult a liver disease expert before starting and during treatment.

Progressive Multifocal Leukoencephalopathy (PML): Although no cases of PML have occurred in BRIUMVI-treated MS patients, JC virus infection resulting in PML has been observed in patients treated with other anti-CD20 antibodies and other MS therapies.

If PML is suspected, withhold BRIUMVI and perform an appropriate diagnostic evaluation. Typical symptoms associated with PML are diverse, progress over days to weeks, and include progressive weakness on one side of the body or clumsiness of limbs, disturbance of vision, and changes in thinking, memory, and orientation leading to confusion and personality changes.

MRI findings may be apparent before clinical signs or symptoms; monitoring for signs consistent with PML may be useful. Further investigate suspicious findings to allow for an early diagnosis of PML, if present. Following discontinuation of another MS medication associated with PML, lower PML-related mortality and morbidity have been reported in patients who were initially asymptomatic at diagnosis compared to patients who had characteristic clinical signs and symptoms at diagnosis.

If PML is confirmed, treatment with BRIUMVI should be discontinued.

Vaccinations: Administer all immunizations according to immunization guidelines: for live or live-attenuated vaccines, at least 4 weeks and, whenever possible, at least 2 weeks prior to initiation of BRIUMVI for non-live vaccines. BRIUMVI may interfere with the effectiveness of non-live vaccines. The safety of immunization with live or live-attenuated vaccines during or following administration of BRIUMVI has not been studied. Vaccination with live virus vaccines is not recommended during treatment and until B-cell repletion.

Vaccination of Infants Born to Mothers Treated with BRIUMVI During Pregnancy: In infants of mothers exposed to BRIUMVI during pregnancy, assess B-cell counts prior to administration of live or live-attenuated vaccines as measured by CD19+ B-cells. Depletion of B-cells in these infants may increase the risks from live or live-attenuated vaccines. Inactivated or non-live vaccines may be administered prior to B-cell recovery. Assessment of vaccine immune responses, including consultation with a qualified specialist, should be considered to determine whether a protective immune response was mounted.

Fetal Risk: Based on data from animal studies, BRIUMVI may cause fetal harm when administered to a pregnant woman. Transient peripheral B-cell depletion and lymphocytopenia have been reported in infants born to mothers exposed to other anti-CD20 B-cell depleting antibodies during pregnancy. Advise females of reproductive potential to use effective contraception during BRIUMVI treatment and for 6 months after the last dose.

Reduction in Immunoglobulins: As expected with any B-cell depleting therapy, decreased immunoglobulin levels were observed. Decrease in immunoglobulin M (IgM) was reported in 0.6% of BRIUMVI-treated patients compared to none of the patients treated with teriflunomide in RMS clinical trials. Monitor the levels of quantitative serum immunoglobulins during treatment, especially in patients with opportunistic or recurrent infections, and after discontinuation of therapy, until B-cell repletion. Consider discontinuing BRIUMVI therapy if a patient with low immunoglobulins develops a serious opportunistic infection or recurrent infections, or if prolonged hypogammaglobulinemia requires treatment with intravenous immunoglobulins.

Liver Injury: Clinically significant liver injury, without findings of viral hepatitis, has been reported in the postmarketing setting in patients treated with anti-CD20 B-cell depleting therapies approved for the treatment of MS, including BRIUMVI. Signs of liver injury, including markedly elevated serum hepatic enzymes with elevated total bilirubin, have occurred from weeks to months after administration.

Patients treated with BRIUMVI found to have an alanine aminotransferase (ALT) or aspartate aminotransferase (AST) greater than 3x the upper limit of normal (ULN) with serum total bilirubin greater than 2x ULN are potentially at risk for severe drug-induced liver injury.

Obtain liver function tests prior to initiating treatment with BRIUMVI, and monitor for signs and symptoms of any hepatic injury during treatment. Measure serum aminotransferases, alkaline phosphatase, and bilirubin levels promptly in patients who report symptoms that may indicate liver injury, including new or worsening fatigue, anorexia, nausea, vomiting, right upper abdominal discomfort, dark urine, or jaundice. If liver injury is present and an alternative etiology is not identified, discontinue BRIUMVI.

Most Common Adverse Reactions: The most common adverse reactions in RMS trials (incidence of at least 10%) were infusion reactions and upper respiratory tract infections.

Physicians, pharmacists, or other healthcare professionals with questions about BRIUMVI should visit www.briumvi.com.

The full Summary of Product Characteristics approved in the European Union (EU) for BRIUMVI can be found on the European Medicines Agency website (europa.eu).

ABOUT BRIUMVI PATIENT SUPPORT in the U.S.

BRIUMVI Patient Support is a flexible program designed by TG Therapeutics to support U.S. patients through their treatment journey in a way that works best for them. More information about the BRIUMVI Patient Support program can be accessed at www.briumvipatientsupport.com.

ABOUT TG THERAPEUTICS

TG Therapeutics is a fully integrated, commercial stage, biopharmaceutical company focused on the acquisition, development and commercialization of novel treatments for B-cell diseases. In addition to a research pipeline including several investigational medicines, TG Therapeutics has received approval from the U.S. Food and Drug Administration (FDA) for BRIUMVI® (ublituximab-xiiy) for the treatment of adult patients with relapsing forms of multiple sclerosis, including clinically isolated syndrome, relapsing-remitting disease, and active secondary progressive disease, as well as approval by the European Commission (EC) in Europe, the Medicines and Healthcare Products Regulatory Agency (MHRA) in the United Kingdom, Swissmedic in Switzerland, and Australia’s Therapeutic Goods Administration (TGA) for BRIUMVI to treat adult patients with RMS who have active disease defined by clinical or imaging features. For more information, visit www.tgtherapeutics.com, and follow us on X (formerly Twitter) @TGTherapeutics and on LinkedIn.

BRIUMVI® is a registered trademark of TG Therapeutics, Inc.

Cautionary Statement

This press release contains forward-looking statements that involve a number of risks and uncertainties. For those statements, we claim the protection of the safe harbor for forward-looking statements contained in the Private Securities Litigation Reform Act of 1995.

Any forward-looking statements in this press release are based on management's current expectations and beliefs and are subject to a number of risks, uncertainties and important factors that may cause actual events or results to differ materially from those expressed or implied by any forward-looking statements contained in this press release. In addition to the risk factors identified from time to time in our reports filed with the U.S. Securities and Exchange Commission (SEC), factors that could cause our actual results to differ materially include the below.

Such forward-looking statements include but are not limited to statements regarding the results of the long term safety and efficacy from the ULTIMATE I & II Phase 3 studies, the ENHANCE Phase 3b study, the ENABLE Phase 4 Observational Study, and BRIUMVI as a treatment for relapsing forms of multiple sclerosis (RMS). Additional factors that could cause our actual results to differ materially include the following: the risk that the data from the ULTIMATE I & II long term open label extension, ENHANCE, or ENABLE trials that we announce or publish may change, or the product profile of BRIUMVI may be impacted, as more data or additional endpoints are analyzed; the risk that data may emerge from future clinical studies or from adverse event reporting that may affect the safety and tolerability profile and commercial potential of BRIUMVI; the risk that any individual patient’s clinical experience in the post-marketing setting, or the aggregate patient experience in the post-marketing setting, may differ from that demonstrated in controlled clinical trials such as ULTIMATE I and II; our ability to successfully market and sell BRIUMVI in RMS; the Company’s reliance on third parties for manufacturing, distribution and supply, and a range of other support functions for our commercial and clinical products, including BRIUMVI, and the ability of the Company and its manufacturers and suppliers to produce and deliver BRIUMVI to meet the market demand for BRIUMVI; the failure to obtain and maintain requisite regulatory approvals, including the risk that the Company fails to satisfy post-approval regulatory requirements; the uncertainties inherent in research and development; and general political, economic and business conditions. Further discussion about these and other risks and uncertainties can be found in our Annual Report on Form 10-K for the fiscal year ended December 31, 2024 and in our other filings with the U.S. Securities and Exchange Commission.

Any forward-looking statements set forth in this press release speak only as of the date of this press release. We do not undertake to update any of these forward-looking statements to reflect events or circumstances that occur after the date hereof. This press release and prior releases are available at www.tgtherapeutics.com. The information found on our website is not incorporated by reference into this press release and is included for reference purposes only.

CONTACT:

Investor Relations: Email: ir@tgtxinc.com Telephone: 1.877.575.TGTX (8489), Option 4

Media Relations: Email: media@tgtxinc.com Telephone: 1.877.575.TGTX (8489), Option 6

Phase 3 · Clinical results · Marketing approval · Phase 2

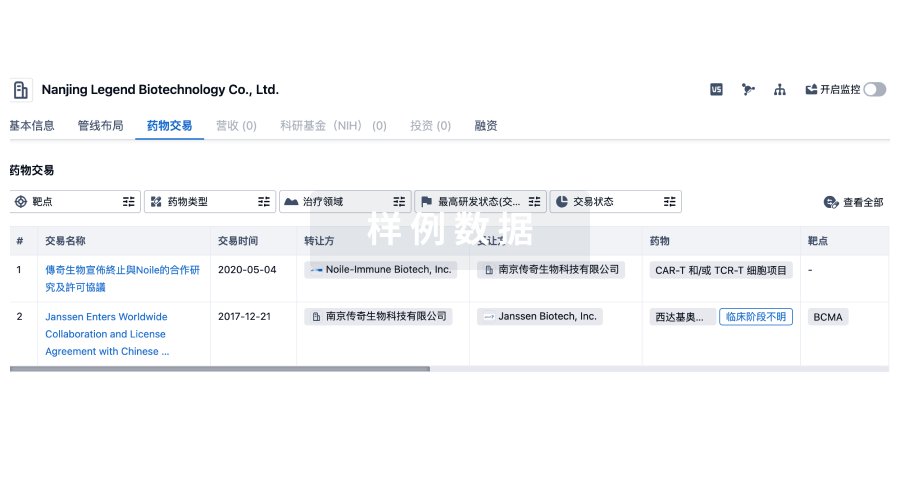

100 项与 Stanford University 相关的药物交易

登录后查看更多信息

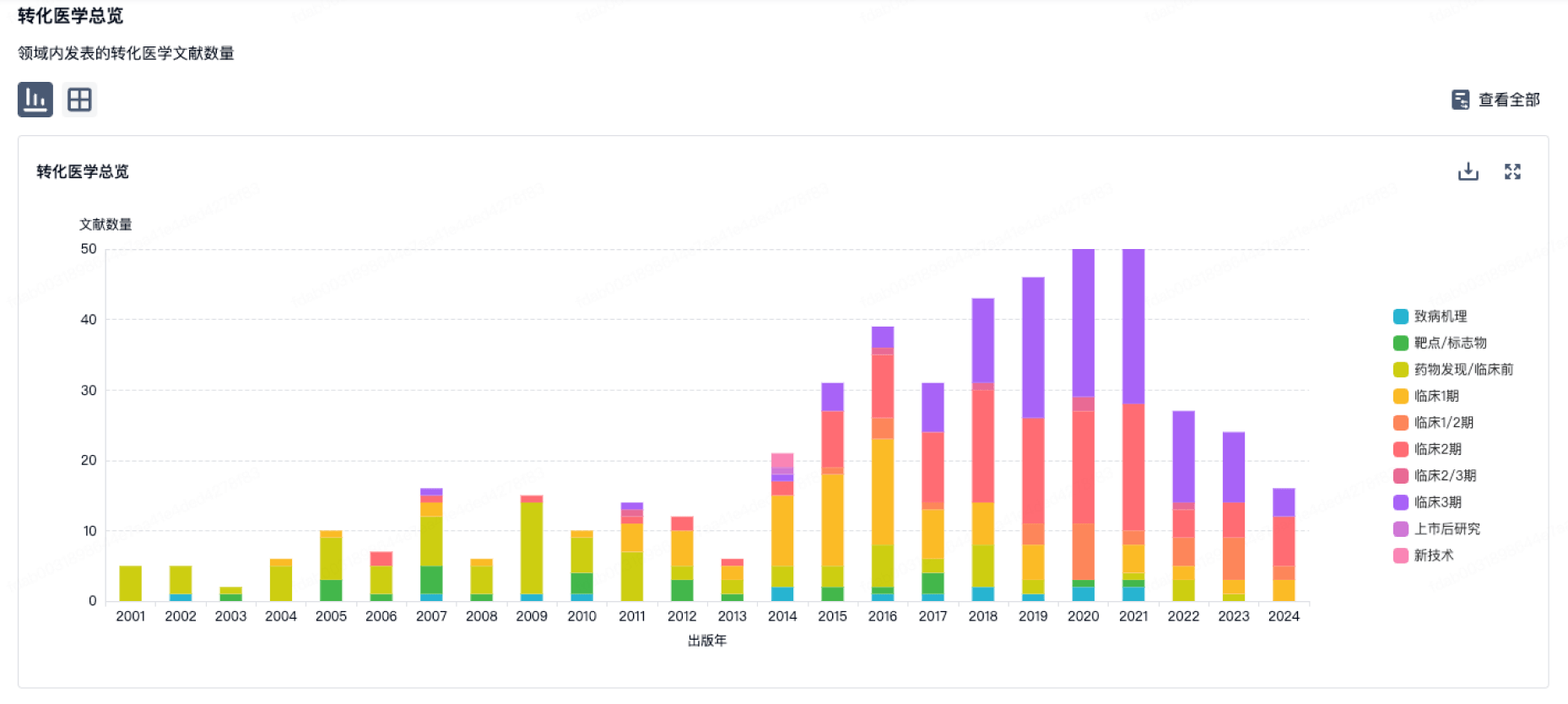

100 项与 Stanford University 相关的转化医学

登录后查看更多信息

Organizational structure

Accelerate your research with our organization-tree data.

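Pipeline

Pipeline snapshot as of 2025-10-05

Pipeline counts include drugs for which the current organization or one of its subsidiaries is listed as a drug organization. Early Phase 1 is counted as Phase 1, Phase 1/2 as Phase 2, and Phase 2/3 as Phase 3.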

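| Stage | Number of drugs |
|---|---|
| Drug discovery | 15 |
| Preclinical | 123 |
| Phase 1 | 14 |
| Phase 2 | 16 |
| Phase 3 | 1 |
| Other | 72 |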
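The bucketing rule in the pipeline note (Early Phase 1 counted as Phase 1, Phase 1/2 as Phase 2, Phase 2/3 as Phase 3) amounts to relabeling each drug's phase before counting. The Python sketch below illustrates that idea only; the phase label strings and the function names `bucket_phase` and `tally_pipeline` are hypothetical and are not taken from Synapse's actual data model or API.

```python
from collections import Counter

# Hypothetical label strings; the real platform's phase vocabulary may differ.
# Intermediate phases are folded into the later of the two phases, per the note.
PHASE_BUCKETS = {
    "Early Phase 1": "Phase 1",
    "Phase 1/2": "Phase 2",
    "Phase 2/3": "Phase 3",
}


def bucket_phase(phase: str) -> str:
    """Return the pipeline stage a raw phase label is counted under."""
    # Labels without a special rule (e.g. "Preclinical") pass through unchanged.
    return PHASE_BUCKETS.get(phase, phase)


def tally_pipeline(phases: list[str]) -> Counter:
    """Count drugs per pipeline stage after merging intermediate phases."""
    return Counter(bucket_phase(p) for p in phases)


if __name__ == "__main__":
    example = ["Early Phase 1", "Phase 1", "Phase 1/2", "Phase 2", "Phase 2/3", "Phase 3"]
    print(tally_pipeline(example))
```

With this mapping, a drug listed at Phase 1/2 adds to the Phase 2 count rather than forming its own category, which is why the pipeline table above has no intermediate-phase rows.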


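Current projects

| Drug (target) | Indication | Highest global R&D status |
|---|---|---|
| Rotavirus vaccine I321 (Stanford University) | Rotavirus infection, and others | Phase 3 |
| [18F]F-AraG (DCK x DGUOK) | Advanced malignant solid tumors, and others | Phase 2 |
| Ipilimumab (CTLA4) | Unresectable melanoma, and others | Phase 2 |
| QBS-10072S (SLC7A5) | Meningeal tumors, and others | Phase 2 |
| Hymecromone (Hyaluronic acid) | Primary sclerosing cholangitis, and others | Phase 2 |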

Drug deals

Accelerate your research with our drug deal data.

Translational medicine

Accelerate your research with our translational medicine data.

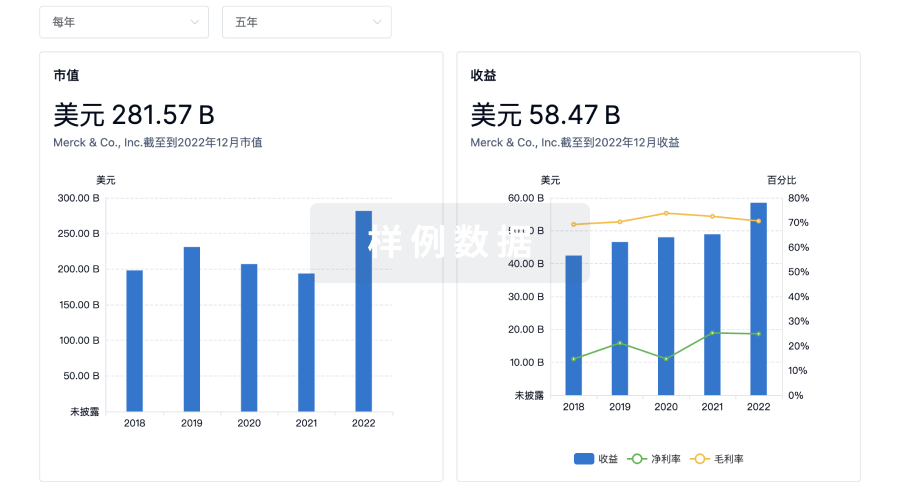

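Revenue

Explore the financials of more than 360,000 organizations with Synapse.

Research grants (NIH)

Access more than 2 million grants and funding records to advance your research.

Investments

Get in-depth insight into the latest company investments, from startups to established enterprises.

Financing

Discover financing trends to validate and advance your investment opportunities.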
