
Updated: 2025-09-09

Xihua University


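Overview

Tags

Urogenital diseases

Cardiovascular diseases

Hematologic and lymphatic diseases

Small-molecule drugs

Other in vivo diagnostic agents

Disease area score

See at a glance which disease areas the organization focuses on

No data

Technology platforms

Technologies most frequently used in the organization's drugs

No data

Targets

Targets the organization most frequently develops

No data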

| Top five drug types | Count |
|---|---|
| Small-molecule drugs | 5 |
| Other in vivo diagnostic agents | 1 |

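Associations

5 drugs associated with Xihua University

Mechanism of action: β2-adrenergic receptor agonist

Originator organization: -

Highest R&D phase: Marketing application filed

First approval country/region: -

First approval date: -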

100 clinical results associated with Xihua University

0 patents (pharmaceutical) associated with Xihua University

2,952 publications (pharmaceutical) associated with Xihua University

2026-02-01·SPECTROCHIMICA ACTA PART A-MOLECULAR AND BIOMOLECULAR SPECTROSCOPY

Gold nanoparticle-decorated hydrogen-bonded organic frameworks (HOFs@Au) as efficient SERS substrates and photocatalytic tools for ultrasensitive detection and degradation of bisphenol contaminants

Article

Authors: Yang, Xiaoyu; Liu, Yao; He, Yi; Yang, Xiao; Zhang, Runzi

With increasing concern for ecological, environmental, and food safety, developing synergistic systems that integrate efficient trace sensing of bisphenols with green photocatalytic degradation has become a current research focus. In this study, a novel surface-enhanced Raman scattering (SERS) sensing-degradation platform was developed for the detection and degradation of bisphenols by uniformly decorating hydrogen-bonded organic framework nanorods with gold nanoparticles (HOFs@Au). Owing to the strong molecular enrichment provided by the porous HOF structure and the strong localized surface plasmon resonance (LSPR) of the AuNPs, the composite exhibited excellent trace detection performance. Experimental results showed that HOFs@Au not only enabled trace SERS detection of bisphenol A (BPA), bisphenol S (BPS), and bisphenol F (BPF), but also delivered excellent photocatalytic degradation performance. The SERS sensor achieved a detection limit as low as 10⁻⁸ M, a high enhancement factor (EF) of 2.3 × 10⁵, and a photodegradation removal efficiency of up to 80%, providing a new approach to the precise monitoring and green remediation of bisphenol contaminants. The system shows great potential for ecological and environmental risk early warning and food safety monitoring.

2026-01-01·SPECTROCHIMICA ACTA PART A-MOLECULAR AND BIOMOLECULAR SPECTROSCOPY

Fluorescent imine-linked covalent organic framework turn-off sensor for sensitive Co²⁺ ion detection

Article

Authors: Liu, Siqing; Yang, Fan; Li, Panjie; Luo, Xiaojun; Fei, Deting; Wang, Qiwei; Yang, Mengdie; Zhang, Yunxin

The development of innovative materials capable of detecting heavy metal ions is a crucial goal in environmental remediation. However, achieving high selectivity for specific metal ions remains a challenge. This study designs a novel imine-based covalent organic framework (COF), prepared via Schiff base condensation, for efficient detection of cobalt ions (Co²⁺) in environmental samples. The π-conjugated system provides excellent fluorescence properties. The COF contains tertiary amine and imine structures with nitrogen and oxygen atoms, which act as Lewis bases that coordinate with Co²⁺. This interaction disrupts the conjugated structure and quenches the fluorescence. The experimental results show that the proposed method stands out for Co²⁺ detection, with remarkable simplicity, exceptional sensitivity, rapid response, and easy operation. The imine-based COF sensor exhibits high sensitivity (limit of detection = 8.5 × 10⁻¹³ M) and excellent selectivity and stability in fluorescence detection, enabling accurate identification of Co²⁺ in complex environments. This research not only presents an efficient and practical approach to Co²⁺ detection but also offers new theoretical perspectives on the design and application of imine-based COFs, laying a solid foundation for COF-based environmental monitoring technologies.

2026-01-01·TALANTA

A colorimetric DNAzyme biosensor based on G-triplex/hemin for detecting ofloxacin in food

Article

Authors: Wang, Chong; Huang, Yukun; Xu, Chi; Huang, Xiaojun; Chen, Xianggui; Mahmood Khan, Imran; Pan, Xiaomei; Tang, Jie; Ran, Wenchao

Ofloxacin, a synthetic antimicrobial drug, has significant therapeutic potential against various bacterial infections. Research into ofloxacin's antimicrobial properties and the unique catalytic activity of G-triplex (G3) DNAzymes reflects continuing efforts to develop cutting-edge biosensors for bacterial detection. G3 outperforms G4 because its sequence is short and easily formed and dissociated, which allows G3 multimeric sequences to fold more accurately than G4 sequences of equivalent length and gives the resulting G3/hemin complex higher activity. This study constructed a novel colorimetric DNAzyme biosensor based on G3/hemin for detecting ofloxacin in food. Aptamer-functionalized Fe₃O₄ magnetic nanoparticles served as magnetic separation and detection probes, while G3/hemin served as the DNAzyme. Under optimum conditions, the presence of ofloxacin initiated catalytic oxidation that yielded a colored product, allowing ofloxacin to be quantified over a linear range of 50-300 nmol/L. Under the optimized conditions, a limit of detection of 15.25 nmol/L was obtained by directly detecting the fluorescent signals, clearly outperforming previously reported methods. The method is simple and rapid and gave high recoveries in spiking experiments on real samples such as beef jerky and ham sausage, paving the way for its wider use in biomedical diagnostics, environmental monitoring, and food safety.

1 news item (pharmaceutical) associated with Xihua University

2022-07-17·研发客 (PharmaDJ)

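Beyond patents, trade secrets closely tied to vaccine manufacturing are another source of competitive advantage for originator vaccine companies. Neither EU nor US intellectual property law currently contains specific provisions that allow competitors or governments to compel access to trade secrets. Unless a pharmaceutical company shares them voluntarily, obtaining a license from the IP holder is very difficult.

On June 17, the World Trade Organization (WTO) issued a Ministerial Decision on the Agreement on Trade-Related Aspects of Intellectual Property Rights (TRIPS) that allows developing countries such as South Africa, India, and China to waive patents on COVID-19 vaccine ingredients and manufacturing processes. Eligible members may implement the waiver through the compulsory licensing system as well as through executive orders, emergency decrees, government-use authorizations, and similar instruments.

According to the Ministry of Commerce website, Li Chenggang, China's Ambassador to the WTO, told the WTO General Council in May 2022 that "China is an important contributor to the global fight against the pandemic and a firm supporter of the COVID-19 vaccine IP waiver." He also stated that, with some key issues still unresolved, China "will not seek to use the flexibilities provided by this decision." This suggests that China is unlikely to initiate compulsory licenses on foreign companies' COVID-19 vaccine patents, so domestic companies will not obtain licenses to those patents this way.

What does compulsory patent licensing mean for Chinese companies developing COVID-19 vaccines? Will waiving patents actually improve vaccine accessibility? 研发客 and Jiren Law Firm co-hosted a patent seminar series to discuss these questions.

Lawyer He Jing of Jiren Law Firm believes the decision will have limited impact on China's vaccine industry. "First, China's COVID-19 vaccines are mainly inactivated vaccines, which have low patent density, so the harm to Chinese vaccine companies is manageable. Second, China's large vaccine manufacturers have not yet built extensive COVID-19 vaccine patent portfolios abroad, nor have they rolled out large-scale production overseas."

Dr. Cao Jingjing, Vice General Manager of WestVac Biopharma, sees it differently: "In the vaccine field, developed countries have a first-mover advantage in overseas markets, and innovation depends on international collaboration and licensing. At present, vaccines made by Chinese companies have limited recognition in overseas markets, these companies have little market-access experience, and their marketing, logistics, and infrastructure are weak. If Chinese companies cannot benefit from the patent waiver, in the long run they may be unable to compete fairly with vaccine manufacturers in South Africa and India."

"Predictably, the WTO's COVID-19 vaccine patent waiver will affect the platform development of related domestic companies and the export of their future products. Against this background, our policymakers and vaccine companies need to consider and prepare a new global strategic mechanism to protect themselves and incentivize our industry," Dr. Cao said.

"Domestic public health experts and researchers working on access to medicines need to study this systematically, and China's compulsory patent licensing system also needs to be improved accordingly," said Professor Ji Wenhua of the School of Law, University of International Business and Economics.

A patent waiver does not guarantee a product reaches the market

Are patents really the main factor limiting vaccine production capacity and accessibility? "Because many developing countries lack an industrial base, even with a patent license they may not be able to produce qualified products. This is especially true of mRNA vaccines, which involve many new technologies: manufacturers also need the trade secrets covering know-how that the patents do not disclose. How much practical value the patent waiver has is debatable," said He Jing.

Pharmaceutical companies typically protect the vaccines they develop with a range of intellectual property rights. Patents attract the most attention and cover the vaccine's composition, manufacturing process, delivery devices, and so on. Another important form of vaccine-related IP is trade secrets, which are often what gives originator companies their real competitive edge. Trade secrets relate closely to vaccine manufacturing, and none of this information is public.

Among the existing COVID-19 vaccines, mRNA vaccines are the most challenging to manufacture because they rely on entirely new technology. Although the production steps and required materials are publicly known, the combination of steps and techniques needed to produce a qualified finished product has been validated through extensive experimentation and trial and error. This knowledge, held by specialized operators, is applied in purifying, formulating, filling, sampling, and testing the vaccine's active ingredient. Only when every step meets defined testing standards can the vaccine's consistency and purity be ensured. Production also requires special precision equipment: for example, making the lipid nanoparticles used in mRNA vaccines requires a custom microfiltration device that produces qualified raw material by precisely controlling mixing, flow rate, concentration, and temperature.

In addition, pharmaceutical companies treat data collected in clinical trials as trade secrets, and such information is protected by national data-protection regulations. For example, Article 39 of TRIPS requires WTO members to protect test data submitted to regulators against unfair commercial use and disclosure.

Vaccines are complex biological products. The steps, methods, and equipment required to make them, the experience engineers accumulate in controlling the whole process, and the trial data together constitute trade secrets, which, along with patents, give vaccines comprehensive IP protection.

The COVID-19 vaccine patent waiver has now been adopted, but "even for the few pharmaceutical companies that already have R&D and manufacturing capabilities, products can only truly become accessible where there is economic feasibility and political momentum. On the positive side, the progress and outcome of this waiver will set a precedent and have institutional influence on future international discussions of IP protection and public health, and on responses to major health crises," said Professor Ji Wenhua.

Obtaining complete vaccine technology is difficult

Since the COVID-19 pandemic began, many in the industry have actively pushed to expand vaccine access, but global vaccine distribution and uptake remain far from satisfactory. Two years into the pandemic, fewer than 15% of people in many developing countries had been vaccinated, while people in some high-income countries were receiving a fourth dose. People in the lowest-income countries may have to wait several more years to be vaccinated.

Figure: Vaccination status in countries of different income levels (image source: CGD)

To help developing countries obtain vaccine-production technology, the WHO set up the COVID-19 Technology Access Pool (C-TAP) in May 2020, calling on pharmaceutical companies to voluntarily share COVID-19-related intellectual property and data on vaccines, medicines, diagnostics, and more. However, the initiative achieved little, and most pharmaceutical companies declined to share vaccine technology through such schemes.

For patents, a compulsory licensing mechanism exists. The 2001 Declaration on the TRIPS Agreement and Public Health (the Doha Declaration) states that, when WTO members face public health needs, compulsory patent licensing is one of the flexibilities of the TRIPS Agreement, and all WTO members have the right to use it when necessary. However, as noted above, vaccines differ from small-molecule drugs, which are easier to copy through reverse engineering, so compulsory patent licensing is far from sufficient. One view holds that in the vaccine field "the manufacturing process is the product," and under a compulsory license the patent owner has no obligation to provide any information beyond what the patent specification contains.

Neither EU nor US intellectual property law currently contains specific provisions that allow competitors or governments to compel access to trade secrets. Unless a pharmaceutical company shares them voluntarily, obtaining a license from the IP holder is very difficult. For example, Canada's Biolyse, which has strong vaccine-manufacturing capabilities and planned to supply vaccines to developing countries, was refused when it asked Johnson & Johnson for a license to the core technology of its adenovirus vaccine.

The feasibility of compulsory licensing of trade secrets

The TRIPS Agreement and the Doha Declaration set out important principles and objectives for protecting public health. Article 7 of the TRIPS Agreement provides that "the protection and enforcement of intellectual property rights should contribute to the promotion of technological innovation and to the transfer and dissemination of technology ... in a manner conducive to social and economic welfare," and Article 8 adds that "Members may, in formulating or amending their laws and regulations, adopt measures necessary to protect public health." Paragraph 4 of the Doha Declaration states that the TRIPS Agreement does not and should not prevent members from taking measures to protect public health, and that it should support WTO members' right to protect public health and, in particular, to promote access to medicines for all. During the COVID-19 pandemic, these provisions could provide a basis for compulsory licensing of trade secrets, protecting public health by promoting vaccine production through international technology transfer. Moreover, the TRIPS Agreement contains no specific provision prohibiting compulsory licensing of trade secrets, leaving national governments the flexibility to address the issue.

Some countries have already enacted relevant decrees. For example, on March 23, 2020, France enacted Emergency Law No. 2020-290, which empowers the Prime Minister to issue compulsory licenses so that the government can use any patent or patent application, as well as vaccine-related trade secrets. In a public health crisis, a government's emergency decree may provide a legal basis for compulsory licensing of trade secrets; the new question is how such a license should be enforced. Although many vaccine programs received public funding, the work pharmaceutical companies invested also deserves careful consideration. Whether for patents or trade secrets, assessing the feasibility of compulsory licensing requires balancing vaccine accessibility against the interests of originator companies and devising a reasonable transfer arrangement.

Issue No. 1658. Visit the 研发客 website for more articles: www.PharmaDJ.com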

Emergency use authorization, Small-molecule drugs, Vaccines, Collaboration, Messenger RNA

100 drug deals associated with Xihua University

100 translational medicine records associated with Xihua University

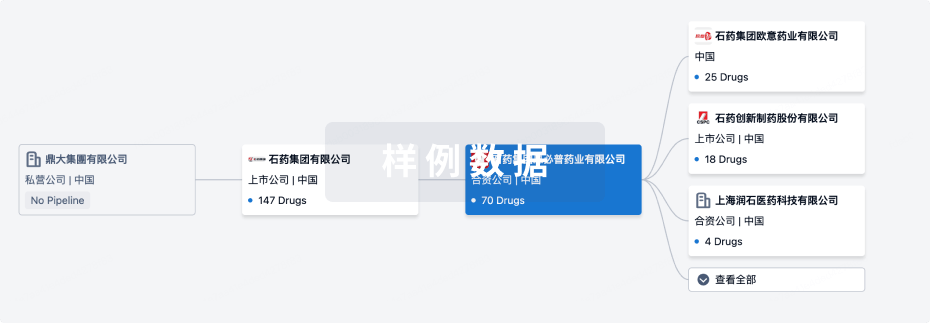

组织架构

使用我们的机构树数据加速您的研究。

登录

或

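Pipeline

Pipeline snapshot as of 2025-09-14

Pipeline counts include drugs for which the current organization or its subsidiaries are listed as the drug organization. Early Phase 1 is merged into Phase 1, Phase 1/2 into Phase 2, and Phase 2/3 into Phase 3.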

Drug discovery: 1

Preclinical: 4

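Current projects

| Drug (target) | Indication | Highest global R&D status |
|---|---|---|
| Compound 58 (Xihua University) (SIRT5) | Acute kidney injury | Preclinical |
| Higenamine hydrochloride (β2-adrenergic receptor) | Heart failure | Preclinical |
| XHU-58 (SIRT5) | Acute kidney injury | Preclinical |
| CSN5 inhibitor (Xihua University) (COPS5) | Neoplasms | Preclinical |
| CN116589612 (patent mining) | Hemolysis | Drug discovery |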

登录后查看更多信息

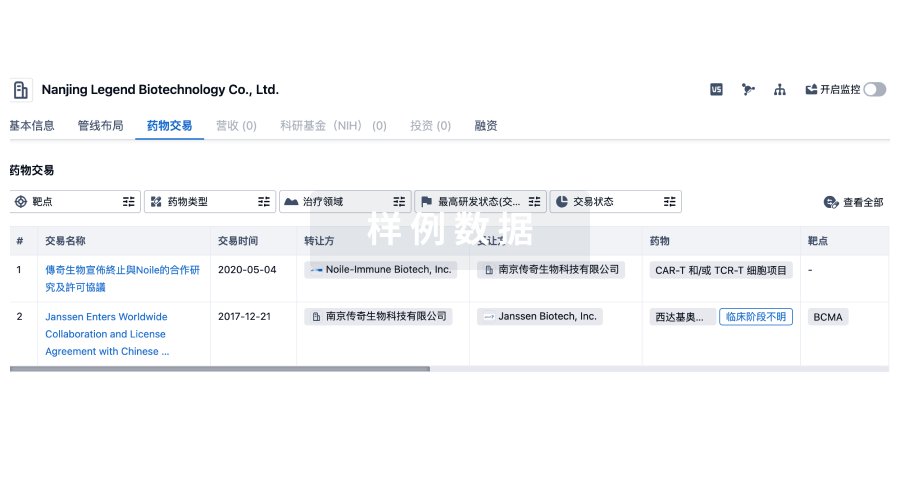

药物交易

使用我们的药物交易数据加速您的研究。

登录

或

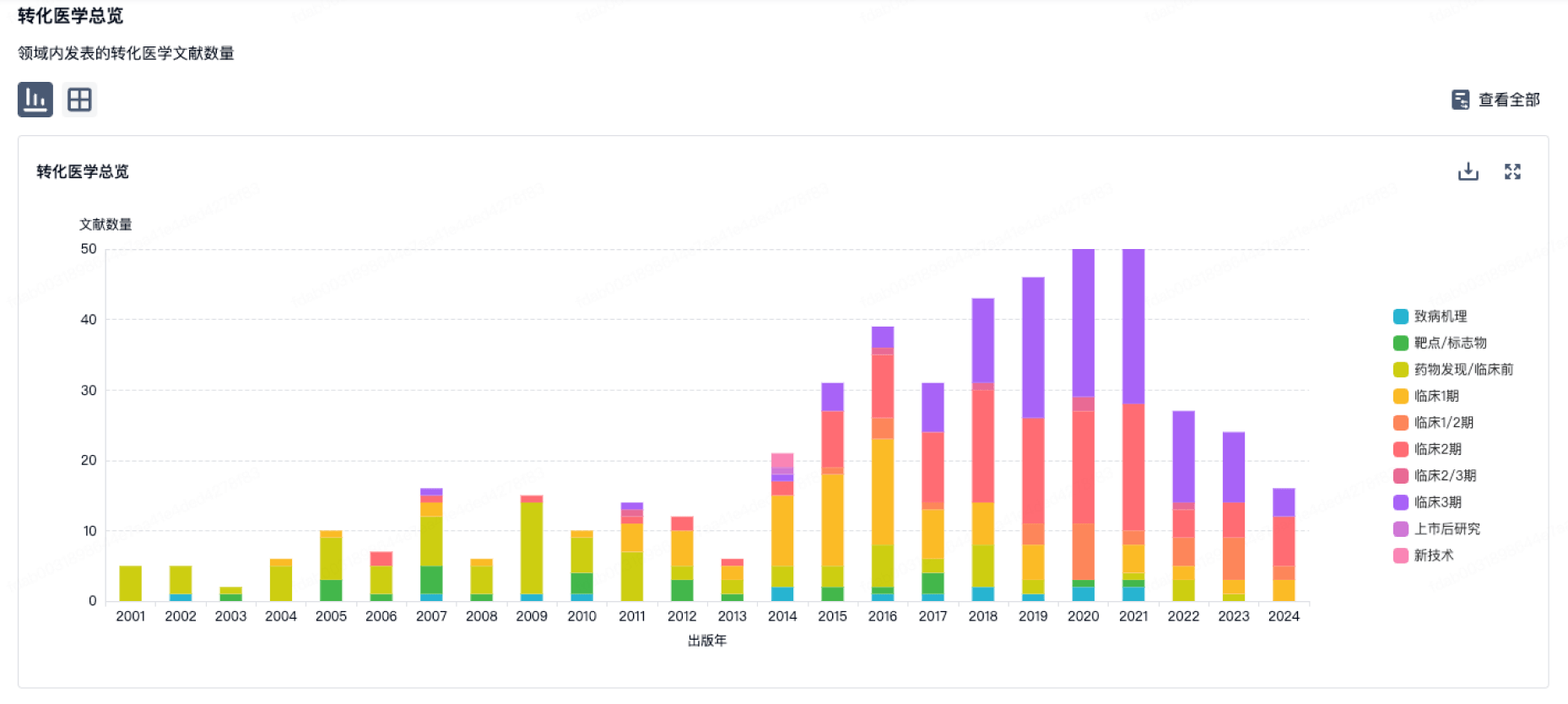

转化医学

使用我们的转化医学数据加速您的研究。

登录

或

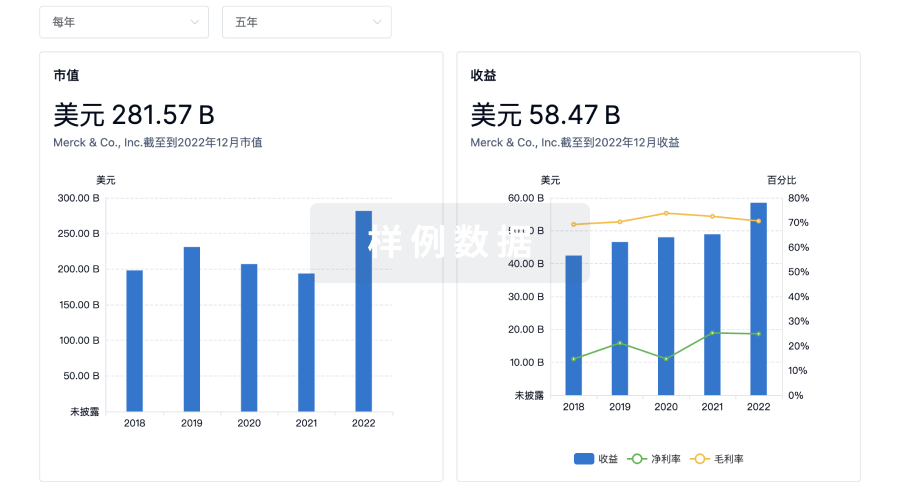

营收

使用 Synapse 探索超过 36 万个组织的财务状况。

登录

或

科研基金(NIH)

访问超过 200 万项资助和基金信息,以提升您的研究之旅。

登录

或

投资

深入了解从初创企业到成熟企业的最新公司投资动态。

登录

或

融资

发掘融资趋势以验证和推进您的投资机会。

登录

或

Eureka LS:

全新生物医药AI Agent 覆盖科研全链路,让突破性发现快人一步

立即开始免费试用!

智慧芽新药情报库是智慧芽专为生命科学人士构建的基于AI的创新药情报平台,助您全方位提升您的研发与决策效率。

立即开始数据试用!

智慧芽新药库数据也通过智慧芽数据服务平台,以API或者数据包形式对外开放,助您更加充分利用智慧芽新药情报信息。

生物序列数据库

生物药研发创新

免费使用

化学结构数据库

小分子化药研发创新

免费使用