
Updated: 2025-10-08

Aztreonam lysine

Chinese name: 氨曲南赖氨酸


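Overview

Basic information

Inactive organizations: -

Rights-holding organizations: -

Highest development phase: Approved

Highest development phase (China): -

Special review: -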


Structure/Sequence

Molecular formula: C19H31N7O10S2

InChIKey: KPPBAEVZLDHCOK-JHBYREIPSA-N

CAS No.: 827611-49-4

R&D status

Approved

Earliest approval records:

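| Indication | Country/Region | Company | Date |
|---|---|---|---|
| Cystic fibrosis | European Union | | 2009-09-21 |
| Cystic fibrosis | Iceland | | 2009-09-21 |
| Cystic fibrosis | Liechtenstein | | 2009-09-21 |
| Cystic fibrosis | Norway | | 2009-09-21 |
| Lung infection | European Union | | 2009-09-21 |
| Lung infection | Iceland | | 2009-09-21 |
| Lung infection | Liechtenstein | | 2009-09-21 |
| Lung infection | Norway | | 2009-09-21 |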

Not marketed

Most advanced records:

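| Indication | Highest development status | Country/Region | Company | Date |
|---|---|---|---|---|
| Pseudomonas aeruginosa infection | Phase 3 | United States | | 2011-12-01 |
| Pseudomonas aeruginosa infection | Phase 3 | France | | 2011-12-01 |
| Pseudomonas aeruginosa infection | Phase 3 | Germany | | 2011-12-01 |
| Pseudomonas aeruginosa infection | Phase 3 | Italy | | 2011-12-01 |
| Pseudomonas aeruginosa infection | Phase 3 | Poland | | 2011-12-01 |
| Pseudomonas aeruginosa infection | Phase 3 | Spain | | 2011-12-01 |
| Gram-negative bacterial infection | Phase 3 | United States | | 2011-04-01 |
| Gram-negative bacterial infection | Phase 3 | Australia | | 2011-04-01 |
| Gram-negative bacterial infection | Phase 3 | Belgium | | 2011-04-01 |
| Gram-negative bacterial infection | Phase 3 | Canada | | 2011-04-01 |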


Clinical results


| Phase | N | Arm / group | Result | Evaluation | Date |
|---|---|---|---|---|---|
| Phase 3 | 149 | Placebo+AZLI (AZLI 14 Days + Placebo 14 Days) | [obscured in source] | - | 2022-05-16 |
| | | (AZLI 28 Days) | [obscured in source] | | |
| N/A | 28 | | [obscured endpoint] = 67.86% | - | 2021-09-25 |
| Phase 3 | 107 | | [obscured in source] | - | 2016-05-09 |
| | | Placebo to match AZLI+Tobramycin inhalation solution (Placebo) | [obscured in source] | | |
| Phase 4 | 30 | Status Post Lung Transplant+AZLI | [obscured in source] | - | 2015-11-26 |
| Phase 3 | 61 | | [obscured in source] | - | 2014-05-01 |
| Phase 3 | 274 | (AZLI-AZLI) | [obscured in source]; visible value: 17.13 | - | 2014-04-16 |
| | | Placebo+AZLI (Placebo-AZLI) | [obscured in source]; visible value: 14.67 | | |
| Phase 3 | 266 | (AZLI-AZLI) | [obscured in source]; visible value: 21.37 | - | 2014-04-16 |
| | | Placebo+AZLI (Placebo-AZLI) | [obscured in source]; visible value: 13.62 | | |
| Phase 2 | 89 | | [obscured in source] | - | 2014-03-20 |
| Phase 3 | 102 | (AZLI) | [obscured in source]; visible value: 1.50 | - | 2014-03-11 |
| | | Placebo (Placebo) | [obscured in source]; visible value: 1.43 | | |
| Phase 3 | 273 | Aztreonam for inhalation solution (AZLI) | [obscured in source] | Positive | 2013-03-01 |
| | | Tobramycin nebulizer solution (TNS) | [obscured in source] | | |

登录后查看更多信息

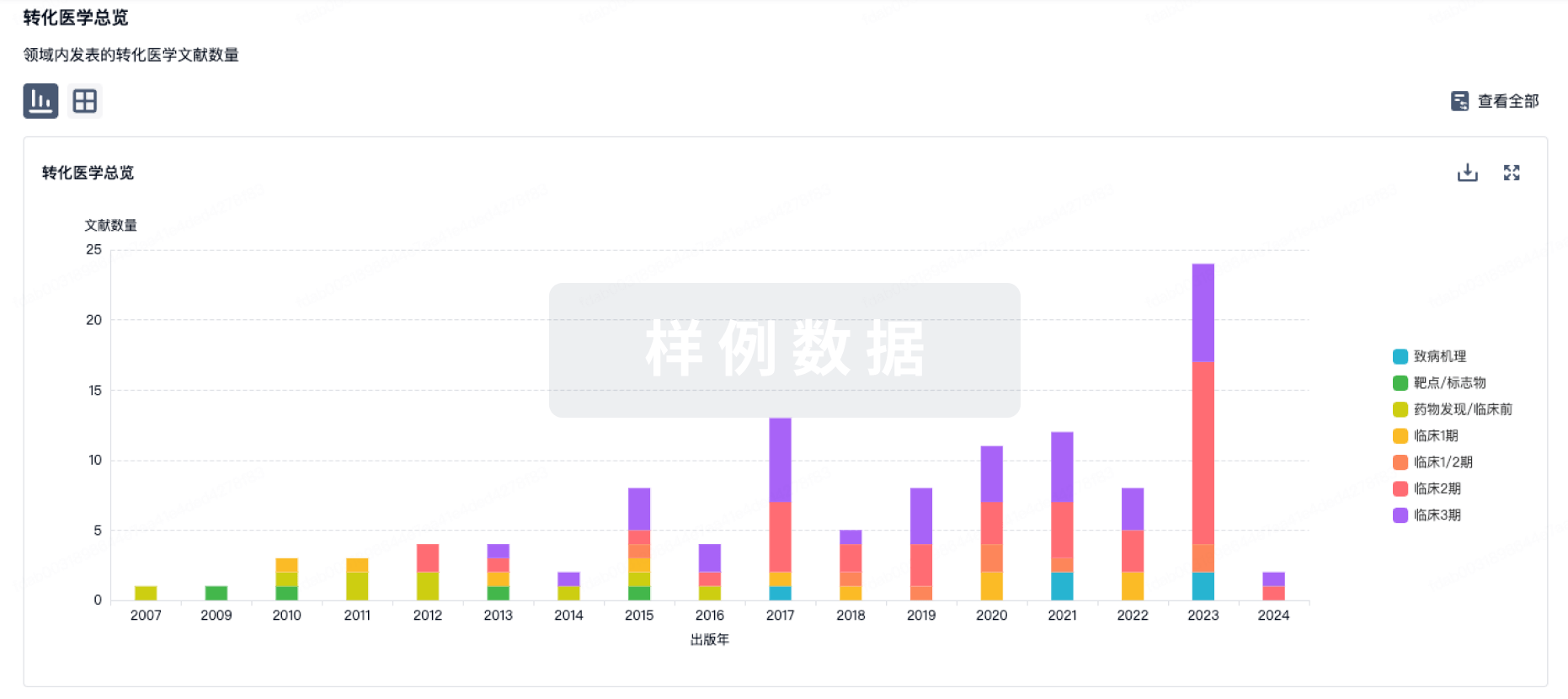

转化医学

使用我们的转化医学数据加速您的研究。

登录

或

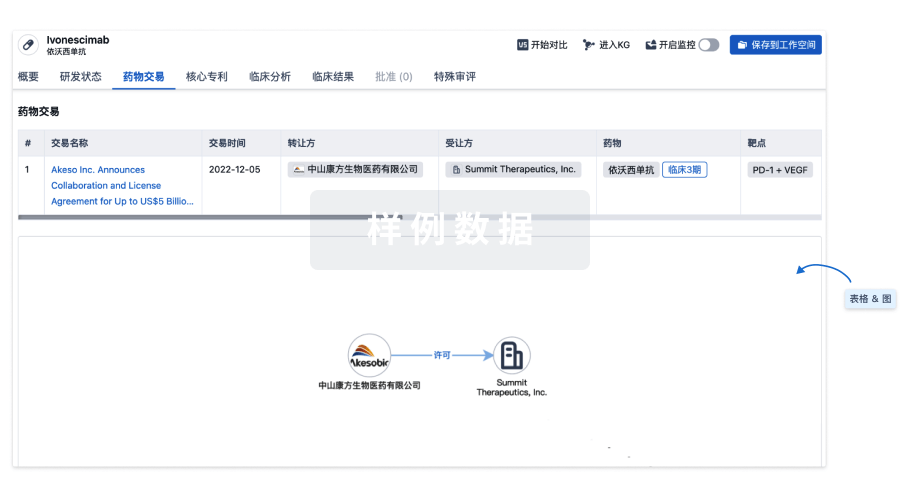

药物交易

使用我们的药物交易数据加速您的研究。

登录

或

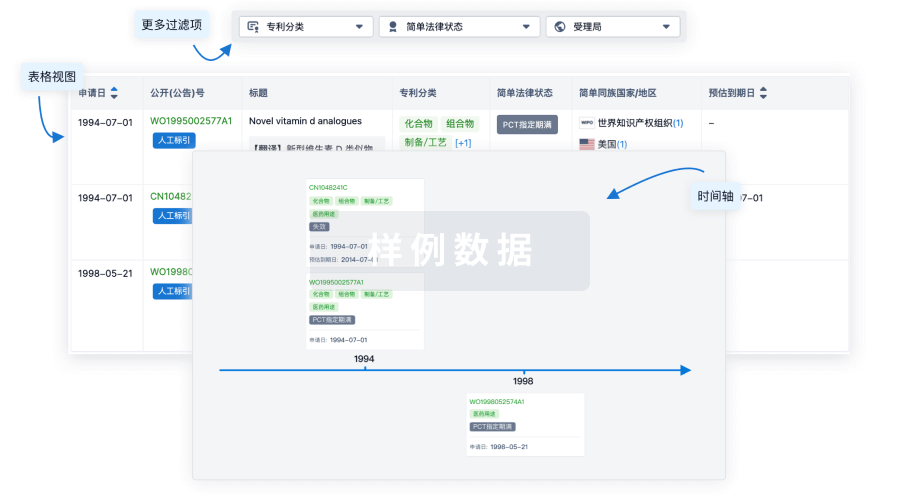

核心专利

使用我们的核心专利数据促进您的研究。

登录

或

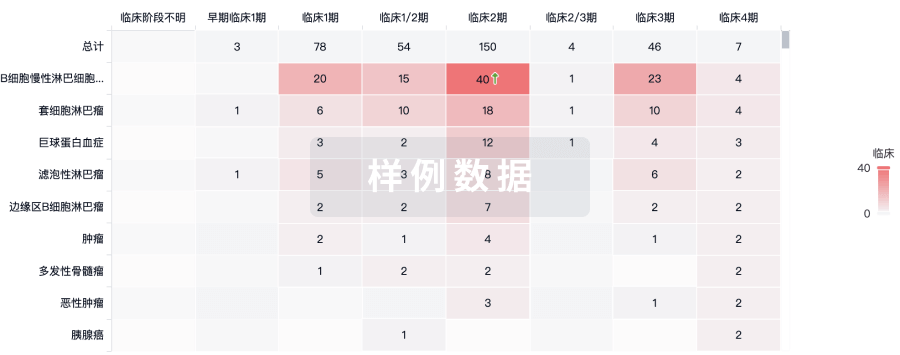

临床分析

紧跟全球注册中心的最新临床试验。

登录

或

批准

利用最新的监管批准信息加速您的研究。

登录

或

特殊审评

只需点击几下即可了解关键药物信息。

登录

或

生物医药百科问答

全新生物医药AI Agent 覆盖科研全链路,让突破性发现快人一步

立即开始免费试用!

智慧芽新药情报库是智慧芽专为生命科学人士构建的基于AI的创新药情报平台,助您全方位提升您的研发与决策效率。

立即开始数据试用!

智慧芽新药库数据也通过智慧芽数据服务平台,以API或者数据包形式对外开放,助您更加充分利用智慧芽新药情报信息。

生物序列数据库

生物药研发创新

免费使用

化学结构数据库

小分子化药研发创新

免费使用