Updated: 2025-09-09

Syngenta AG

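Overview

Disease area score

The organization's disease areas of focus at a glance

No data

Technology platform

Technologies most used in the company's drugs

No data

Targets

Targets most frequently developed by the company

No data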

| Disease area | Count |
|---|---|
| Oncology | 2 |

| Top five drug types | Count |
|---|---|
| Small molecule drug | 2 |

| Top five targets | Count |
|---|---|
| CTLA4 (cytotoxic T-lymphocyte-associated antigen 4) | 1 |
| ARFGEF (ARFGEF family) | 1 |

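Related

2 drugs related to Syngenta AG

| Field | Value |
|---|---|
| Target | |
| Mechanism of action | ARFGEF inhibitor |
| Active organization | |
| Originator organization | |
| Active indication | |
| Inactive indication | - |
| Highest development phase | Preclinical |
| First approval country/region | - |
| First approval date | - |

| Field | Value |
|---|---|
| Target | |
| Mechanism of action | CTLA4 inhibitor |
| Active indication | |
| Inactive indication | - |
| Highest development phase | Preclinical |
| First approval country/region | - |
| First approval date | - |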

1 clinical trial related to Syngenta AG

NCT02137317

Prevention of Farmers' Exposure to Pesticides With Relevant Personal Protective Equipment in Chitwan District of Nepal

According to the World Health Organization pesticide poisoning is a major health problem due to the millions of cases annually occurring worldwide. Farmers have a particularly high risk of pesticide poisoning because of their work involving pesticide use to protect crops. The majority of pesticide poisonings occur in developing countries. On a short term it is not realistic to reduce farmers' use of pesticides significantly because it would require that secure and cost-effective alternatives are introduced. This is a lengthy process, which should undoubtedly be supported. However, it becomes as important to make sure that farmers can protect themselves from pesticide exposure meanwhile. Use of personal protective equipment can minimize pesticide exposure on farmers' bodies and consequently reduce their risk of pesticide poisoning. However, the sparse research identified through a systematic literature review shows that we are not in a position to give recommendations on what personal protective equipment farmers should protect themselves with against pesticide exposure suitable to their specific conditions. The purpose of the present study is to examine factors that influence farmers' use of personal protective equipment during their work with organophosphates and, based on this examine the ability of locally adapted personal protective equipment to reduce their organophosphate exposure. The hypothesis is that farmers working in locally adapted personal protective equipment have less acute organophosphate poisoning symptoms, a higher plasma cholinesterase level and find it to be a more feasible solution than farmers working in their daily practice wearing. Examining how locally adapted personal protective equipment (onwards referred to as the LAPPE solution) performs in practice implies testing it in an intervention study. 
A randomized crossover experiment design is chosen partly because fewer farmers have to be recruited since each farmer will act as his own control and partly because the between farmer variation is strongly reduced. The performance of the LAPPE solution will be tested in one experiment and compared to the performance of the same farmers' daily practice wearing (onwards referred to as the DP solution) in another experiment. The LAPPE solution is expected to have a superior performance. The participation sequence (LAPPE/DP or DP/LAPPE) will be randomized. The study will be conducted among farmers in Chitwan, Nepal.
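The sequence randomization described above can be illustrated with a minimal sketch. It assumes a balanced allocation (half of the farmers start with LAPPE, half with DP), which the trial record does not specify; the function names, farmer identifiers, and seed are hypothetical and not taken from the study protocol.

```python
import random


def assign_sequences(farmer_ids, seed=0):
    """Randomly assign each farmer to a participation sequence.

    Assumption: allocation is balanced, so half start with LAPPE and
    the rest start with DP (with an odd count, DP-first gets one more).
    """
    rng = random.Random(seed)  # fixed seed only to make the sketch reproducible
    ids = list(farmer_ids)
    rng.shuffle(ids)
    half = len(ids) // 2
    assignment = {}
    for position, farmer_id in enumerate(ids):
        if position < half:
            assignment[farmer_id] = ("LAPPE", "DP")
        else:
            assignment[farmer_id] = ("DP", "LAPPE")
    return assignment


def within_farmer_differences(measurements):
    """Return LAPPE minus DP for each farmer.

    Each farmer acts as his own control, so the comparison is made
    within a farmer rather than between farmers.
    """
    return [m["LAPPE"] - m["DP"] for m in measurements.values()]


if __name__ == "__main__":
    for farmer_id, sequence in sorted(assign_sequences(range(1, 7)).items()):
        print(farmer_id, "/".join(sequence))
```

Because every farmer contributes one measurement under each condition, the analysis works on the paired differences, which is why fewer participants are needed than in a parallel-group design.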

Start date: 2014-08-01

Sponsor/collaborators: Bispebjerg Hospital [+7]

1,685 publications (pharmaceutical) related to Syngenta AG

2025-09-01·Plant Genome

New genomic resources to boost research in reproductive biology to enable cost-effective hybrid seed production.

Article

Authors: Lage, Jacob ; Bansept-Basler, Pauline ; Schnurbusch, Thorsten ; Cavanagh, Colin ; Olson, Paul D ; Whitford, Ryan ; Rohde, Antje ; Uauy, Cristóbal ; Millán-Blánquez, Marina ; Röhrig, Laura ; Frohberg, Claus ; Maeder, Leah ; Boden, Scott A ; Boeven, Philipp H G ; Dixon, Laura E ; Griffe, Lucie ; Albertsen, Marc C

The commercial realization of hybrid wheat (Triticum aestivum L.) is a major technological challenge to sustainably increase food production for our growing population in a changing climate. Despite recent advances in cytoplasmic- and nuclear-based pollination control systems, the inefficient outcrossing of wheat's autogamous florets remains a barrier to hybrid seed production. There is a pressing need to investigate wheat floral biology and enhance the likelihood of ovaries being fertilized by airborne pollen so breeders can select and utilize male and female parents for resilient, scalable, and cost-effective hybrid seed production. Advances in understanding the wheat genomes and pangenome will aid research into the underlying floral organ development and fertility with the aim to stabilize pollination and fertilization under a changing climate. The purpose of this position paper is to highlight priority areas of research to support hybrid wheat development, including (1) structural aspects of florets that affect stigma presentation, longevity, and receptivity to airborne pollen, (2) pollen release dynamics (e.g., anther extrusion and dehiscence), and (3) the effect of heat, drought, irradiation, and humidity on these reproductive traits. A combined approach of increased understanding built on the genomic resources and advanced trait evaluation will deliver to robust measures for key floral characteristics, such that diverse germplasm can be fully exploited to realize the yield improvements and yield stability offered by hybrids.

2025-08-08·JOURNAL OF ORGANIC CHEMISTRY

Synthesis of 3-Substituted 5,5-Dimethyl Isoxazolines via Unusual Reactivity of Chlorooximes and Di-tert-butyl Dicarbonate (Boc2O) or tert-Butyl Acetate (t-BuOAc)

Article

Authors: Phadte, Mangala ; Pal, Sitaram ; Mandadapu, Raghuramaiah ; Wagh, Pramod S. ; Ghorai, Sujit K. ; Hapse, Venunath M.

A convenient method for the synthesis of 3-substituted 5,5-dimethyl isoxazolines from diversely functionalized chlorooximes and di-tert-butyl dicarbonate (Boc2O) has been demonstrated. The reaction proceeds via [3 + 2] cycloaddition of isobutene, generated in situ from Boc2O and nitrile oxides, formed from chlorooximes. tert-Butyl acetate (tert-BuOAc) also proved to be an efficient alternative source of isobutene, providing a comparable yield of desired isoxazolines under similar conditions. It has also been demonstrated that the in situ preparation of chlorooximes from corresponding aldoximes using N-chlorosuccinimide (NCS) and their direct utilization for one-pot synthesis of 3-substituted 5,5-dimethyl isoxazolines can be successfully achieved. This process occurs without the need for metal catalysts or bases. The method features several advantages, including the use of readily accessible reagents, broad substrate scope, high yields, and reliability on a gram scale, making it a valuable approach for the synthesis of 3-substituted 5,5-dimethyl isoxazolines.

2025-07-01·JOURNAL OF HAZARDOUS MATERIALS

Glyphosate and urea co-exposure: Impacts on soil nitrogen cycling

Article

Authors: Li, Bingxue ; Liu, Xueke ; Guo, Qiqi ; Wang, Yujue ; Li, Pengxi ; Zheng, Li ; Zhao, Fanrong ; Gu, Yucheng ; Zhai, Wangjing ; Wang, Peng ; Liu, Donghui

Glyphosate, the most widely utilized herbicide, frequently coexists with nitrogen fertilizers such as urea in soil environments. Nitrogen cycling is a key process for maintaining soil ecological functions and nutrient balance. However, the effects of co-exposure to glyphosate and urea on this process have remained unclear. This study investigated the impact of co-exposure to glyphosate (10 mg/kg) and urea (260.87 or 347.83 mg/kg, equivalent to 180 or 240 kg N/ha) on soil nitrogen cycling through a 98-day incubation experiment. Soil nutrients, enzyme activities, bacterial community structure, and functional genes were analyzed. NH4+-N and NO3--N contents significantly decreased by 44.70-53.43 % and 36.74-49.12 %, respectively. Co-exposure reduced bacterial diversity and altered nitrogen cycling genes, decreasing nifH while increasing amoA and nosZ, indicating reduced nitrogen input potential and increased inorganic nitrogen loss. Enzyme analysis confirmed excessive activation of nitrification and denitrification, lowering nitrogen availability. Partial least squares structural equation modeling (PLS-SEM) showed co-exposure indirectly decreased NH4+-N and NO3--N via enhanced nitrate and nitrite reductase activities. The study highlights the complex interactions between herbicides and fertilizers in soil environments and underscores the need for further research to understand the implications for wider soil health and crop production in agriculture systems.
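The abstract does not say how the field rates (180 or 240 kg N/ha) were converted into soil concentrations of urea. The sketch below is one possible reading, not the authors' stated method: it assumes urea contains about 46% nitrogen by mass and that one hectare of treated soil weighs about 1.5 million kg (for example a 10 cm layer at a bulk density of 1.5 g/cm³). Both constants are assumptions introduced here; with them the arithmetic reproduces the reported 260.87 and 347.83 mg/kg.

```python
# Assumption (not stated in the abstract): urea is ~46% nitrogen by mass.
UREA_N_FRACTION = 0.46
# Assumption (not stated in the abstract): soil mass per hectare, e.g.
# 10,000 m^2 x 0.10 m depth x 1,500 kg/m^3 = 1.5e6 kg.
SOIL_MASS_KG_PER_HA = 1.5e6


def urea_mg_per_kg_soil(n_rate_kg_per_ha,
                        n_fraction=UREA_N_FRACTION,
                        soil_mass_kg_per_ha=SOIL_MASS_KG_PER_HA):
    """Convert a nitrogen field rate (kg N/ha) to mg urea per kg soil."""
    urea_kg_per_ha = n_rate_kg_per_ha / n_fraction
    # 1 kg = 1e6 mg, then divide by the soil mass receiving the dose.
    return urea_kg_per_ha * 1e6 / soil_mass_kg_per_ha


if __name__ == "__main__":
    for rate in (180, 240):
        print(rate, "kg N/ha ->", round(urea_mg_per_kg_soil(rate), 2), "mg urea/kg soil")
```

If the study used a different incubation depth or bulk density, the soil mass constant would change accordingly, so the match should be read as a plausibility check only.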

52 news items (pharmaceutical) related to Syngenta AG

2025-08-08

Basel is building a biotech ecosystem that builds on its Big Pharma natives.

When you think of biotech hubs, the first image that comes to mind may be the sleek waterfront offices of Boston Seaport or the scenic hills of San Francisco’s Bay Area. But a small city in Switzerland has been quietly raising its game in recent years.

Basel is already firmly established on the drug development map because of its unique position as a major European city that hosts two of the world’s biggest pharmas, Roche and Novartis. But under their large shadows, a bustling ecosystem of biotechs has been steadily growing.

In fact, around 800 life science companies now have a presence in the city, according to Christof Klöpper, Ph.D., CEO of Basel Area Business & Innovation (BABI), which promotes investment in the area. Those companies include CDMO giant Lonza, as well as U.S.-Chinese biopharma BeOne Medicines (the new name for BeiGene), which inaugurated its European headquarters in the city in 2022.

The University of Basel, which is known for its life sciences research, has helped secure the city’s regional reputation for medicines development that has, in turn, drawn more companies to the area. This has helped create a “small city that’s totally focused on life sciences,” Klöpper told Fierce Biotech in an interview.

“If you go to a bar, the chances are quite high that the person next to you is a life sciences person,” he said. Other factors that have helped draw companies from Europe and further afield are attractive tax incentives by the science-sympathetic regional government and the fact that “it’s an attractive place to live and to do business,” Klöpper explained.

Where once a company like Johnson & Johnson would have swooped in to acquire an appetizing Basel-based biotech, now the Big Pharmas are more likely to “stay here and set up research divisions,” Klöpper explained.

In fact, J&J did just that, setting up offices at the Basel Innovation Hub, a new site on the outskirts of the city. The hub’s headquarters were inaugurated in 2022 and, since then, the likes of antibody-drug conjugate-focused Alentis Therapeutics, Versant Ventures-backed Bright Peak Therapeutics, and the Swiss Tropical and Public Health Institute have all moved in. When Fierce met Klöpper inside the headquarters’ wood-paneled modern offices, it was clear that the hub district is still very much in development, with two large empty lots expected to be filled up by further new buildings by 2029.

He described the transformation of Basel from primarily hosting large pharma and chemical companies like Roche, Novartis and Sinochem-owned Syngenta into a home for hundreds of biotechs as a “corporate cluster that turned into an ecosystem,” much like Boston did over the past few decades. The key to this ecosystem’s success is its employees. While they might start off being enticed to Basel by a job offer at a Big Pharma, the high quality of living means they are likely to stay and find their next role at another life sciences company in the city.

This is where Basel’s “strategic location” comes in, according to Klöpper. Tucked into a corner of north Switzerland that borders both France and Germany, biopharmas can have their pick of talent spread across three countries. In fact, Fierce spoke to one of several Roche employees who commute to work via bike each morning from a nearby village in Germany’s Black Forest.

“If an American company comes to Europe, it’s quite complex to tackle the European market,” Klöpper explained. “Basel has quite a lot to offer to live with this complexity, because it’s easy to hire people on the German or the French side.” The city’s excellent transport links mean it’s also common for biotech staffers to take the one-hour train into work from Zurich, another hot spot for Swiss science.

Maximilien Murone, Ph.D., a liaison officer at the Swiss Biotech Association, is keen to put Basel’s blossoming biotech role in the context of this wider national framework.

“I think what makes Switzerland more of a [biotech] hub as a nation is the fact that the country is quite small and everything is quite close,” Murone told Fierce. “So it doesn’t take much time to go from one location in Zurich to Basel [or] Basel to west Switzerland.”

When it comes to securing money, biotechs have the option of regional government funds, as well as the Swiss Innovation Agency, which supports science-based startups with their R&D work. Murone also described Deep Tech Nation Switzerland, a non-profit set up to finance innovation, as “super important” for the national biotech funding landscape.

In 2024, Swiss biotechs raised more than 2.5 billion Swiss francs ($3 billion), a 22% increase on the previous year, according to the association’s most recent report. A total of 800 million Swiss francs ($985 million) of this was raised by privately owned biotechs, led by Alentis’ 163 million franc ($200 million) series D and SixPeaks Bio’s $110 million from a combination of a series A round and a deal with AstraZeneca. Both Alentis and SixPeaks are based in Basel.

The city has a “long history” in the life sciences, Murone explained. “Centuries ago, they were producing pigments to dye clothes and other things,” he said. “And then from there they moved, slowly but surely, to developing medicines.”

A key pillar in this history is Roche. The company was founded in Basel in 1896 by Swiss businessman Fritz Hoffmann, whose experience of a devastating cholera outbreak in Germany led him to create one of the first companies specifically set up to manufacture scientifically researched pharmaceuticals.

Roche may now have a $260 billion market cap and employees spread across over 150 countries, but the company’s heart remains in Basel. In fact, the twin towers of Roche’s headquarters on the banks of the river Rhine are the tallest buildings in the city, dominating the skyline for miles around. Speaking to Fierce in the company’s recently opened $1.4 billion research and development center within the Basel headquarters, Roche Pharma CEO Teresa Graham explained why it’s easy to entice international talent to move over.

“It’s an underrated city,” she said. “If people aren’t familiar with Basel or Switzerland … we always encourage them to come. We say, ‘Bring your family, stay for a couple days.’ And pretty much everybody is sold by the end of it.”

Graham highlighted “really fantastic” international schools for children, “great communities for spouses,” an “amazing amount of green space” and hiking options in the nearby countryside as some of the key selling points.

One of Roche’s legacies for the area is the various biotechs that can trace their roots back to the Big Pharma. One of these is Basilea Pharmaceutica, an anti-infection company that spun off from Roche in 2000.

The biopharma remained in Roche’s shadow, literally, for 20 years before upping sticks and moving its offices to the Basel Innovation Hub when the site opened in 2022.

In the boardroom of Basilea’s headquarters, CEO David Veitch told Fierce that, over time, Basel has become a “real R&D hub.”

“Like attracts like,” Veitch explained. “So we came here, J&J came on the floor above us shortly afterwards, and then lots of smaller startups.”

For Basilea, which now employs around 170 people, the city’s livability combined with the local talent pool means “we never struggle with recruitment,” the CEO said. “You could put a LinkedIn advert up and you get 300 people per role.”

In fact, the density of life science companies around the city means the recruitment options “extend beyond just research and manufacturing” to “ancillary or supportive functions” like experienced biotech auditors and financial experts, Basilea’s Chief Financial Officer Adesh Kaul pointed out. When asked what’s next for the Basel biotech scene, Veitch pointed to the construction work surrounding the company’s offices at the Innovation Hub.

“It’s a building site, and there’s plans to keep on building,” he said. “It’s got a way[s] to go to become a Silicon Valley or Boston,” he added. “But I think it’s certainly a concentration area in Europe, and it’s only going to grow.”

BABI CEO Klöpper cited Boston as having already gone on a similar trajectory of moving from an R&D hub to a self-supporting biotech ecosystem. Perhaps it’s time for Basel to shout about its own success more if it wants to raise its profile to match its U.S. peers?

“Communication-wise, I think we should be a bit more pushy,” Klöpper said. “It’s not in the nature of the Swiss to advertise too strongly.”

2025-08-01

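The following article is from 科创汇投, the WeChat public account of Anhui Science and Technology Innovation Investment Co., Ltd. (安徽省科创投资有限公司) / Anhui Guokong Investment Co., Ltd. (安徽国控投资有限公司). Author: Zhang Da (张达).

This year's Government Work Report explicitly proposes to "establish a mechanism for increasing investment in future industries and foster future industries such as biomanufacturing, quantum technology, embodied intelligence, and 6G." Biomanufacturing is listed first among these future industries, which shows how much weight it carries. The global synthetic biology industry is in a period of accelerating growth, and its value chain is tightly linked: the upstream focuses on developing foundational enabling technologies, the midstream provides enabling technology platforms, and the downstream delivers innovative application products to vertical industries. In recent years, policy support for the synthetic biology industry has kept increasing, and all major provinces are stepping up the build-out of synthetic biology industry clusters. Innovative R&D at universities and research institutes is also emerging in many places at once. The combination of science and industry has drawn the attention of international and domestic capital to the synthetic biology sector, and this capital is steadily accelerating the move of technology from the laboratory to the market, giving the related Chinese industries an opportunity for leapfrog upgrading. Anhui Province has explicitly designated synthetic biology as a future industry for priority development and has set up a biomanufacturing future-industry pilot zone. This report, one of a series of studies, examines the overall state of the synthetic biology industry.

I. Overview of synthetic biology

Synthetic biology is an interdisciplinary field that brings together biology, genomics, engineering, informatics, and other disciplines. Applying engineering design principles, it designs, modifies, or even re-synthesizes organisms at the genetic level with a defined goal, building customized cells based on artificial gene circuits to enable large-scale production and application of target compounds, drugs, or functional materials. Synthetic biology has been hailed as the third revolution in the life sciences, after the "DNA double helix" and "genomic technology." It is driving a shift in material supply from extraction from nature to synthetic biomanufacturing, and it provides a core driving force for next-generation biomanufacturing and the leapfrog development of the future bioeconomy.

In 1980, Barbara Hobom began using the term "synthetic biology" to describe recombinant gene technology. By the end of the 20th century, the steady maturation of gene synthesis, gene sequencing, and related technologies had laid a substantial and comprehensive material foundation for the field. Synthetic biology has developed rapidly since the start of the 21st century. In 2004, Technology Review, published by MIT in the United States, selected synthetic biology as one of ten technologies that would change the world. In 2013, the international consulting firm McKinsey rated synthetic biology as a disruptive technology capable of bringing revolutionary change to human life and the global economy.

Building on universities and research institutes, synthetic biology research in China has formed research regions with distinct characteristics in Beijing, Shanghai, Tianjin, Shenzhen, and elsewhere. In recent years, R&D on the foundational and key core technologies of synthetic biology has continued to achieve breakthroughs. The speed of new product development, the low-carbon and environmental performance of process technology, and the level of scale-up have all improved substantially. Emerging industries represented by biopharmaceuticals, bio-based materials, and bio-based chemicals have risen quickly, driving rapid development of the synthetic biology value chain.

The move of synthetic biology from its early stage of "building to understand" (theoretical foundations) to the current stage of "building to apply" (application demonstration) reflects the usual path of scientific development from technical theory to industrial application. According to McKinsey, biomanufactured products can cover 60% of chemically manufactured products, and the field continues to expand its boundaries and grow rapidly; it will have a major impact on pharmaceuticals, chemicals, energy, environmental protection, food, agriculture, and other industries. The economic impact of synthetic biology and biomanufacturing is expected to reach US$100 billion by 2025. According to research by the US Bureau of Economic Analysis, the industries that synthetic biology may affect account for 21% of US GDP (about US$25.4 trillion).

Figure 1. Industries that synthetic biology may affect. Source: US Bureau of Economic Analysis

II. Analysis of the synthetic biology value chain

The synthetic biology industry ecosystem is very broad, and its diverse technologies and industries already have considerable market size. The industry is classified here by upstream, midstream, and downstream segments of the value chain.

Figure 2. Overview of the synthetic biology value chain. Source: China Synthetic Biology Industry White Paper 2024 (《中国合成生物学产业白皮书2024》), Anhui Science and Technology Innovation Investment

The upstream consists of enabling technologies, organized mainly around the design-build-test-learn (DBTL) cycle. It includes DNA parts, DNA synthesis, sequencing and omics, databases and AI/machine learning, and microfluidic, high-throughput, and automation equipment, with a focus on disruptive foundational technology and on improving efficiency and reducing cost. China is catching up quickly in the design and build steps: in DNA synthesis, gene editing, and DNA sequencing it is already on par with overseas players, with representative domestic companies including BGI Genomics (华大基因), GenScript (金斯瑞), and Zhonghe Gene (中合基因). In the test and learn steps, however, a large gap with other countries remains, and the sector is still at an early stage of development.

The midstream consists of technology platforms for designing and developing biological systems and organisms. The core technology is pathway development, including service platforms for strain identification and screening, strain construction, and gene editing, as well as pilot-scale process platforms. The emphasis is on the choice of technical route and on whether the route or platform is efficient and feasible, and the segment potentially has CRO characteristics. Leading overseas companies have established mature business models on both in vivo and in vitro engineering platforms, and "all-rounders" such as Ginkgo Bioworks have emerged. A representative domestic company is Bota Bio (恩和生物), which uses a high-throughput wet-lab platform (bio-foundry), combined with bioinformatic computation and machine learning, to build a technology platform running from biological design to pilot production, and provides technical solutions for personal care, pharmaceuticals, agriculture, food and nutrition, and other fields.

The downstream is where application products reach the market. It covers segments such as biopharmaceuticals, agriculture, food and nutrition, consumer personal care, chemical materials, and energy, and the core capability is cost control in large-scale production and commercial scale-up. China's synthetic biology sector now has the basic conditions for industrialization, and industry cultivation has made notable progress and reached initial scale. On the downstream product side, a group of representative synthetic biology companies has emerged, such as BRL Medicine (邦耀生物), Kingdomway (金达威), Cathay Biotech (凯赛生物), Huaheng Biotech (华恒生物), and PhaBuilder (微构工场).

III. Overview of key areas in the synthetic biology industry

(1) Key areas of the industry

Benefiting from rapid progress in gene sequencing, synthesis, and gene editing, the synthetic biology industry has gradually formed an R&D model centered on the design-build-test-learn (DBTL) cycle, a midstream dominated by host design, genetic route optimization, and pilot platform services, and a downstream scale-up production model led by fermentation, biocatalysis, and purification processes.

Upstream enabling technologies are numerous, and each company usually focuses on one technical area, such as DNA sequencing, DNA synthesis, gene editing tools, or microfluidic/high-throughput equipment. Domestic midstream platform companies are still at an early stage in their business models; the segment is served mainly by upstream companies extending into midstream business, and the market is relatively small. For reasons including the protection of intellectual property and trade secrets, leading domestic companies have built an R&D and industrialization model that relies on upstream enabling technologies and spans the full mid-to-downstream chain of strain design and construction, pilot production, and scale-up. Given the current maturity of upstream and midstream technologies, the state of their business models, and the growth in downstream product applications, product companies at the downstream application level remain the core of the value chain. The segments to watch are biopharmaceuticals, chemical materials, food and nutritional health, consumer personal care, and agricultural technology.

(2) Overview of industry segments

1. Biopharmaceuticals

Biopharmaceuticals are currently one of the most widespread application areas for synthetic biology. Applications mainly include green biosynthesis of drugs and their intermediates, and innovative therapies represented by novel antibodies and cell and gene therapy (CGT).

Production of chemical drugs, natural products, and their intermediates is moving from traditional chemical synthesis and biological extraction toward more efficient, lower-carbon enzymatic processes and cell factories. Synthetic biology is used to design new biochemical reactions and metabolic pathways so that drugs and their intermediates can be biosynthesized in a low-carbon, environmentally friendly way. In recent years, synthetic biology has shown the potential to replace traditional chemical synthesis or traditional natural product extraction for products such as sitagliptin, citicoline sodium, salidroside, paclitaxel, cannabinoids, novel antibiotics, and hormones. The approval of China's first generic sitagliptin made with synthetic biology technology, a collaboration between Abiochem (弈柯莱生物) and Tonghua Dongbao (通化东宝), became a landmark for replacing chemical drug processes with biosynthesis in China. The main products of Chuanning Biotech (川宁生物), including erythromycin thiocyanate, penicillin intermediates, and cephalosporin intermediates, are all produced by biological fermentation.

With advances in frontier technologies such as gene editing, vector delivery, stem cells, and RNA design and synthesis, synthetic biology is helping innovative drugs, represented by antibody drugs and cell and gene therapy (CGT), to keep emerging, offering more effective prevention and treatment options for solid tumors, autoimmune diseases, inherited genetic disorders, vaccines, and other areas. China's home-grown innovative drug companies are also being courted by multinationals in the capital markets, and in recent years they have achieved historic breakthroughs through license-out deals. In May 2025, 3SBio (三生制药) licensed the ex-China rights to its PD-1/VEGF bispecific antibody SSGJ-707 to Pfizer, setting a record with an upfront payment of US$1.25 billion (the final agreement that took effect on July 24 added a further US$150 million upfront to include the mainland China commercialization rights), US$4.8 billion in milestone payments, and a double-digit percentage royalty on sales. BRL Medicine (邦耀生物) builds innovative hematopoietic stem cell and CAR-T therapies on its gene editing technology platform. Its β-thalassemia therapy BRL-101 has entered a pivotal Phase II clinical study and could become the first thalassemia CGT product marketed in China. Its universal CAR-T therapy for autoimmune diseases, BRL-303, was the first internationally reported breakthrough for allogeneic universal CAR-T in treating autoimmune disease and was named one of China's "Top Ten Scientific Advances" of 2024. Immorna (嘉晨西海) focuses on developing self-replicating srRNA and conventional RNA therapeutics and vaccines, uses an in vitro transcription process for fast, low-cost, large-scale mRNA production, and has several products in clinical development, with a pipeline covering cancer immunotherapy and infectious disease vaccines.

2. Chemical materials

Compared with traditional chemical production, bio-based chemical production with synthetic biology at its core features renewable feedstocks, clean and efficient processes, and low carbon emissions. It fundamentally changes a production model in chemical materials and other traditional manufacturing that depends heavily on fossil feedstocks and is highly polluting and emission-intensive. Applications of synthetic biology in chemical materials mainly cover bulk chemicals and bio-based materials. Synthetic biology companies are currently working to cut scale-up production costs and expand applications for products such as pentanediamine, hexanediamine, succinic acid, adipic acid, polyhydroxyalkanoates (PHA), polylactic acid (PLA), polybutylene succinate (PBS), and furandicarboxylic acid (FDCA). The market is expected to open up and take further share from traditional chemicals.

In bulk chemicals, those that can be made by synthetic biology are mainly organic acids, organic alcohols, and amino acids and their derivatives. They account for a limited share of basic chemicals overall, leaving substantial room for growth. Because the chemical industry has a strong need to cut costs, attention is on the cost and low-carbon advantages of synthetic biology, which has already fully replaced traditional chemical routes in some niches. DuPont's production of 1,3-propanediol by fermentation, Huaheng Biotech's (华恒生物) production of L-alanine by anaerobic fermentation, and Cathay Biotech's (凯赛生物) biosynthesis of long-chain dicarboxylic acids all won market share quickly on cost.

In bio-based materials, the focus is on substitution and new uses for novel bio-based materials in specific applications, and on low-carbon, low-cost biosynthesis of intermediates and monomers for traditional chemical polymers. Synthetic biology has commercial potential in high-performance materials across many applications, but most of these are still in early R&D. Novel bio-based polymers, represented by PHA, can be synthesized through natural microbial metabolic pathways; they behave like thermoplastics while also being environmentally friendly and biodegradable. Although some PHA types already show material properties comparable to today's mainstream petroleum-based polymers, the relatively high production cost of PHA has held back commercialization. Representative PHA developers are Bluepha (蓝晶微生物) and PhaBuilder (微构工场); PhaBuilder is working with the listed company Hengxin Life (恒鑫生活) to develop PHA-coated paper products for consumer packaging. Fibrous protein materials, represented by recombinant spider silk protein, have strong mechanical properties or biocompatibility and hold promise in aerospace, specialty apparel, and medical materials, but commercialization is still at an early stage and both technology and cost need further breakthroughs.

Products for which synthetic biology has successfully replaced traditional manufacturing with sales of more than 1 billion yuan are still very few, and there has been no large-scale substitution of traditional chemical products. As synthetic biology technology advances and industrializes, it is expected to break through with more large-volume products in the chemical sector.

3. Food and nutritional health

Food and nutritional health is one of the broadest application areas for synthetic biology and may become the fastest-growing one. It involves many product types; key products include vitamins, amino acids, porphyrins, whey protein, natural pigments, human milk oligosaccharides (HMOs), novel sweeteners, and novel food and nutrition additives.

One direction is using synthetic biology to replace traditional production methods. DSM, a global leader in food and nutrition ingredients, has successfully moved from chemical synthesis to biosynthesis, and more than 50% of its food and nutrition ingredient revenue comes from bio-based products. The domestic listed company CABIO (嘉必优) has achieved a breakthrough in producing arachidonic acid (ARA) and docosahexaenoic acid (DHA) by microbial fermentation, replacing the traditional route of obtaining them from animal liver and fish oil.

The other direction is alternative protein, meaning protein obtained from non-animal sources such as microbial fermentation and cell culture. Market acceptance is currently low and product costs are high, so the segment is at the start of commercialization. The domestic company Changing Bio (昌进生物) uses synthetic biology to produce whey protein that can be used widely in dairy drinks, cream, cheese, snacks, and other end markets. Because domestic approval is not yet open, the company is currently targeting overseas markets.

China takes a conservative stance on novel additives and nutrients produced with synthetic biology; the application process is cumbersome and costly for companies. In addition, strains used to produce food additives must be on the national list of approved strains. The strict regulatory system provides products with a potential market and entry protection on the one hand, and adds uncertainty to new product development on the other. Development of new products with synthetic biology in food and nutritional health is active, but many new varieties under development are still moving from R&D to commercialization and are actively seeking regulatory breakthroughs and approval. Their market potential remains to be cultivated. Examples include wider use of NMN in food and health products and novel sweeteners such as the sweet protein brazzein.

4. Consumer personal care

In consumer personal care, the main approach is to develop biomanufacturing routes for high-value products that are both economical and scalable. Engineered microorganisms or biocatalysis are used to produce fragrances, moisturizers, and active ingredients for skincare. Major products made with synthetic biology, such as hyaluronic acid and collagen, were the first to reach the market, and companies such as Bloomage Biotech (华熙生物), Giant Biogene (巨子生物), and Jinbo Bio (锦波生物) have driven rapid growth in China's functional skincare market.

From the development of bioactive ingredients represented by hyaluronic acid and collagen to the use of new components such as squalene and ectoine, consumer personal care involves many product types and a very large potential market. Biosynthetic pathways have now been established for squalene, retinol, collagen, ergothioneine, NMN, coenzyme Q10, ectoine, arbutin, niacinamide, and other products, and production costs are expected to fall further. Compared with food and nutritional health, regulation in personal care is relatively relaxed, so adoption is expected to accelerate. One target is traditional animal and plant extracts (hyaluronic acid was traditionally extracted from rooster combs, and squalene from blue whale and shark liver) or chemically synthesized products (such as niacinamide and retinol), whose market potential and efficacy are well established. The other is scientifically validated new varieties (such as NMN and ectoine), which attract wide attention for their commercial potential. Together these are the main directions of synthetic biology development in consumer personal care.

5. Agricultural technology

In agricultural technology, synthetic biology focuses on improving and breeding superior animal and plant varieties and on developing environmentally friendly biofertilizers and biopesticides, applied in the segments of biological breeding, plant nutrition, and plant health. Overall, applications in agricultural technology are at an early stage, although some biological breeding products, nitrogen-fixing and other microbial fertilizers, and microbial pesticides have been commercialized.

In biological breeding, synthetic biology can help breed crops that are more stress-tolerant and higher-yielding. Through gene editing, it gives breeders faster and more precise tools, modifying crop genes precisely to confer disease resistance, insect resistance, drought tolerance, salt-alkali tolerance, and other traits. Engineering photoautotrophs is an innovative agricultural application: synthetic biology techniques, including gene editing, are used to redesign plant photosynthetic pathways and improve light-use efficiency. The technology has very large potential value but has not yet been commercialized. Among domestic companies, Syngenta (先正达) and Longping High-Tech (隆平高科) are both developing stress-tolerant crops through molecular breeding and gene editing.

In plant nutrition, synthetic biology engineers microorganisms to synthesize and release the nutrients plants need, yielding environmentally friendly biofertilizers. Engineering and developing nitrogen-fixing microorganisms is one of the current hot spots. Dabeinong (大北农) and Xuankai Biotech (轩凯生物) have both developed synthetic-biology-based microbial fertilizers and soil remediation products.

In plant health, synthetic biology is used to engineer microorganisms, or plants themselves, to produce insect-resistant substances, creating biopesticides that are safer for the environment and for people and reducing the use of chemical pesticides and the resulting environmental damage. Kenuo Bio (科诺生物) improves the insecticidal performance of Bacillus thuringiensis through genetic modification, and Lingxian Bio (领先生物) develops RNA interference (RNAi) biopesticides that target specific pests.

Synthetic biology shows potential, in a lower-carbon and more environmentally friendly way, to reduce fertilizer use, strengthen disease control, and improve crop resistance and growth efficiency. At present, compared with an agricultural technology sector dominated by the chemical industry, its advantages in cost and efficacy are not yet clear. We suggest watching applications in green and organic agriculture of bio-based (slow-release) fertilizers, microbial fertilizers, microbial insecticides, botanical insecticides, and bacteriophage pesticides.

IV. Policy support

National, provincial, and municipal governments pay close attention to and support the development of the synthetic biology industry, using synthetic biology to empower biomanufacturing and promoting efficient, sustainable biomanufacturing through policy, industrial investment, and technological innovation. The National Development and Reform Commission's 14th Five-Year Plan for Bioeconomy Development explicitly calls for vigorous development of biomanufacturing and for breakthroughs in bio-based materials, bioenergy, biopharmaceuticals, and ecological and environmental protection. This year's Government Work Report calls for fostering and growing emerging and future industries, and specifically for fostering future industries such as biomanufacturing. According to incomplete statistics, and excluding Hong Kong, Macao, and Taiwan, 28 provinces, autonomous regions, or municipalities in China have explicitly stated in relevant policies that they support the development of the synthetic biology/biomanufacturing industry.

Anhui Province treats synthetic biology as a future industry and has set up an industry pilot zone. On November 23, 2024, the General Office of the Anhui Provincial Government issued the Anhui Province Action Plan for the Development of Future Industries, which explicitly calls for faster technological breakthroughs and industrialization in synthetic biology and other areas, and provides guidance and measures on company cultivation, industrial layout, application scenarios, and pilot-zone clustering. The 2025 Anhui Provincial Government Work Report calls for faster cultivation of the biopharmaceutical industry, faster technological breakthroughs and industrialization in synthetic biology and other fields, and stronger promotion and application of bio-based materials.

Table 1. Key national and Anhui policies in recent years

V. Summary

Driven by both policy support and technological progress, the synthetic biology industry faces a historic new opportunity, and well-known investment institutions and innovative R&D companies are entering the sector. In our view, product companies at the application level remain the key segment of the industry. Outstanding synthetic biology companies are often "single-event champions" in one field and tend to be "small but beautiful": the market for a single product is usually not large, and a new product's potential to expand into new applications determines its market size and headroom. In screening projects, the companies concerned should pay attention to product selection logic and scale-up capability, and to the market changes brought by technological breakthroughs and new applications. Platform-type investment institutions can use capital as a link to help synthetic biology companies identify application needs, letting downstream demand drive and refine their product selection and applications. We recommend focusing on potential leading innovative R&D companies among downstream product companies, and on their core competitiveness and industrialization capability and the technology and cost advantages that result.

References:

1. Boston Consulting Group, Shanghai Synthetic Biology Innovation Center, and B Capital, China Synthetic Biology Industry White Paper 2024 (《中国合成生物学产业白皮书2024》)
2. China Merchants Bank Research Institute, Biomanufacturing Report Series ①: Grasping Synthetic Biology Trends and Focusing on Upstream and Downstream Breakthroughs in the Value Chain (《生物制造系列报告①把握合成生物发展趋势,聚焦产业链上下游突破》)
3. CITIC Securities, 《生物制造-新质一极,上领下举》
4. CITIC Securities, 《新材料行业合成生物学专题报告一:拥抱合成生物学产业化加速阶段的成长高确定性》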

Tags: overseas expansion of pharma, nucleic acid drugs

2025-04-22

World Health Organization prequalifies Syngenta’s innovative insecticide Sovrenta®

BASEL, Switzerland--(BUSINESS WIRE)--Malaria is one of the world’s most deadly diseases, and it is becoming more pervasive – despite decades of effort and some successes on the path to eradicating it. According to the World Health Organization (WHO), malaria infected 263 million people and killed nearly 600,000 people in 2023 – 75% of whom were children under the age of 5. Of those afflicted, 94% are in Africa – where malaria crushes communities and can cripple economies.

Syngenta launches a next-gen insecticide that takes on the toughest, treatment-resistant mosquitoes. With WHO prequalification, this breakthrough could help save thousands of lives. A global health game changer #WorldMalariaDay #InnovationAgainstMalaria

Spread by parasites in infected mosquitoes that are highly adept at evolving, malaria’s rise reflects the reality of insecticide resistance and the difficulties developing new solutions. The effective control of mosquitoes remains a key strategy for reducing disease transmission.

Syngenta, a global leader in agricultural innovation, today announced that its next-generation insecticide Sovrenta® has received pre-qualification by WHO, paving the way for its use in malaria-afflicted countries. For decades, Syngenta has been at the forefront of the fight against malaria, reflecting its commitment to researching new solutions through in-house R&D efforts and working alongside key partners across sub-Saharan Africa; its products such as Actellic® already help avert as many as 100 million cases of malaria in more than 30 countries.

Sovrenta® works by targeting a mosquito’s nervous system, blocking signals that enable the insect’s muscles to relax. The effect paralyzes the mosquito, so that it eventually dies. The ability of Sovrenta® to provide long-lasting, effective control means just one application is required each season, reducing the cost for malaria prevention programs.

Sovrenta® is based on Syngenta’s cutting-edge PLINAZOLIN® technology that features a new mode of action, ensuring effective control of mosquitoes even where the insect’s populations are increasingly resistant to older insecticides. When used in rotation with other products, Sovrenta® can further ensure that important vector control solutions remain effective for longer.

Andy Bywater, Global Head of Marketing for Vector Control at Syngenta Crop Protection, said: “This marks an important milestone in Syngenta’s quest to bring its most advanced innovations to malaria-endemic countries, and to advancing the health and safety of the millions still at risk.”

Bywater said Sovrenta® is a crucial addition to Syngenta’s vector control portfolio that allows for enhanced resistance-management strategies. That is key in regions where mosquitoes are resistant to older insecticides based on pyrethroids – the most common forms of treatment. “Sovrenta® is the only insecticide recognized to provide year-long protection and gives malaria control programs a powerful tool to safeguard communities,” Bywater said. “We are dedicated to collaborating with partners to ensure Sovrenta® is deployed sustainably and effectively.”

The WHO’s Vector Control Product Pre-Qualification (VCPP) is a rigorous process that ensures the safety, efficacy, and quality of vector-borne disease control products. Its list of prequalified vector control products is used by international procurement agencies and by countries to guide bulk purchasing of these products for distribution in resource-limited countries.

About Syngenta

Syngenta is a global leader in agricultural innovation with a presence in more than 90 countries. Syngenta is focused on developing technologies and farming practices that empower farmers, so they can make the transformation required to feed the world’s population while preserving our planet. Its bold scientific discoveries deliver better benefits for farmers and society on a bigger scale than ever before. Guided by its Sustainability Priorities, Syngenta is developing new technologies and solutions that support farmers to grow healthier plants in healthier soil with a higher yield. Syngenta Crop Protection is headquartered in Basel, Switzerland; Syngenta Seeds is headquartered in the United States. Read our stories and follow us on LinkedIn, Instagram & X.

Syngenta Vector Control plays a leading role in the prevention of vector-borne disease transmission through its portfolio of mosquito control products. Visit our website and follow us on LinkedIn & X.

Cautionary Statement Regarding Forward-Looking Statements

This document may contain forward-looking statements, which can be identified by terminology such as ‘expect’, ‘would’, ‘will’, ‘potential’, ‘plans’, ‘prospects’, ‘estimated’, ‘aiming’, ‘on track’ and similar expressions. Such statements may be subject to risks and uncertainties that could cause the actual results to differ materially from these statements. For Syngenta, such risks and uncertainties include risks relating to legal proceedings, regulatory approvals, new product development, increasing competition, customer credit risk, general economic and market conditions, compliance and remediation, intellectual property rights, implementation of organizational changes, impairment of intangible assets, consumer perceptions of genetically modified crops and organisms or crop protection chemicals, climatic variations, fluctuations in exchange rates and/or commodity prices, single source supply arrangements, political uncertainty, natural disasters, and breaches of data security or other disruptions of information technology. Syngenta assumes no obligation to update forward-looking statements to reflect actual results, changed assumptions or other factors.

©2025 Syngenta. Rosentalstrasse 67, 4058 Basel, Switzerland.

Tags: marketing approval

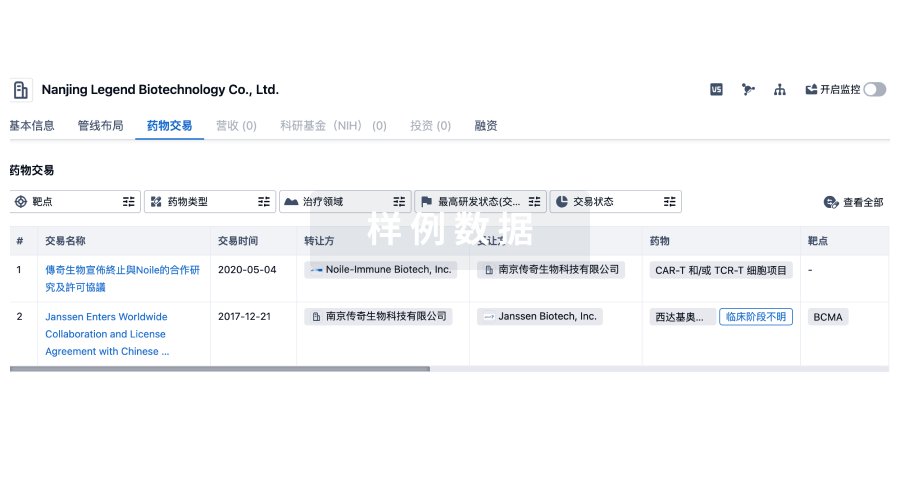

100 项与 Syngenta AG 相关的药物交易

登录后查看更多信息

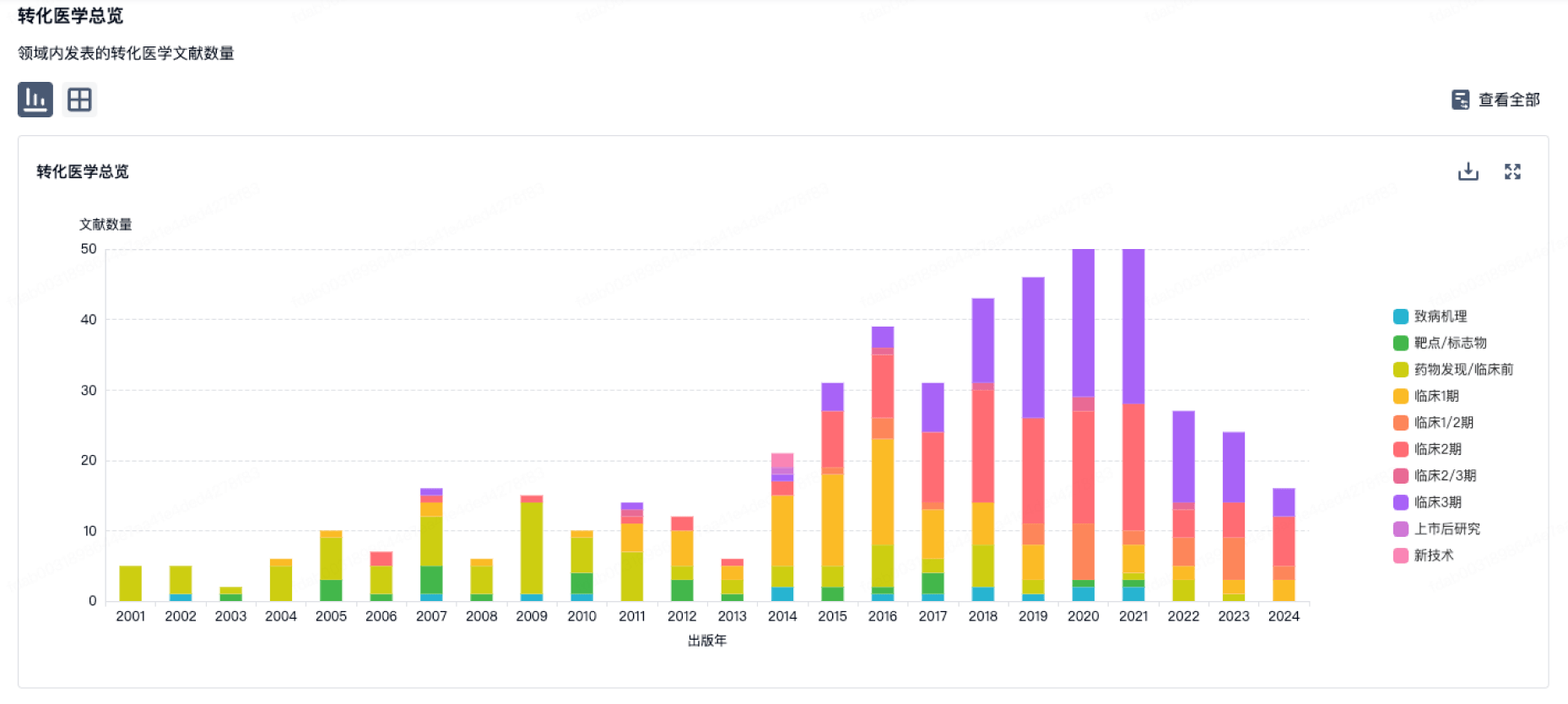

100 项与 Syngenta AG 相关的转化医学

登录后查看更多信息

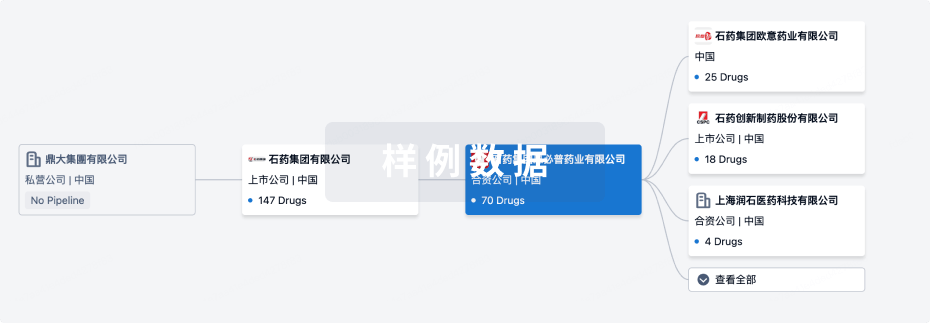

组织架构

使用我们的机构树数据加速您的研究。

登录

或

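Pipeline

Pipeline snapshot as of 2025-09-28

Drugs in the pipeline are counted for the current organization and its subsidiaries acting as the drug organization. Early Phase 1 is merged into Phase 1, Phase 1/2 into Phase 2, and Phase 2/3 into Phase 3.

| Stage | Number of drugs |
|---|---|
| Preclinical | 2 |
| Other | 3 |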
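The phase-merging rule in the pipeline note can be expressed as a small lookup. This is only a sketch of the stated rule: the English phase labels are translations, and the names `PHASE_MERGE`, `normalize_phase`, and `snapshot_counts` are hypothetical and do not come from the database or any API.

```python
from collections import Counter

# Merging rule as stated in the pipeline note (labels are English translations).
PHASE_MERGE = {
    "Early Phase 1": "Phase 1",
    "Phase 1/2": "Phase 2",
    "Phase 2/3": "Phase 3",
}


def normalize_phase(phase):
    """Map a combined or early phase to the phase it is counted under."""
    return PHASE_MERGE.get(phase, phase)


def snapshot_counts(phases):
    """Count drugs per phase after applying the merging rule."""
    return Counter(normalize_phase(p) for p in phases)


if __name__ == "__main__":
    # Hypothetical input, chosen only to exercise each merge.
    example = ["Preclinical", "Early Phase 1", "Phase 1", "Phase 1/2", "Phase 2/3"]
    for phase, count in sorted(snapshot_counts(example).items()):
        print(phase, count)
```

Phases that the rule does not mention, such as Preclinical, pass through unchanged.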

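Current projects

| Drug (target) | Indication | Highest global development status |
|---|---|---|
| CHNQD-01255 (ARFGEF) | Hepatocellular carcinoma | Preclinical |
| PRS-010 (Pieris Pharmaceuticals, Inc.) (CTLA4) | Tumors | Preclinical |
| BBI-2000 | Allergic contact dermatitis | Discontinued |
| Elarekibep (IL-4Rα) | Asthma | Discontinued |
| ROCK inhibitor (Devgen) (ROCK1) | Glaucoma | Discontinued |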

登录后查看更多信息

药物交易

使用我们的药物交易数据加速您的研究。

登录

或

转化医学

使用我们的转化医学数据加速您的研究。

登录

或

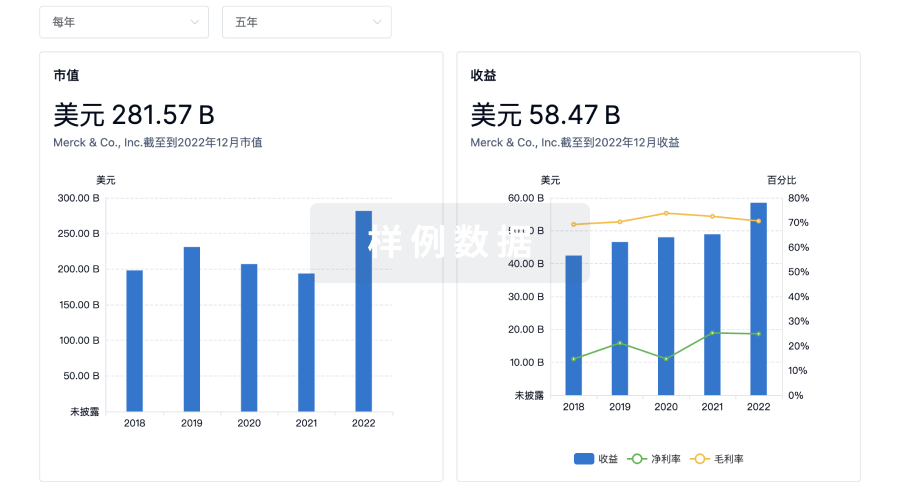

营收

使用 Synapse 探索超过 36 万个组织的财务状况。

登录

或

科研基金(NIH)

访问超过 200 万项资助和基金信息,以提升您的研究之旅。

登录

或

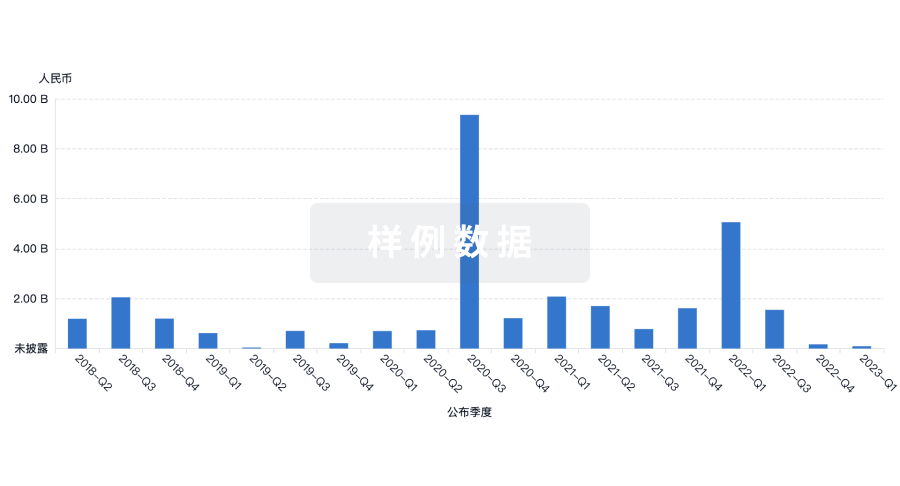

投资

深入了解从初创企业到成熟企业的最新公司投资动态。

登录

或

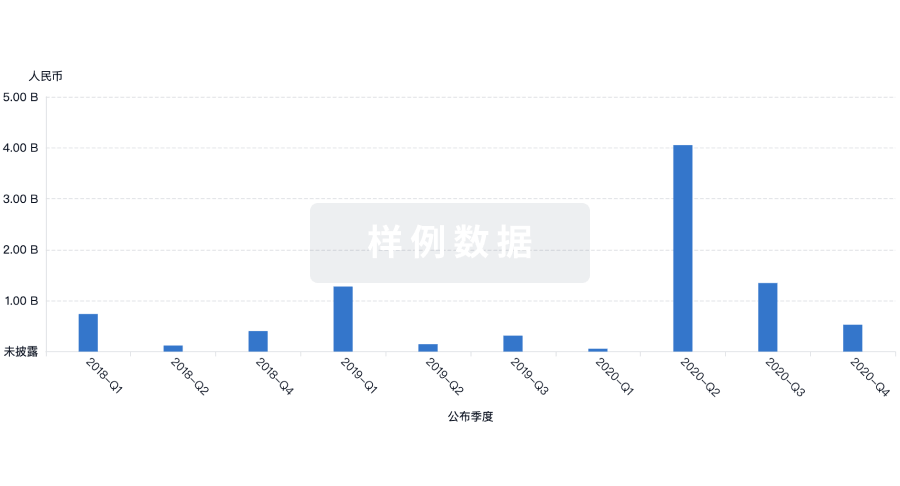

融资

发掘融资趋势以验证和推进您的投资机会。

登录

或

Eureka LS:

全新生物医药AI Agent 覆盖科研全链路,让突破性发现快人一步

立即开始免费试用!

智慧芽新药情报库是智慧芽专为生命科学人士构建的基于AI的创新药情报平台,助您全方位提升您的研发与决策效率。

立即开始数据试用!

智慧芽新药库数据也通过智慧芽数据服务平台,以API或者数据包形式对外开放,助您更加充分利用智慧芽新药情报信息。

生物序列数据库

生物药研发创新

免费使用

化学结构数据库

小分子化药研发创新

免费使用