Updated: 2025-05-07

Karadeniz Technical University

142 clinical trials associated with Karadeniz Technical University

NCT06955962

Examining the Effect of Mandala Art Therapy on Symptoms and Quality of Life in Multiple Sclerosis Patients

This study is a randomized controlled, pre-test-post-test experimental research aimed at examining the effects of mandala art therapy on symptom severity and quality of life in individuals diagnosed with Multiple Sclerosis (MS). The main goal is to determine whether mandala art therapy helps reduce the frequency and severity of MS symptoms while improving participants' overall quality of life.

It is expected that mandala art therapy will support MS patients in managing current and potential symptoms, coping more effectively with the disease, and improving adherence to treatment. As a result, a reduction in healthcare utilization, related costs, MS-related complications, and mortality is anticipated.

The study will be conducted between May and August 2025 at the Neurology Outpatient Clinic of Karadeniz Technical University Practice and Research Center, involving 70 patients: 35 in the intervention group receiving mandala art therapy and 35 in the control group receiving standard care.

Start date: 2025-05-10

NCT06946251

Evaluation of Patients With Reduced and Preserved Ejection Fraction After Transcutaneous Diaphragm Stimulation

The aim of this study is to compare the effects of transcutaneous electrical diaphragm stimulation (TEDS) on diaphragm thickness, duration of mechanical ventilation, length of ICU stay, and right/left heart functions between patient groups with low and high ejection fraction (EF) levels.

This randomized controlled trial will record demographic data, diaphragm ultrasound measurements, and echocardiographic findings of patients who receive or do not receive TEDS for five consecutive days.

Participants will be divided into four groups according to their EF levels and whether or not they receive diaphragm stimulation:

Group 1: Patients with low EF who receive TEDS

Group 2: Patients with high EF who receive TEDS

Group 3: Patients with low EF who do not receive TEDS

Group 4: Patients with high EF who do not receive TEDS
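The four-group design above is a 2x2 layout (EF level by TEDS exposure). As a minimal sketch, the mapping can be written as a lookup table. The function name `teds_group` and the boolean flags are hypothetical, introduced only for illustration; the listing does not state the EF cut-off that separates "low" from "high", so the sketch takes that classification as an input rather than computing it.

```python
def teds_group(low_ef: bool, receives_teds: bool) -> int:
    """Return the study group number (1-4) described in the trial summary.

    low_ef: True if the patient is classified as low EF (cut-off not given
            in the source, so it is decided by the caller).
    receives_teds: True if the patient receives diaphragm stimulation.
    """
    groups = {
        (True, True): 1,    # low EF, receives TEDS
        (False, True): 2,   # high EF, receives TEDS
        (True, False): 3,   # low EF, no TEDS
        (False, False): 4,  # high EF, no TEDS
    }
    return groups[(low_ef, receives_teds)]
```

For example, `teds_group(low_ef=False, receives_teds=True)` corresponds to Group 2.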

Diaphragm thickness and echocardiographic assessments will be performed at baseline and at the end of the five-day TEDS intervention.

TEDS Application Protocol

In the ICU, TEDS is administered by a physiotherapist once daily for 20 minutes, five days a week, as part of the routine treatment protocol. The stimulation is delivered using a LONGEST LGT-231 device.

A transcutaneous current with a frequency of 30-50 Hz and a pulse width of 300-400 microseconds is applied to the diaphragm. The stimulation intensity is increased until visible muscle contraction is achieved.

Electrodes are placed as follows:

The first pair of electrodes is placed bilaterally between the 8th and 10th anterior intercostal spaces, lateral to the xiphoid process.

The second pair is placed along the mid-axillary line of the thorax, also between the 8th and 10th intercostal spaces.

Start date: 2025-05-01

NCT06950580

Investigation of the Effect of Abdominal Massage on Patients in Intensive Care Unit: Randomised Controlled Trial

Bowel motility can be affected by many factors such as immobilization, lack of fiber in the nutritional formula, inadequate fluid intake, and lack of privacy for toileting in patients hospitalized in the intensive care unit. Abdominal massage, which is one of the effective methods to increase intestinal motility, is accepted as an intervention that can be safely applied in patients.

The aim of this study is to investigate the effect of abdominal massage in patients hospitalized in the intensive care unit on sepsis, survival, time to discharge from the intensive care unit, and the amount and duration of antibiotic use.

Hypotheses

H0: Abdominal massage has no effect on sepsis, survival, time to discharge from the intensive care unit, or the amount and duration of antibiotic use in patients hospitalized in the intensive care unit.

H1: Abdominal massage has an effect on sepsis, survival, time to discharge from the intensive care unit, or the amount and duration of antibiotic use in patients hospitalized in the intensive care unit.

In this randomized controlled study, data will be recorded for patients who receive abdominal massage for 5 days during routine physiotherapy and for those who do not. Randomization will be performed in a 1:1 ratio using a computer-generated randomization schedule. The two groups will then be compared on sepsis, survival, time to discharge from intensive care, and the amount and duration of antibiotic use.
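The listing does not say how the 1:1 schedule is generated. One common way to produce such a schedule is permuted-block randomization, sketched below as an illustration only and not as the investigators' method. The function name, the arm labels, the block size of 4, and the seed are all assumptions, as is the sample size in the usage note, since the summary gives none for this trial.

```python
import random


def block_randomization_schedule(n_participants, block_size=4, seed=None):
    """Build a 1:1 allocation list using permuted blocks.

    Each block holds equal numbers of "massage" and "control" slots in
    random order, so the arms stay balanced after every complete block.
    All names and defaults here are illustrative assumptions.
    """
    if block_size <= 0 or block_size % 2 != 0:
        raise ValueError("block_size must be a positive even number")
    rng = random.Random(seed)  # seeded generator makes the schedule reproducible
    schedule = []
    while len(schedule) < n_participants:
        half = block_size // 2
        block = ["massage"] * half + ["control"] * half
        rng.shuffle(block)  # randomize the order within this block
        schedule.extend(block)
    # The final block is truncated if n_participants is not a multiple
    # of block_size, which can leave a small imbalance.
    return schedule[:n_participants]
```

With a hypothetical 20 participants and the default block size, `block_randomization_schedule(20, seed=1)` yields 10 allocations per arm, because 20 is a multiple of the block size.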

Start date: 2025-05-01

13,996 publications (pharmaceutical) associated with Karadeniz Technical University

2025-12-01·Molecular Genetics and Genomics

Analysis of TSC1 and TSC2 genes and evaluation of phenotypic correlations with tuberous sclerosis

Article

Authors: Eser, Metin ; Hekimoglu, Gulam ; Turkyilmaz, Ayberk ; Kutlubay, Busra ; Sager, Safiye Gunes

2025-12-01·Journal of Clinical Immunology

Diverse Clinical and Immunological Profiles in Patients with IPEX Syndrome: a Multicenter Analysis from Turkey

Article

Authors: Ozturk, Necmiye ; Cavkaytar, Ozlem ; Bilgic Eltan, Sevgi ; Ozen, Ahmet ; Yakici, Nalan ; Yalcin Gungoren, Ezgi ; Amirov, Razin ; Can, Salim ; Gemici Karaarslan, Betul ; Orhan, Fazil ; Kiykim, Ayca ; Bekis Bozkurt, Hayrunnisa ; Catak, Mehmet Cihangir ; Kasap, Nurhan ; Bayram Catak, Feyza ; Baris, Safa ; Yorgun Altunbas, Melek ; Karakoc-Aydiner, Elif ; Bozkurt, Selcen ; Bal Cetinkaya, Fatma ; Sahin, Ali ; Arga, Mustafa

2025-08-01·The American Journal of Emergency Medicine

ChatGPT-supported patient triage with voice commands in the emergency department: A prospective multicenter study

Article

Authors: Imamoğlu, Melih ; Beşer, Muhammet Fatih ; Pasli, Sinan ; Hiçyilmaz, Halil İbrahim ; Karakurt, Büşra ; Kirimli, Esma Nilay ; Şahin, Abdul Samet ; Unutmaz, İhsan ; Yadigaroğlu, Metin ; Ayhan, Asu Özden

100 项与 Karadeniz Technical University 相关的药物交易

登录后查看更多信息

100 项与 Karadeniz Technical University 相关的转化医学

登录后查看更多信息

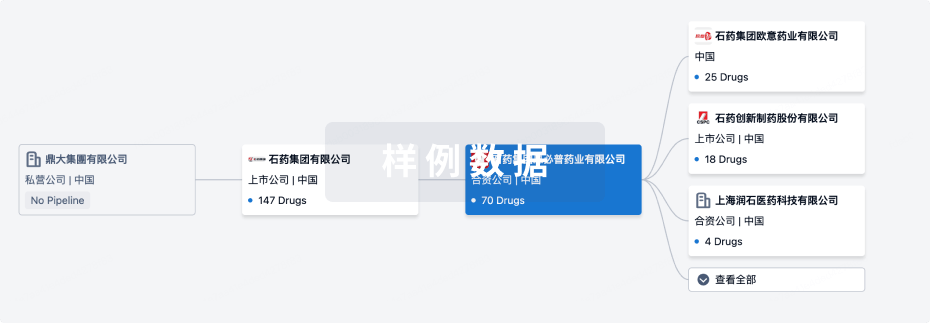

组织架构

使用我们的机构树数据加速您的研究。

登录

或

管线布局

2025年09月20日管线快照

无数据报导

登录后保持更新

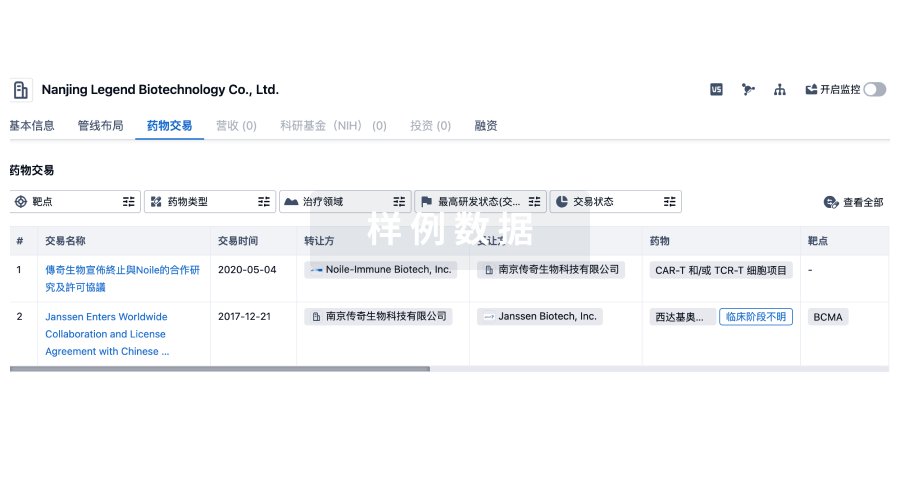

药物交易

使用我们的药物交易数据加速您的研究。

登录

或

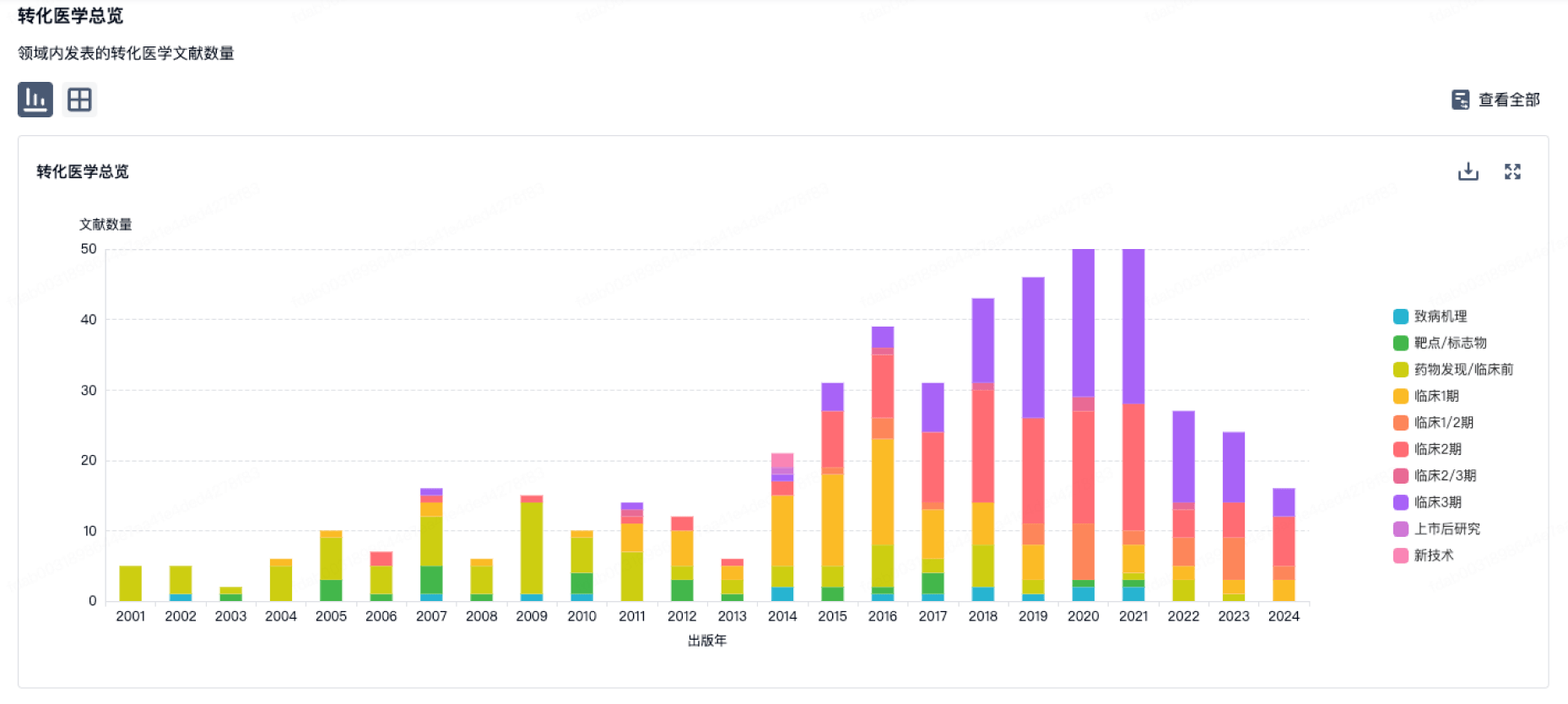

转化医学

使用我们的转化医学数据加速您的研究。

登录

或

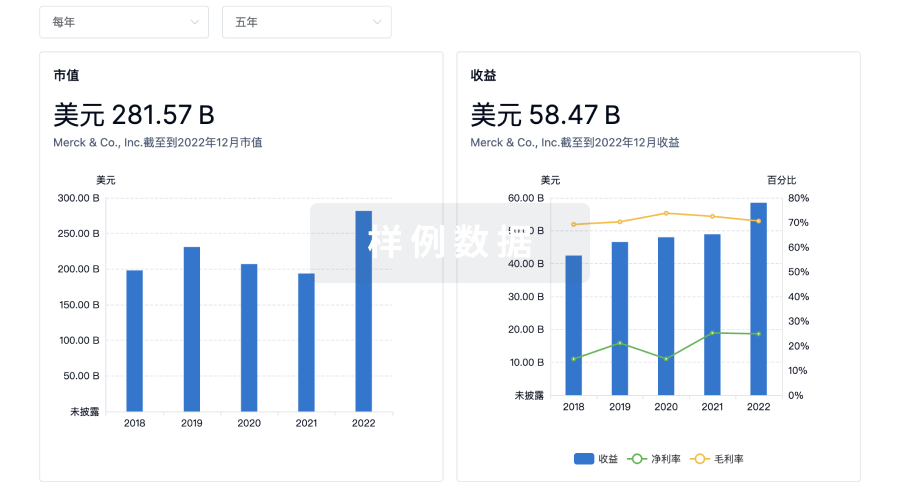

营收

使用 Synapse 探索超过 36 万个组织的财务状况。

登录

或

科研基金(NIH)

访问超过 200 万项资助和基金信息,以提升您的研究之旅。

登录

或

投资

深入了解从初创企业到成熟企业的最新公司投资动态。

登录

或

融资

发掘融资趋势以验证和推进您的投资机会。

登录

或

Eureka LS:

全新生物医药AI Agent 覆盖科研全链路,让突破性发现快人一步

立即开始免费试用!

智慧芽新药情报库是智慧芽专为生命科学人士构建的基于AI的创新药情报平台,助您全方位提升您的研发与决策效率。

立即开始数据试用!

智慧芽新药库数据也通过智慧芽数据服务平台,以API或者数据包形式对外开放,助您更加充分利用智慧芽新药情报信息。

生物序列数据库

生物药研发创新

免费使用

化学结构数据库

小分子化药研发创新

免费使用