
Updated: 2025-05-07

Dystonia 18

Dystonia type 18


Basic information

Synonyms: DYSTONIA 18, DYSTONIA 18 (disorder), DYT18 (+13 more)

Summary: A form of paroxysmal dyskinesia characterized by painless attacks of dystonia of the extremities, triggered by prolonged physical activity. The prevalence is unknown, but 20 sporadic cases and 9 families have been described to date. The attacks last between 5 minutes and 2 hours and are typically restricted to the exercised limbs. The dystonic movements are usually bilateral and are aggravated by cold, psychological stress, fatigue, and lack of sleep. The pathophysiology remains unknown, but some familial cases have been associated with mutations in the SLC2A1 gene (1p34.2). Both sporadic cases and familial cases with autosomal dominant inheritance have been reported.

Associations

2 drug targets associated with Dystonia 18

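Mechanism of action: Immunostimulant

Highest development phase: Approved

First approval country/region: United States

First approval date: 2021-07-16

Mechanism of action: Sphingosine-1-phosphate inhibitor

Active organizations:

Originator:

Highest development phase: Phase 2

First approval country/region: -

First approval date: -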

15 clinical trials associated with Dystonia 18

NCT06386159

Clinical Application of Comprehensive Intervention Scheme for Post-extubation Dysphagia Based on Neuroregulatory Mechanism

This study aims to establish a practical, comprehensive intervention program for post-extubation dysphagia (PED) in adult ICU patients. The program will be based on the best available evidence on assessment and intervention and refined through expert panel discussion and the Delphi method. Building on preliminary experimental results of vagus nerve stimulation in PED patients, we will further develop a comprehensive intervention program for post-extubation dysphagia based on neuromodulatory mechanisms. Finally, the effect of implementing this program will be verified through clinical application.

Start date: 2024-03-15

Sponsors/collaborators:

JPRN-UMIN000046443

Evaluation of the usefulness of a swallowing electrical stimulator in maintaining swallowing function in patients after extubation: a randomized controlled trial

Start date: 2022-07-01

Sponsors/collaborators:

NCT04112862

Effects of Sodium Lactate Infusion in Patients With Glucose Transporter 1 Deficiency Syndrome (GLUT1DS)

This study investigates the effect of lactate infusion on epileptic discharges on EEG and seizure frequency in glucose transporter 1 deficiency syndrome (GLUT1DS) patients.

Start date: 2022-05-20

100 clinical results associated with Dystonia 18


100 translational medicine items associated with Dystonia 18


0 patents (medicine) associated with Dystonia 18


1,848 publications (medicine) associated with Dystonia 18

2025-06-01·Microbial Pathogenesis

Molecular characterization of porcine epidemic diarrhea virus in Sichuan from 2023 to 2024

Article

Authors: Yan, Wenjun; Yang, Xin; Fan, Hua; Xie, Bo

2025-06-01·Computer Methods and Programs in Biomedicine

Global-Local Transformer Network for Automatic Retinal Pathological Fluid Segmentation in Optical Coherence Tomography Images

Article

Authors: Chen, Yuyang; Sheng, Xinyu; Wei, Hao; Li, Feng; Zou, Haidong; Huang, Song

2025-05-01·Acta Ophthalmologica

Correlation of retinal fluid and photoreceptor and RPE loss in neovascular AMD by automated quantification, a real‐world FRB! analysis

Article

Authors: Schmidt‐Erfurth, Ursula; Reiter, Gregor S.; Bogunovic, Hrvoje; Leigang, Oliver; Mares, Virginia; Gumpinger, Markus; Barthelmes, Daniel; Nehemy, Marcio B.

5 news items (medicine) associated with Dystonia 18

2024-04-06

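Abstract: Porcine epidemic diarrhea (PED) is a highly contagious enteric disease caused by porcine epidemic diarrhea virus (PEDV). Mortality in neonatal piglets can reach 100%. Highly pathogenic genogroup 2 (G2) PEDV emerged in 2010 and has caused enormous economic losses to the global pork industry. It was first reported in the United States in 2013 and spread nationwide, bringing disaster to many pork producers during 2013–2014. Vaccinating pregnant sows/gilts with live attenuated vaccines (LAVs) is the most effective strategy for inducing lactogenic immunity, which gives suckling piglets passive protection against PED through colostrum and milk. However, after about a decade of effort, there is still no safe and effective vaccine. One of the biggest concerns is that LAVs may revert to virulence in the field. This article reviews the current status and main difficulties of PEDV LAV development. We also discuss the function of transcription regulatory sequences (TRSs) in PEDV transcription, their contribution to recombination, and possible strategies for preventing LAV reversion.

Keywords: porcine epidemic diarrhea virus; live attenuated vaccine; recombination; safety

1 Overview

PEDV is a major porcine enteric pathogen. It causes severe intestinal infection in neonatal piglets, leading to acute diarrhea, vomiting, dehydration, and death. PED was first identified in the United Kingdom in 1971 and then spread to several pork-producing countries in Europe, while the causative agent was still unknown. In 1978, researchers in Belgium first identified PEDV as the etiological agent. It caused widespread infections on European farms, with severe losses of suckling pigs in the 1970s and 1980s. It then became rare in Europe in the 1990s, with sporadic outbreaks in adult pigs or mild signs in suckling pigs. The first PED case in Asia was reported in 1982, and outbreaks continued into the 1990s. The disease then became endemic until highly virulent PEDV strains emerged in China in 2010. PEDV entered the United States in 2013 and spread rapidly across the country. During the 2013–2014 epidemic, more than 50% of the farms tracked by the Swine Health Monitoring Project had new outbreaks. Economic losses were estimated at USD 0.9–1.8 billion.

PEDV is a member of the genus Alphacoronavirus, family Coronaviridae, order Nidovirales. It is one of the largest RNA viruses. Its positive-sense, single-stranded RNA genome is approximately 28,000 nucleotides long, with a 5' cap and a 3' polyadenylated tail. Because their RNA genomes are so large, coronaviruses, including PEDV, have evolved a proofreading enzyme that balances the conflict between replication fidelity and genetic diversity. PEDV has evolved by accumulating mutations and recombination events, which has increased viral fitness.

Two large ORFs, ORF1a and ORF1b, occupy the 5'-proximal two-thirds of the PEDV genome. They are followed by ORFs for four structural proteins and one accessory protein: spike (S), ORF3, envelope (E), membrane (M), and nucleocapsid (N). During replication, ORF1a and ORF1b are translated into two polyprotein precursors. Viral proteases then process these precursors post-translationally into 16 nonstructural proteins (nsp1–nsp16). Among all PEDV-encoded proteins, the S glycoprotein forms trimeric projections on the virion surface. It is responsible for attachment to host receptors and mediates viral entry through membrane fusion, initiating infection. The S gene is also a hypervariable region among PEDV strains, so it can serve as a phylogenetic marker of PEDV genetic diversity.

Based on the genetic diversity of the S protein, PEDV can be divided into two genogroups, genogroup 1 (G1) and genogroup 2 (G2). These are further divided into the subgroups G1a and G1b and G2a, G2b, and G2c. G1a includes the prototype strain CV777 identified in Belgium, and all G1a strains share high genetic homology with CV777. The newly emerged highly virulent strains are classified as G2. During replication, coronaviruses synthesize a set of subgenomic RNAs (sgRNAs) that share the same 5' end. As a result, homologous recombination between different strains occurs at a relatively high rate in coronaviruses, including PEDV. Recombination events between G1a and G2 strains have probably given rise to S-INDEL strains, G1b, and the newly defined G2c strains, indicating that PEDV evolution is complex and rapid. Herd immunity and biosecurity remain the most effective means of preventing PEDV. However, new variants keep emerging, including strains arising from recombination, and they have caused vaccine failures and hampered PEDV prevention and control. This article reviews the molecular basis of PEDV transcription and the rational design of safe and effective PEDV LAVs.

2 Functional elements in PEDV replication and transcription

The first step of PEDV infection is recognition of, and binding to, host receptors by the S protein. The host receptors of several coronaviruses have been identified. Middle East respiratory syndrome coronavirus (MERS-CoV) binds the dipeptidyl peptidase 4 (DPP4) receptor. Severe acute respiratory syndrome coronavirus (SARS-CoV) and SARS-CoV-2 bind angiotensin-converting enzyme 2 (ACE2) to initiate infection. Several coronaviruses use aminopeptidase N (APN) as a receptor. These include transmissible gastroenteritis virus (TGEV) and its variant porcine respiratory coronavirus (PRCV), porcine deltacoronavirus (PDCoV), human coronavirus (HCoV)-229E, and feline coronavirus type II.

APN is a 150 kDa transmembrane proteolytic enzyme that cleaves neutral or basic NH2-terminal residues from peptides. It is widely expressed in epithelial cells, macrophages, and granulocytes. APN participates in a broad range of biological processes, including cell proliferation, motility, adhesion, and endocytosis. Earlier studies suggested that APN is a co-receptor for PEDV infection, for several reasons:
- The PEDV S protein binds human and porcine APN efficiently.
- The nonpermissive canine kidney cell line MDCK supports PEDV infection and serial passage once it exogenously expresses human or porcine APN (pAPN).
- Conversely, pretreating susceptible and permissive cells with anti-pAPN antibodies blocks productive PEDV infection.
- PEDV also replicates in a transgenic mouse model expressing pAPN.

However, the APN-deficient Vero cell line and pAPN-knockout pigs both support PEDV replication. PEDV must therefore use an as-yet unknown receptor.

After the virus enters the host cell, the PEDV genome is released into the cytoplasm and viral replication begins. The positive-strand RNA genome serves as the mRNA for the initial translation of viral proteins. ORF1a and ORF1b encode two polyproteins, pp1a and pp1ab. These are autoprocessed into 16 nonstructural proteins (nsps) by two viral proteases: papain-like protease (PLpro) in nsp3 and 3C-like protease (3CLpro) in nsp5 (Figure 1).

Figure 1. PEDV genome organization and its structural (S, E, M, and N), nonstructural (nsp1–16), and accessory (ORF3) proteins. Green and red bars in the 5' UTR or upstream of each ORF represent the leader TRS and body TRS regions. Abbreviations: numbers in pp1a and pp1ab denote nonstructural proteins 1–16; PLpro, papain-like protease; 3CLpro, 3C-like (chymotrypsin-like) protease; RdRp, RNA-dependent RNA polymerase; ExoN, exoribonuclease; N7-MTase, N7-methyltransferase; EndoU, endoribonuclease; 2'-O-MTase, 2'-O-methyltransferase; S, spike protein; E, envelope protein; M, membrane protein; N, nucleocapsid protein.

During replication, nsp1 is rapidly produced and released from the polyprotein after translation. It targets the host translation machinery and the interferon (IFN) response system, inducing host mRNA degradation and antagonizing IFN responses. The replication–transcription complex (RTC) carries out viral RNA synthesis and is composed of multiple viral nonstructural proteins.
- nsp3–nsp6 remodel intracellular membranes and assemble double-membrane vesicles (DMVs), the sites of viral RNA synthesis. The remaining nsps carry the core enzymatic functions of RNA synthesis.
- The nsp7–nsp8 heterodimer initiates nascent RNA synthesis and produces short RNA primers for replication. Together with nsp12, it forms the minimal core of the coronavirus replication complex.
- nsp12 is the RNA-dependent RNA polymerase (RdRp) that performs RNA synthesis within the complex.
- The RNA-binding protein nsp9 and the helicase nsp13 also participate in elongation of nascent RNA.
- The bifunctional nsp14 has 3'–5' exonuclease (ExoN) and N7-methyltransferase (MTase) activities. The ExoN domain proofreads by removing misincorporated nucleotides during RNA elongation, maintaining high replication fidelity. The N7-MTase domain, together with the 2'-O-MTase nsp16, mediates capping of viral RNA, with nsp10 acting as a cofactor.
- nsp15 is a uridine-specific endoribonuclease (EndoU) conserved among coronaviruses. It processes viral dsRNA so that host defenses do not detect it, enabling immune evasion.

Once the RTC has been translated and assembled, viral RNA synthesis proceeds by genome replication and by transcription. In genome replication, the RTC recognizes the positive-strand PEDV genome and copies it continuously, producing a complementary negative-strand genome. The negative-strand copies then serve as templates for more nascent positive-strand genomic RNA, which is eventually packaged into progeny virions.

Unlike continuous genome replication, PEDV transcription is discontinuous. During negative-strand RNA synthesis, a discontinuous mechanism produces a set of 5'- and 3'-coterminal sgRNAs. These negative-strand sgRNAs then serve as templates for positive-strand subgenomic mRNAs (sgmRNAs). All sgmRNAs share the same 5' region, called the leader sequence, which is located at the beginning of the coronavirus genome. Synthesis of sgmRNAs requires a set of cis-acting elements called transcription regulatory sequences (TRSs). TRSs are short, highly homologous sequences located upstream of each body ORF (the "body TRS") and downstream of the 5' leader sequence (the "leader TRS") (Figure 1).

During negative-strand synthesis, the RTC encounters the body TRSs of the N, M, E, ORF3, and S genes in the 3'-proximal one-third of the genome, and elongation of the nascent strand is interrupted. Here the body TRS acts as a "slow down" or "stop" signal. The RTC either reads through to transcribe the next ORF, or it switches template to the leader TRS, producing an sgRNA that carries the reverse complement of the 5' leader sequence (Figure 2).

Figure 2. Discontinuous transcription model of PEDV. When the RTC encounters a TRS region, it either "reads through" or "switches template", generating a discontinuous sgRNA. Modified from Baker, S.C., 2008.

Template switching at a body TRS involves an interaction between two sequences: the reverse-complementary TRS on the nascent negative strand (the negative-sense, or nascent anti-body, TRS) and the positive-strand leader TRS in the 5' UTR of genomic RNA. The TRS region contains a conserved core sequence (CS) of 6–8 nucleotides, flanked at its 5' and 3' ends by variable sequences. In a systematic analysis, Yang et al. found that the leader TRS-CS is conserved within a genus but differs between genera. One exception is the subgenus Embecovirus (genus Betacoronavirus), whose TRS-L CS resembles that of alphacoronaviruses rather than other betacoronaviruses. For example, alphacoronaviruses including TGEV, SADS-CoV, and PEDV share the TRS-CS 5'-CUAAAC-3'.

Body TRS-CSs, however, vary even among viruses of the same genus. TGEV has nine highly conserved body TRS-CSs with the sequence 5'-CUAAAC-3': one at the 5' end of each ORF (1a, S, 3a, 3b, E, M, N, and 7) and an internal CS in the S gene. For PEDV strain CV777, the body TRS-CSs of the E, M, and N genes were determined experimentally as 5'-CUAGAC-3', 5'-AUAAAC-3', and 5'-CUAAAC-3', respectively. Those of S and ORF3 were inferred to be 5'-GUAAAC-3' and 5'-CCUUAC-3'. PEDV body TRS-CSs can thus differ from the leader TRS-CS by up to three nucleotides. Previous studies reported that the CS plays a key role in directing base pairing and duplex formation between the nascent negative strand and the leader TRS site at the 5' end of the genome. In PEDV, the body TRS-CSs controlling different ORFs resemble the leader TRS-CS to varying degrees. This may be another way of controlling the abundance of the different sgmRNAs, and hence of the viral proteins.

Besides sequence similarity, RNA secondary structure is also considered a cis-acting element required for RNA synthesis. The structural role of the 5' UTR in genome replication was first demonstrated in a BCoV system based on defective interfering RNA (DI RNA). Four stem-loops (SLs), SL I, II, III, and IV, were predicted in the BCoV 5' UTR, and mutational analysis showed that they are essential for replication. Subsequently, three conserved SLs (SL1, SL2, and SL4) were identified in several coronaviruses:
- alphacoronaviruses: HCoV-NL63, HCoV-229E, and TGEV;
- betacoronaviruses: HCoV-OC43, HCoV-HKU1, SARS-CoV, and MHV;
- the gammacoronavirus IBV.

Using MHV as a model, these conserved structures were shown to be essential for viral replication.

- SL1 exists in equilibrium with a partially unfolded conformation. Destabilizing SL1 by reducing base pairing was lethal or reduced viral replication. Compensatory mutations that re-established SL1 base pairing restored replication to near wild-type levels.
- SL2 is the most conserved SL across all genera. It contains a U-turn motif that may mediate RNA–RNA interactions. Mutations destabilizing the SL2 stem markedly impaired MHV replication, lowering peak infectious titers and producing smaller plaques than wild-type MHV. Mutants with a destabilized SL2 also synthesized significantly less RNA than wild-type virus. Conversely, compensatory mutations that restored base pairing brought replication of these mutants back to levels comparable to wild type. Mutants carrying disruptive mutations that broke the stem were not viable. SL2 is therefore essential for viral replication through its regulation of RNA synthesis.
- SL4 is a long hairpin located downstream of the leader TRS. It has been proposed that the basal portion of SL4 is flexible. This flexibility may allow the transient long-range RNA–RNA interactions that lead to template switching during sgRNA synthesis.

Mfold analysis [http://www.unafold.org/mfold/applications/rna-folding-form.php (accessed 26 May 2022)] predicts four secondary structures within the 5' UTR of PEDV strain CV777: SL1, SL2, SL4, and SL5 (Figure 3). SL5 lies between nucleotide (nt) residues 123 and 305 and is additional to the conserved SLs. It is a large structure containing three hairpin loops and extends into ORF1a. To date, the functions of these secondary structures adjacent to the leader TRS in the 5' UTR remain unclear, and further experiments are needed to confirm their roles in PEDV RNA replication.

Figure 3. Secondary structure of the 5' UTR of the prototype PEDV strain CV777. The four stem-loops (SLs) were predicted with Mfold (http://www.unafold.org/mfold/applications/rna-folding-form.php, accessed 26 May 2022). The Gibbs free energy of each SL was calculated and is shown in kcal/mol.

3 Current status of PEDV vaccine development

Infection is initiated through the coordinated action of viral proteins and functional elements within the genome, and it triggers systemic and local mucosal immune responses in the host. An early study showed that the immune response elicited by prior exposure protects weaned pigs against reinfection. PED-exposed gilts can also transfer maternal immunity passively through the gut–mammary gland–secretory IgA axis, protecting up to 100% of their piglets against PED after challenge. This suggests that host immunity induced by viral replication, particularly lactogenic immunity, is an effective way to prevent PED. Won et al. and Lv et al. have reviewed PEDV vaccines, including LAVs, inactivated vaccines, vectored vaccines, and subunit vaccines.

Before 2010, G1a PEDV vaccines, both inactivated and live attenuated, effectively controlled PEDV outbreaks in Asian countries.
- Inactivated vaccine (Ma et al., 1994): based on the cell-adapted CV777 strain. After vaccination, the protection rate was 85.19% in 3-day-old pigs, and the passive protection rate in piglets was 85.0%.
- Bivalent inactivated TGEV–PEDV vaccine (same team, 1995): developed successfully and commercialized in China.
- CV777 LAV (Tong et al.): produced by serial in vitro passage of CV777. It protected 95.52% of 3- to 6-day-old piglets, with a passive protection rate of 96.2%.
- Bivalent PEDV–TGEV LAV (1999): active and passive protection rates against PEDV reached 97.7% and 98%, respectively.

The two bivalent vaccines were widely used in China and effectively controlled the spread of PEDV and TGEV until highly virulent PEDV variants suddenly emerged in 2010. Japan and South Korea also developed vaccines:
- The Japanese strain PEDV 83P-5 was attenuated by serial passage in Vero cells and is marketed as an LAV. Notably, vaccinating sows with 83P-5 passively protected 80% of piglets from death after G2 PEDV challenge.
- Two virulent Korean strains, SM98-1 and DR-13, were also passaged and attenuated in vitro. SM98-1 has been used as an intramuscular LAV or inactivated vaccine, and DR-13 as an oral LAV. Song et al. showed that after late-gestation sows received DR-13 orally, 87% of suckling piglets resisted homologous challenge.

From late 2010, highly virulent PEDV variants of the G2 clade emerged. China experienced severe PED outbreaks that devastated its swine industry, and the variants spread to other Asian countries, North America, and Europe (Ukraine). In 2015, two multivalent vaccines were officially approved in China:
- a trivalent attenuated vaccine developed from TGEV, PEDV (CV777 strain), and porcine rotavirus;
- a bivalent attenuated vaccine containing TGEV and PEDV (ZJ08 strain, G1b).

Because cross-protection between G1 and G2 strains is poor, their efficacy is questionable. A comparative study evaluated G1b- and G2b-based vaccines against G2b challenge in 2-week-old weaned piglets. The G2b-based inactivated vaccine protected adequately against G2b challenge: fecal PEDV RNA fell by 3–4 logs at peak shedding, and shedding was shorter. The G1b-derived vaccine failed. Similar observations were made in South Korea and Thailand, where commercial G1-derived vaccines did not fully protect against the currently circulating G2 strains. Highly virulent PEDV remains one of the major porcine viral pathogens in China, which holds more than 50% of the world's pig inventory. Effective vaccines against G2 strains are therefore urgently needed to prevent and control this lethal infection in piglets.

Most licensed PEDV vaccines are inactivated vaccines or LAVs consisting of killed or attenuated whole pathogens. Such whole-pathogen vaccines can elicit strong protective immune responses. Collin et al. developed a G2b inactivated vaccine based on the US variant isolate NPL-PEDV 2013 P10.1. Given intramuscularly, the vaccine elicited considerable humoral immunity against PEDV in cell-based virus neutralization assays. However, inactivated vaccines cannot replicate and induce only a narrow range of immune responses. Their immunity is also less durable than that induced by LAVs, so multiple booster doses are needed. Moreover, the strong passive lactogenic immunity triggered by LAVs is the most promising and effective way to protect newborn suckling piglets from enteric diseases, including PED.

The conventional way to develop LAVs is to attenuate the virus by serial passage in tissue culture from a non-natural host. Several cell-attenuated G2 strains have been reported, including the US isolate PC22A and the Asian strains YN, Pingtung-52, and KNU-141112. Hou et al. comprehensively reviewed the mutation patterns and molecular mechanisms of attenuation in these four strains. In general, cell-adapted strains are attenuated in piglets and highly immunogenic, eliciting high levels of neutralizing antibodies. However, there are several concerns about the protective efficacy of these G2 PEDV-based LAV candidates.

First, the most vulnerable population is suckling pigs, which do not have time to develop active immunity before they encounter the virus. The ideal PEDV vaccination strategy is therefore to induce protective lactogenic immune responses in sows/gilts. PEDV challenge studies in sows/gilts are expensive and labor-intensive, so scientists use cell-based virus neutralization assays to measure vaccine-induced immune responses as a possible indicator of protection. Neonatal pig models are used to test viral attenuation, and nursery (weaned) pigs are used to assess immunogenicity and to screen promising candidates. For example, with the cell-culture-adapted PC22A strain, passages 100 (P100) and 120 (P120) were fully attenuated in weaned pigs but only partially attenuated in neonatal piglets. However, after virulent challenge, P100 induced higher serum PEDV IgA, IgG, and virus-neutralizing (VN) antibody titers than P120, and more PEDV IgA antibody-secreting cells. These results show that PEDV attenuation is a double-edged sword: an LAV candidate that is fully attenuated in piglets cannot induce sufficient lactogenic immunity in sows. Older pigs resist PEDV infection and disease better than piglets. Full attenuation in piglets therefore usually means that the attenuated virus replicates poorly and is less immunogenic in older pigs, so protective immunity is weak.

Second, vaccine development must account for the low cross-protection between G1 and G2 and for the limited cross-protection between G2a and G2b. Liu et al. showed that inactivated vaccines based on CH/HBXT/2018 (G2a) and CH/HNPJ/2017 (G2b) both induced significantly lower VN antibody titers against the heterologous strain than against the homologous strain. Further vaccine studies therefore need heterologous challenge to clarify cross-protection.

4 Risk and prevention of virulence reversion of PEDV LAVs

Besides protective efficacy, one of the biggest obstacles to using PEDV LAVs is safety. Attenuated strains carry mutations, introduced by serial passage or molecular engineering, that render them nonpathogenic. However, some vaccine strains have reverted to virulence while passing through primary vaccine recipients, in two ways:
1. accumulation of mutations within the viral genome;
2. recombination.

In this section, we review strategies for countering reversion events in PEDV LAV development.

There are two ways to generate promising attenuated vaccine candidates:
1. The classical approach passages the virus serially in a non-natural host or environment, so that it adapts to new conditions and replicates less in the natural host.
2. Reverse genetics modifies several genes that are essential for viral pathogenicity.

However, host immunodeficiency or genetic instability of the attenuated virus can lead to reversion to virulence, either because the attenuating mutations revert or because compensatory mutations arise elsewhere in the genome.

The 2'-O-MTase nsp16 is highly conserved among coronaviruses and mediates capping of genomic RNA and sgmRNAs during replication and transcription. In an aged mouse model, a SARS-CoV nsp16 mutant (dNSP16) proved effective as a vaccine candidate against heterologous challenge. However, in a mouse model lacking functional B and T cells (RAG−/−), 62.5% (5/8) of immunocompromised mice vaccinated with dNSP16 lost weight and died, which did not happen in aged mice. This indicates reversion to virulence. The introduced nsp16 mutations were retained in the revertants, but six mutations were found in nsp3, nsp12, and nsp15 that may act as compensatory mutations.

A previous study showed that the PEDV nsp14-ExoN mutant E191A was markedly attenuated but highly genetically unstable, with reversions observed both in vitro and in vivo. Similar results were reported for recombinant MHV and SARS-CoV carrying a disrupted nsp14 ExoN domain. An MHV mutant with engineered ExoN inactivation was passaged 250 times in vitro (MHV-ExoN(−) P250). No reversion was found at the ExoN(−) active site. However, the mutant accumulated eight times more mutations than wild-type MHV and showed increased replication fidelity, suggesting that mutations compensating for ExoN function had arisen during replication.

To mitigate such mutation-driven reversion, several mutations in different genes can be combined, so that the virus is attenuated through different mechanisms. For example, we generated the recombinant PEDV icPC22A-KDKE4A-SYA, which carries an inactivated nsp16 2'-O-MTase and a mutated S protein endocytosis signal. As noted above, loss of nsp16 2'-O-MTase function attenuates SARS-CoV, MHV, and MERS-CoV in mice. The conserved YxxΦ motif in the cytoplasmic tail of the S protein regulates the amount of S protein on the surface of infected cells and acts as a virulence factor. icPC22A-KDKE4A-SYA retained the introduced mutations after three passages in pigs, indicating genetic stability in vivo. SARS-CoV LAV development used a similar approach, combining inactivated nsp14-ExoN and nsp16 2'-O-MTase to generate dNSP16/ExoN. Unlike dNSP16, dNSP16/ExoN did not cause major disease. It was cleared from immunocompromised mice by 30 days after vaccination without reverting to virulence. Overall, combining several mutations that attenuate the virus through different pathways offers a way to counter mutation-driven reversion of coronavirus LAVs.

Recombination is also an important evolutionary force for many RNA viruses, especially coronaviruses, whose recombination rate can reach 20% in mixed infections with closely related strains. Recombination-driven reversion, in which a vaccine strain recombines with a wild-type strain to produce a new variant, is an obstacle to LAV use. It has caused vaccine failures with LAVs against several animal viruses, including canine parvovirus, infectious bursal disease virus, bovine herpesvirus 1, and the coronaviruses IBV and PEDV. Recombinant PEDV variants have been found in several major pig-producing provinces of China. One variant that was highly pathogenic in the field arose from recombination between a low-pathogenicity vaccine strain and a virulent field strain.

There are three general mechanisms of viral genome recombination:
- (i) breakage and repair in DNA genomes;
- (ii) polymerase template switching in RNA genomes;
- (iii) reassortment of segments in segmented RNA genomes.

As nonsegmented RNA viruses, coronaviruses undergo both kinds of template-switching recombination. Intramolecular recombination occurs within the same molecule: the replicase switches between the leader and body TRS regions to produce the set of sgmRNAs. Intermolecular recombination occurs between two molecules: during RNA synthesis, the replicase jumps from a donor template of one parental strain to an acceptor template of another that shares homologous sequence, occasionally producing recombinant progeny. A systematic analysis of coronavirus TRS sites and recombination events showed that nearly 10% of body TRS regions lie in breakpoint hotspots and that recombination hotspots are frequently associated with body TRSs. The TRS circuit has therefore become a major target for blocking intermolecular recombination during coronavirus replication.

A recombination-resistant SARS-CoV was designed by rewiring the TRS so that it differed from the wild-type TRS by three nucleotides. The rewired TRS circuit is incompatible with the wild-type circuit, so chimeric viruses carrying a mixture of wild-type and rewired TRSs could not be rescued. The same group later optimized the regulatory circuit with a 7-nt rewired TRS that has enhanced genetic stability. This genome can serve as an effective recombination-resistant platform for developing attenuated SARS-CoV vaccines. Rewiring the TRS circuit of PEDV poses two difficulties:
1. Most coronaviruses have highly consistent TRS-CSs, but PEDV TRS-CSs are remarkably diverse, and body TRS-CSs can differ from the leader TRS-CS by up to three nucleotides (see Section 2).
2. In the PEDV genome, all body TRS-CSs overlap the upstream ORFs. Mutations introduced into a TRS region may therefore change the amino acid sequence of the upstream ORF, causing deleterious mutations.

New approaches, other than recoding the TRS-CSs directly, are needed to prevent TRS-associated, recombination-driven reversion.

The first possible approach is to redesign the TRS-CSs that overlap upstream ORFs using silent mutations, which preserve the original amino acid sequence. A conservative amino acid substitution strategy could also be used. This replaces an amino acid in the protein with another of similar biochemical properties.

The secondary structure of TRS regions is a second target. Beyond sequence similarity, the RNA secondary structure of TRS regions is considered a cis-acting element that also regulates transcription. As discussed in Section 2, the secondary structure of the PEDV 5' UTR plays an important role in viral replication. However, information for accurately predicting secondary structures is limited, especially for body TRSs. Working with SARS-CoV-2, researchers have described how coronavirus genome structure changes during the viral life cycle. For example, the RNA genome inside virions undergoes major conformational changes compared with viral RNA in infected cells and appears more tightly compacted. The secondary structure of the CV777 5' UTR has been predicted (Figure 3). Recoding the PEDV TRS system by targeting key structural elements within body TRS regions, however, would benefit from a description of how the PEDV genome structure changes during replication. Structural changes in these regions may also regulate RTC template switching and thus alter the transcription circuit. With these approaches, the recoded body TRS-CSs would be incompatible with the wild type while keeping only conservative substitutions in the upstream ORFs.

Finally, the PEDV genome could be restructured by inserting an artificial gap that separates the TRS region from the upstream ORF, which disrupts the original TRS site. The introduced gap could then serve as a functional element regulating PEDV transcription, and the transcription circuit could be rewired as previously described for SARS-CoV.

We are currently designing a remodeled TRS that barely alters the original secondary structure of the 5' UTR. A remodeled PEDV mutant, RMT, has been rescued and replicates efficiently in vitro and in vivo. RMT was partially attenuated in neonatal piglets and induced partial protection (unpublished data). It also recombined less with a wild-type S-INDEL PEDV strain. RMT could therefore serve as a platform for developing recombination-resistant PEDV LAVs.

5 Conclusions

Emerging highly virulent PEDV has caused large-scale outbreaks with high mortality in suckling piglets and major losses to the pork industry, yet few vaccines prevent the disease effectively. Because newborn piglets are so vulnerable, the most effective vaccination strategy is to induce strong lactogenic immunity in pregnant sows. Protective neutralizing antibodies are then passed to suckling piglets through colostrum and milk. Active mucosal immunity that protects the sow's intestine also plays a vital role in this process. Previous studies of TGEV, another porcine enteric coronavirus, showed that only immunization with live virus, and not inactivated or subunit vaccines, triggers sufficient lactogenic immunity. Using LAVs in sows, which readily trigger lactogenic immunity, is therefore a promising approach to preventing and controlling PED. Homologous and heterologous challenge studies will be needed to demonstrate cross-protection against field strains. In addition, the risk that attenuated strains revert to virulence remains unresolved and hinders LAV use. Reverse genetics and newly developed methods could combine several attenuating mutations that do not reduce viral immunogenicity (for example, nsp1 mutations) with rewired TRSs. This may improve the genetic stability of vaccine candidates and make them more resistant to mutation- and recombination-driven reversion.

Reference: Niu X, Wang Q. Prevention and Control of Porcine Epidemic Diarrhea: The Development of Recombination-Resistant Live Attenuated Vaccines. Viruses. 2022 Jun 16;14(6):1317. doi: 10.3390/v14061317. PMID: 35746788; PMCID: PMC9227446.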
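Section 2 of the review above lists the leader TRS core sequence shared by alphacoronaviruses (5'-CUAAAC-3') and the body TRS-CSs of PEDV CV777. Section 4 proposes recoding TRS-CSs that overlap upstream ORFs with silent mutations. The Python sketch below illustrates both points. It is not the authors' method. It counts mismatches between each body TRS-CS and the leader TRS-CS, and it checks whether a candidate change is synonymous under the standard genetic code. The function names are hypothetical. The usage example assumes the TRS-CS starts on a codon boundary of the upstream ORF; real PEDV TRS-CSs need not be in that frame.

```python
"""Sketch: compare PEDV body TRS core sequences (CS) with the leader TRS-CS
and test whether a recoding is silent. Sequences come from the review text;
function names and the codon-frame alignment in the demo are hypothetical."""

from itertools import product

# Leader TRS-CS shared by alphacoronaviruses such as TGEV, SADS-CoV and PEDV.
LEADER_TRS_CS = "CUAAAC"

# Body TRS-CSs of PEDV strain CV777: E, M, N determined experimentally;
# S and ORF3 inferred (values as given in Section 2).
BODY_TRS_CS = {
    "S": "GUAAAC",
    "ORF3": "CCUUAC",
    "E": "CUAGAC",
    "M": "AUAAAC",
    "N": "CUAAAC",
}

# Standard genetic code in the conventional U, C, A, G ordering of codons.
_BASES = "UCAG"
_AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {
    "".join(codon): aa
    for codon, aa in zip(product(_BASES, repeat=3), _AMINO_ACIDS)
}


def mismatches(a: str, b: str) -> int:
    """Number of positions at which two equal-length sequences differ."""
    if len(a) != len(b):
        raise ValueError("sequences must have equal length")
    return sum(x != y for x, y in zip(a, b))


def translate(rna: str) -> str:
    """Translate an in-frame RNA sequence whose length is a multiple of 3."""
    if len(rna) % 3:
        raise ValueError("length must be a multiple of 3")
    return "".join(CODON_TABLE[rna[i:i + 3]] for i in range(0, len(rna), 3))


def is_silent(original: str, recoded: str) -> bool:
    """True if the recoded in-frame sequence encodes the same amino acids."""
    return len(original) == len(recoded) and translate(original) == translate(recoded)


if __name__ == "__main__":
    for gene, cs in BODY_TRS_CS.items():
        print(gene, cs, mismatches(LEADER_TRS_CS, cs))
    # Hypothetical frame: read CUA|AAC as two codons of an upstream ORF.
    print(is_silent("CUAAAC", "UUAAAC"))  # Leu-Asn vs Leu-Asn: synonymous
    print(is_silent("CUAAAC", "CUAGAC"))  # Asn vs Asp at codon 2: not synonymous
```

With the CV777 sequences, the mismatch counts are 1, 3, 1, 1, and 0 for S, ORF3, E, M, and N. This matches the statement that body TRS-CSs differ from the leader TRS-CS by up to three nucleotides. The second check shows how a one-nucleotide change (C to U at the first position) could alter a TRS-CS without changing the encoded amino acids, if the assumed reading frame held.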

Vaccines

2024-03-21

▲ 详细日程见文末 ▲1摘要在过去的三十年里,猪流行性腹泻病毒(PEDV)对韩国国内养猪业构成了重大的金融威胁。PEDV感染主要导致受累分娩至育肥(FTF)猪群持续流行,导致地方性猪流行性腹泻(PED),随后全年反复暴发。本综述旨在鼓励养猪生产者、兽医和研究人员之间的合作,以提供答案,以加强我们对PEDV的理解,以预防和控制地方性PED并为下一次流行病或大流行做好准备。我们发现,合作实施PED风险评估和定制的基于四支柱的控制措施对于阻断受影响畜群中的地方性PED链至关重要:前者可以识别农场风险因素,而后者旨在通过畜群免疫稳定和病毒消除来弥补或改善弱点。在地方性PED下,病毒在浆液中的长期存活。无症状感染的后备母猪(“特洛伊猪”) 可以将病毒传播到产房,这是FTF猪场根除PEDV的主要挑战,并突出了在猪群及其环境中主动监测和监测病毒的必要性。本文强调了目前对分子流行病学和市售疫苗的了解,以及控制PEDV的风险评估和定制策略。这稳定群体免疫和消除病毒传播的干预措施可能是建立区域或国家PED根除计划的基石。关键词:猪流行性腹泻病毒,地方性PED,疫苗接种,风险评估,控制策略。2介绍冠状病毒(CoVs)是一大类病毒,可引起人类和动物的呼吸道和消化道疾病,从而对人类和动物健康构成重大威胁。冠状病毒是最大的单链RNA病毒,可分为四个属:冠状病毒家族中的Alpha-、Beta-、Gamma-和德尔塔冠状病毒属。迄今为止,已鉴定出七种猪冠状病毒:传染性胃肠炎病毒(TGEV)、猪呼吸道冠状病毒、猪流行性腹泻病毒(PEDV)、嵌合猪肠道冠状病毒和猪急性腹泻综合征冠状病毒。在阿尔法冠状病毒属中,猪血凝性脑脊髓炎病毒在Beta冠状病毒属中,猪德尔塔冠状病毒在德尔塔冠状病毒属中。猪冠状病毒的流行困扰着全世界的猪群,并造成了跨物种传播的潜在风险。近几十年来,猪流行性腹泻(porcine epidemic diarrhea, PED)的病原体PEDV成为全球养猪业的严重威胁,造成重大经济损失。PEDV目前被归类为经典基因型1a(G1a),最初出现在英国,并在1970年代摧毁了许多欧洲国家的养猪业。然而,在1980至1990年代,欧洲的急性PEDV流行明显下降,此后仅报告了零星的疫情。PEDV(G1a)在1980年代初进入亚洲,损害了亚洲猪肉产业,并构成了巨大的金融威胁。与欧洲的PEDV相比,亚洲的PEDV疫情更为残酷,导致哺乳仔猪死亡率高且该病在多个亚洲国家经常转为地方病。尽管在过去四十年中,PEDV主要在亚洲猪肉生产国肆虐,但这种猪冠状病毒的威胁并未得到全球认可。然而,2013年初,随着高致病性(HP)-PEDV(分为基因型2b(G2b))在美国的突然爆发,PEDV的声誉发生了翻天覆地的变化,每年给美国生猪养殖企业造成了9-18亿美元的损失。美国出现的HP-G2b毒株传播到邻近国家,包括加拿大、墨西哥、哥伦比亚和秘鲁,并最终传播到东亚国家和欧洲,导致2013-2014年PEDV大流行。在韩国,HP-G2b PEDV于2013年底出现并席卷全国,包括济州岛,在全国范围内引起PED灾难。这篇综述的重点是韩国PEDV的现状和控制措施,结合疫苗接种、病毒和群体监测,以根除韩国流行性PED。3病因学3.1 PEDV结构和基因组PEDV属于网巢病毒目、冠状病毒科、α冠状病毒属的佩德科病毒亚属。PEDV有包膜,大致呈球形或多形性,直径为95-190 nm,包括长度为18-23nm的棒状三聚体化突起(图1A)。PEDV基因组和病毒粒子结构详见其他报道。该病毒具有约28kb 的单链正义RNA基因组,基因组末端包含5'-帽和 3'-多聚腺苷酸化尾部结构。PEDV基因组由七个典型的冠状病毒基因组成,包括开放阅读框(ORF)3,其排列顺序为5‘非翻译区(UTR)-ORF1a-ORF1b-S-ORF3-E-M-N-3’UTR(图1B)。ORF1a 和 1b 包含基因组的 5‘-近端三分之二,编码 16 种非结构蛋白 (nsp)。基因组 3’近端区域中剩余的ORF编码四种典型的冠状病毒结构蛋白和一个辅助基因 ORF3。这4种结构蛋白包括3个包膜相关的150-220 kDa糖基化刺突蛋白(S)、20-30 kDa膜(M)和7 kDa包膜(E)蛋白以及58 kDa核衣壳(N)蛋白,后者包裹基因组形成长螺旋螺旋结构。图1. 
PEDV结构和基因组组织的示意图。(A)PEDV结构模型。PEDV病毒粒子结构如左图所示。病毒粒子内的RNA基因组与核衣壳相互作用,(N)蛋白质形成长螺旋核糖核蛋白(RNP)复合物,该复合物被脂质双层包膜包围,其中嵌入刺突蛋白(S)、包膜(E)和膜蛋白(M);括号中表示每种结构蛋白的预测分子大小。一组相应的亚基因组 mRNA (sg mRNA;2-6),通过这些 mRNA 表达经典结构蛋白或非结构ORF3蛋白,通过这些右图还描绘了共末端不连续转录策略。(B)PEDV基因组RNA的结构。顶部显示了大约28kb的5'-封端和3'-多聚腺苷酸化基因组。病毒基因组的两侧是 UTR,并且是多顺反子的,携带复制酶ORF1a 和1b,然后是编码包膜(S、E 和 M)、N 和辅助 ORF3 蛋白的基因。ORF1a和1b表达通过−1 程序性RFS 产生两种已知的多蛋白(pp1a 和 pp1ab);这些多蛋白通过共翻译或翻译后加工成至少16种不同的非结构蛋白,命名为NSP1-16(底部)。UTR,未翻译区域;ORF,开放式阅读框;RFS,核糖体移码;A(n),多聚腺苷酸化尾部;PLP,木瓜蛋白酶样半胱氨酸蛋白酶;3CLpro,主要的3C样半胱氨酸蛋白酶;RdRp:RNA依赖性RNA聚合酶;HEL, 解旋酶;ExoN,3′→5′核酸外切酶;NendoU,尼多病毒尿苷酸特异性核糖核酸内切酶;2′OMT,核糖-2′-O-甲基转移酶;PEDV,猪流行性腹泻病毒。改编自Lee。3.2 PEDV基因型与其他CoV S蛋白一样,PEDVS糖蛋白(由S1和S2亚基组成)通过与细胞受体相互作用介导病毒进入和通过诱导中和抗体。基因突变,包括插入(insert,IN)和缺失(insert,DEL)以及S基因的重组,可驱动病毒致病性和组织或物种趋向性的改变。考虑到表型和基因型特征,S基因是研究PEDV遗传相关性(即基因分型)和分子流行病学的合适测序位点。因此,使用基于S基因的系统发育学,PEDV可以在基因上分为两种主要基因型和两种亚基因型:低致病性(LP)-G1(经典G1a和重组G1b)和HP-G2(局部流行性G2a和全球流行或大流行性G2b)。G1a毒株包括原型CV777和几种组织培养适应病毒,而G1b代表新型重组变异株,这些变异株首先在中国报道 ,然后在美国、韩国和多个欧洲国家被发现。G1b毒株起源于次要G1a病毒和主要亲本G2b病毒的自然同源重组。G2包括最近的现场分离株,分为两个亚组:G2a和G2b,前者覆盖了亚洲过去和当前的区域流行病,后者包括导致2013-2014年大流行的当代优势毒株以及目前在美洲和亚洲大陆的疫情。3.3 PEDV生命周期PEDV表现出有限的组织趋向性,与其他CoV一致,并且主要在猪小肠绒毛上皮细胞或肠上皮细胞中复制。尽管猪氨肽酶N(pAPN)长期以来一直被认为是PEDV的假定细胞受体 ,但目前已知情况并非如此,这表明存在参与病毒进入的真实细胞受体。此外,与细胞表面暴露的唾液酸结合通过促进病毒初始附着在细胞受体上,在PEDV感染中发挥作用。然而,PEDV的复制首先通过S蛋白与小肠绒毛状上皮细胞的未知表面受体结合,然后在内吞作用后通过pH非依赖性融合(病毒和质膜之间)或pH依赖性融合(病毒和内体膜之间)将病毒内化到靶细胞中(图3)未包被的病毒基因组释放到胞质溶胶中,通过不连续合成开始病毒mRNA生物合成和基因组复制。ORF1a和1b立即被翻译成复制酶多蛋白pp1a和pp1ab:前者通过最初的ORF1a翻译产生,而后者由ORF1b翻译表达,具体取决于C末端将pp1a延伸为pp1ab的-1核糖体移码,该移码C末端将pp1a延伸到pp1ab。这些pp1a和pp1ab通过内部病毒蛋白酶蛋白水解成熟,产生 16 种加工终产物,命名为 nsp1-16,其包括复制和转录复合物,该复合物首先使用正链基因组 RNA 参与负链RNA合成。产生全基因组和亚基因组(sg)长度的负链,并用于合成全长基因组 RNA 和3’-共末端sg mRNA。每个sg mRNA仅被翻译成由sg mRNA的 5′-most ORF编码的蛋白质。包膜S、E 和 M蛋白插入内质网(ER)并锚定在高尔基体中。N蛋白与新合成的基因组RNA相互作用,形成螺旋核糖核蛋白(RNP)复合物。子代病毒通过在内质网-高尔基体中间区室预形成的螺旋RNP出芽组装,然后通过光滑壁、含病毒粒子的囊泡与质膜的胞吐样融合释放。因此,PEDV感染会破坏目标肠细胞并损害肠上皮,导致严重的绒毛萎缩和空泡化。这些临床结果会干扰乳汁的有效消化和吸收,导致急性消化不良和吸收不良性水样腹泻,最终导致哺乳新生儿致命性脱水(图2)。图2. 
PEDV复制周期和发病机制概述。左图显示了病毒从初始进入(pH 依赖性或-非依赖性融合)并将病毒基因组释放到成熟病毒粒子的胞吐作用(光滑壁、含病毒粒子的囊泡与质膜融合)。右图描述了 PEDV 如何引起消化不良和吸收不良的水样腹泻,最终导致致命的脱水。RTC:复制和转录复合物;ER:内质网;ERGIC:ER-高尔基体中间室;PEDV:猪流行性腹泻病毒。改编自Lee。3.4 PEDV的传播PEDV可以感染任何年龄的猪;然而,疾病的严重程度和死亡率与受感染动物的年龄成反比。该病毒具有高度传播性,对出生后1周内的新生仔猪有致死性,发病率和死亡率高达100% 。口服的最小感染剂量(0.056 中位组织培养感染剂量感染5日龄哺乳猪所需的HP-G2b PEDV的[TCID50]/mL)远低于3周龄断奶仔猪(56 TCID 50/mL)。在断奶仔猪和育肥猪(包括后备母猪和母猪)中,在发病后第1周内,临床症状是自限性的,不如2周龄以下哺乳仔猪严重。表1总结了实验感染PEDV的不同日龄猪腹泻和粪便病毒脱落的发生和持续时间。表1.经口接种感染HP-G2b PEDV株不同日龄猪的临床体征及粪便病毒RNA脱落总结PEDV的主要传播途径是通过直接或间接接触临床或亚临床感染的猪或腹泻粪便和/或呕吐物的粪-口途径(图4)。PEDV 可以通过接触受污染的设备、车辆(运输猪或尸体、运送饲料或运输粪便)、人类(穿着受污染的工作服的农场员工或访客,包括养猪从业者或拖车司机)或野生动物(包括鸟类、流浪猫和老鼠)侵入农场。其他受污染的物资,如饲料或饲料添加剂成分(如喷雾干燥的猪血浆),可能是病毒的潜在传播源。PEDV也可以通过母猪的乳汁垂直传播给后代。在精液中也会出现PEDV脱落,这表明病毒可通过受污染的精液在猪群中传播。此外,PEDV颗粒被雾化后可通过粪鼻途径进行空气传播,这些颗粒在特定条件下对哺乳仔猪具有传染性。在PED流行之后,由于猪场管理不当(例如消毒不当和生物安全松懈),病毒可能会消退,留在分娩舍或在断奶至育肥场(WTF)持续存在(图 4)。在分娩到育肥(FTF)猪场中,如果一旦感染,PEDV可以传播到WTF设施并污染WTF设施,但存活的仔猪会转移到保育舍和生长育肥猪舍,这会产生地方性PED,其中病毒通过污染-传播-感染循环继续在受影响的猪群中传播。在PED流行时,如果新生仔猪由于多种原因(例如疫苗接种不当、母猪替代或泌乳缺陷(例如乳腺炎或无乳症))而无法从母猪那里获得足够水平的母体保护性免疫力,则在猪场内传播的常驻病毒将感染这些抗体缺失的易感仔猪,并随着大量脱落而繁殖该病毒是复发的来源,最终导致新生仔猪死亡率显著增加。PEDV的感染在断奶和生长育肥猪中没有症状;然而,病毒可以在其粪便中传播,污染猪舍并以亚临床方式复制。因此,PEDV可以通过污染-传播-感染循环,继续亚临床感染地方性猪场的生长猪和育肥猪,这增加了后备母猪在受污染猪舍驯化期间感染病毒的机会。一旦被感染,无症状的后备母猪可以充当“特洛伊猪”,它们没有临床症状,但其粪便中会携带病毒,这些病毒将成为隐性且不可战胜的携带者,将病毒传播到产房。因此,由“特洛伊猪”携带的PEDV可以传播并感染脆弱的新生仔猪,从而成为反复暴发的源头,在临床上影响被动免疫力低下或无被动免疫的哺乳猪。因此,加强后备母猪的感染监测和疫苗接种计划是控制地方性PEDV感染猪群的关键措施。图3.猪流行性腹泻病毒在流行性和地方性PED病例中的传播来源和途径。L/K/K,异源初免-加强免疫方案,包括一剂初免接种G2b口服活疫苗(L)和两剂加强剂 G2b灭活(K)疫苗。PED,猪流行性腹泻。4韩国的PEDV流行病学4.4.1 PEDV从经典G1a到G2a的基因型转变一项回顾性研究表明,早在1987年,韩国就存在PEDV[76],尽管国内G1a PEDV的出现最早是在1992年。此后,该病毒在国内猪群中流行,因为在2007年检测的猪场中,有90%的猪场检测到PEDV传播(即断奶和育肥猪血清阳性)。然而,2010年使用国内分离株进行的一项遗传多样性研究表明,2007-2009 年期间鉴定的主要 PEDV 的分离株被归类为与G1a亚群在系统发育上关系较远的G2a菌株。与G1a原型CV777菌株相比, G2a PEDV菌株包含一个遗传特征,即S插入-缺失(S INDEL)分别在55/56、135/136和160–161位包含三方不连续的4-1-2 IN-IN-DEL。韩国在2010年之前缺乏遗传和分子流行病学数据,这意味着G2a毒株何时或如何出现尚不清楚。然而,在20世纪90年代或21世纪初,G1a PEDV 似乎是初始显性基因型,随后,从G1a到G2a的基因型 
的转变可能发生在2000年代中后期。这种基因型转变事件可能反映了在当时的韩国,在接种疫苗的猪场中反复发生着PEDV的情况,这引发了对自21世纪初以来上市的基于G1a国产分离株的PED疫苗的保护效力的质疑。4.2 HP-G2b PEDV的出现与演变2010-2011年,韩国PEDV疫情状况发生了巨大变化,当时全国范围内出现了灾难性的口蹄疫疫情,导致在两年内大规模扑杀了300多万头猪(占国内生猪总数的三分之一)。2010-2011年口蹄疫疫情期间,韩国PEDV的流行率很低,仅零星暴发,PEDV疫情持续到2013年底。然而,韩国并没有逃脱被在2013-2014年期间在美国暴发的HP-G2b PEDV株感染的命运,并从2013年11月开始在全国范围内爆发严重疫情。HP-G2b PEDV席卷了韩国大陆近50%的养猪场,4个月后(2014年3月),该病毒袭击了济州省(也称为济州岛),该省自2004年以来一直保持无PEDV状态。由于HP-G2b PEDV入侵的灾难性影响,据推测,在2013-2014年疫情期间,韩国养猪业损失超过了国内生猪数量的10%,估计有100万头仔猪。虽然HP-G2b PEDV入侵朝鲜半岛的源头尚未确定,但在2013年4月美国出现前所未有的猪源性腹泻病毒期间或之后,进口种猪或饲料是导致PEDV入侵韩国可能的传染源。然而,回顾性研究从 2012年11月和2013年5月采集的腹泻样本中独立鉴定出两株具有S INDEL遗传特征的韩国HP-G2b分离株,表明第二个基因型在2010年初从G2a转移到G2b。因此,在PEDV引入美国之前,HP-G2b PEDV就已经作为次要谱系存在于韩国,随后的有利环境可能有助于G2b毒株的处于主导地位。到目前为止,HP-G2b PEDV感染仍未得到控制,导致全国范围内全年出现各种规模的疫情,给国内养猪业带来了相当大的经济成本(图5)。此外,大量受PED影响的猪场在一两年内反复暴发疫情。这种情况表明,PEDV在农场中已经形成地方性的持续性存在,从而使疾病控制变得困难,并加剧了经济损失。G2b 继续独立进化,并经历具有特定地理聚类的遗传多样性,与HP-G2b韩国原型毒株(KNU-141112)的氨基酸(aa)同源性从96.3%到99.4%不等。特别是一些国产HP-G2b菌株(除了一个在S1/S2连接区具有200-aaΔ的分离株)在S1的N端结构域(NTD)中具有较小的IN或/和DEL,这与日本、台湾和美国报道的在S1 NTD中具有大的194-216-aaΔ的G2b变体不同。根据地理起源,国内分离株在系统发育上分为六个分离分支,包括全国(NW分支,具有1.3%-1.9%的aa变异)、庆南省和全南省(KJ分支,具有2.2%-2.9%的aa变异性)、忠清省和庆北省(CK分支,具有0.6%-1.7%的aa变异率)、济州-哈利姆省(JH分支,具有1.8%-2.6%的aa变异度),济州-大田(JD分支具有1.6%-2.7%的aa变异),并且未在地理上分类(NC分支具有0.9%-3.7%的aa变化)(图5A)。图4. 
2013年至2023年(截至6月)韩国的 PED 病例数。(A) 自 2013 年以来每年发生 PED 的次数。韩国九个省份(包括位于大陆西南部的济州岛)的热图(上图)显示了每年(2013 年至 2023 年 6 月)各省 PED 病例的全国分布情况,图例从红色到白色,从多到少。粗(天蓝色)箭头(下面板)代表表明G2b猪流行性腹泻病毒疫苗在国内市场的发布年份的时间表。(B) 自 2013 年以来每月 PED 累计病例数。折线图显示了按月划分的累计病例数,表明季节性 PED(通常发生在每年 11 月至 3 月)转变为 PED 的全年发病率的现象。KV:灭活疫苗;LAV:减毒活疫苗;PED:猪流行性腹泻。JH和JD这两个济州分支分别在相应的地理起源地济州岛(省)的翰林和大井地区普遍存在,但在韩国大陆尚未发现。同样,大陆分支 NW、KJ 和 CK 直到 2022 年初才进入济州岛,尽管它们已经跨越了大陆的不同地理起源。2022年2月下旬至3月上旬,韩国南部和济州岛同时发生大规模暴发PEDV。遗传和系统发育分析证实了两个CK变异的出现,即CK.1(0.9%–1.1%aa变异)和CK.2 (1.1%–1.4%aa变异)分支(图6B)。进一步的时空调查表明,2021年初左右首次出现在庆南地区(朝鲜半岛东南部地区)的CK.1和CK.2分支几乎同时出现,通过不明来源传入猪群密度低的济州岛东北部地区,随后传播到猪密度高的翰林区(济州岛西北部)。从那时起,这些CK.1和CK.2分支包括与KNU-141112相比变异最小的流行毒株,现已在济州岛占据主导地位, 并进一步扩大了其在整个大陆的分布。图5.基于2022年(A)和(B)之前全球鉴定的PEDV菌株的完整S基因的系统发育分析。有四种基因型,G1a(红色)、G1b(蓝色)、G2a(绿色)和G2b(紫色)。蓝色 (G1b) 分支上的不同颜色三角形表示在韩国发现的重组 LP-G1b PEDV 菌株。深橙色三角形表示 2018-2019 年 G1b 毒株,靛蓝色三角形表示 2017 年 G1b 毒株,绿色三角形表示 2014 年发现的韩国 G1b 原型毒株。紫色 (G2b) 分支上的不同颜色点表示在韩国全国范围内发现的 HP-G2b PEDV 菌株,这些菌株聚类为六个地理分离分支(2022 年之前),同时分为八个分支和两个亚分支(2022 年之后):NW 分支(橙色)、KJ(深红色)、CK(浅绿色)、JH(天蓝色)、JD(霓虹灯)和未地理分类的 NC(紫色)分支。导致2022年大规模爆发的CK变种,即CK.1和CK.2分支,分别用红点和蓝点表示。黑点表示HP-G2b韩国原型菌株(KNU-141112)。PEDV:猪流行性腹泻病毒。4.3 LP-G1b PEDV的出现与演变先前在中国和美国报道的新型重组LP-G1b PEDV株在2014年3月在韩国首次报道,随后在几个欧洲国家报道。G1b PEDV毒株起源于2010年代初作为次要亲本的G1a和作为主要亲本的G2b之间的重组事件。因此,S基因具有典型的遗传和系统发育特征:与经典的G1a CV777菌株相比,S基因大小相同,没有S INDEL,并且基于S基因或全基因组的系统发育分类(G1b或G2)不同。尽管我们不能排除G1b病毒在首次发现之前在韩国存在的可能性,但美国和韩国G1b毒株之间的密切遗传相关性表明,每个G1b和G2b祖先都可能同时从美国引入韩国。与占主导地位的HP-G2b毒株不同,近年来,LP-G1b病毒很少在大陆引起小规模的、经济损失较小的疫情。有趣的是,2017年,通过将S1的NTD从现有G1b(次要)重组到国内流行的G2b(主要)的骨架中,然后进行遗传漂移,出现了亚群间G1b变体(图5)。这项研究进一步表明,S1基因的NTD是不同PEDV基因型之间自然重组的共同靶点,从而提供了一些可能使病毒逃避宿主免疫防御的优势。4.4 未来方向(基因型转变或出现变异)在韩国,G1b和G2b毒株在过去十年中一直在传播:前者是通过宿主环境中的重组进化而来的,而后者是大多数国内暴发的原因,通过基因突变继续进化,例如S1 
NTD中的IN或/和DEL。由于病毒被认为经历了一个进化过程来积累突变或/和重组,以确保病毒在该区域的适应性,因此新的基因型转变的出现或可能来自国内分支的变异的出现将是不可避免的。此外,这些情况可能比预期更早到来,造成局部或全球疫情。因此,进行主动监测和监视(MoS)对于寻找迄今尚未确定的具有独特抗原和致病特性的PEDV变异(或其他基因型)至关重要,这些变异可能通过遗传性突变(例如非沉默突变,包括IN或/和DEL)或基因转移(例如重组事件)在局部或全球范围内出现,以预测和准备下一次流行或大流行。5韩国的PEDV疫苗仔猪出生时患有丙种球蛋白缺乏症,在断奶前存在免疫缺陷,因为猪胎盘的上皮层性质导致胎儿中抗体产生不足,并阻止母体免疫球蛋白通过胎盘转移至后代。因此,新生仔猪不能及时建立保护性免疫力来对抗各种感染,只能完全依靠在生命早期通过摄入初乳和含有免疫球蛋白的乳汁以获取母体抗体,来进行疾病保护。IgG被认为是初乳和血清中最普遍的抗体,可预防全身感染,而分泌型IgA(secretular IgA,sIgA)在乳汁中占主导地位,在整个哺乳期持续存在,并在局部(黏膜)防御系统中发挥作用。另一种破坏性肠道冠状病毒TGEV的疫苗开发的早期进展为肠道疾病提供了乳源性免疫和疫苗接种策略的基本思路。从过去的TGEV疫苗研究中得出的一个关键发现是,仔猪的保护率与母乳中大量的sIgA抗体(即产乳免疫)有关,而与血清和初乳中的IgG抗体无关:sIgA在自然感染或口服接种活TGEV的母猪中产生,而IgG在用灭活TGEV非肠道免疫的母猪中产生。同样,肠外PEDV疫苗接种的母猪也会特异性免疫反应,但不能对其仔猪提供完全保护,这在小猪模型中是可重复的。此外,病毒在母猪肠道中复制的载量和程度可能有助于在产乳分泌物(即初乳和奶水)中产生充足水平的IgA和中和抗体。因此,疫苗免疫途径可能是诱导母猪粘膜免疫的关键因素。因此,被动产乳免疫仍然是保护新生乳猪免受肠道冠状病毒(包括PEDV)感染的最有前途和最有效的方案,并且依赖于肠道-哺乳动物y-sIgA轴(IgA免疫细胞从肠道运输到乳腺)。尽管对PEDV的保护主要取决于仔猪肠粘膜中是否存在sIgA抗体,但通过在接种疫苗的母猪的初乳和乳汁中保留高滴度的IgA和针对PEDV的中和抗体,可以维持疫苗的效力。因此,PEDV疫苗接种的核心策略必须包括:1)通过口服疫苗刺激最佳粘膜免疫反应,在母猪的乳汁中诱导相当水平的sIgA抗体,从而为仔猪肠道中的靶肠细胞提供局部被动保护;2) 在出生时将优质的被动母乳免疫转移到哺乳仔猪;3)在整个护理期间维持新生仔猪的sIgA抗体以及中和抗体的保护水平。为了在韩国实现上述策略,自21世纪初以来,在怀孕母猪和后备母猪的PEDV疫苗接种中广泛应用了异源初免-加强免疫方案,即L/K/K,在产仔或分娩前间隔2-3周接种一剂活疫苗(初次免疫)和两剂灭活疫苗(加强免疫)。 5.1 G1a(第一代)PEDV疫苗在20世纪90年代,由于每年都会大规模发生PEDV疫情,韩国政府集中精力开发PEDV疫苗。第一种PEDV灭活疫苗是使用韩国G1a PEDV SM98-1株研制的,并于2004年在国内市场上市。随后,两个韩国G1a PEDV株SM98-1和DR-13在Vero细胞中培养,通过连续传代独立减毒:前者作为肠外减毒商业化活疫苗(LAV),而后者可口服LAV。此外,使用细胞培养减毒的日本G1a 83P-5株肠外LAV(P-5V)在韩国进口并上市。这些国产和进口的G1a疫苗在独立试验中为仔猪提供了保护,它们在全国范围内的实施后导致PEDV的流行率与往年相比有所下降。然而,尽管在全国范围内开展了广泛疫苗接种,但PEDV可在接种疫苗的畜群中的持续存在引发了一场关于其有效性及其在现场使用的利弊问题的辩论。最后,韩国爆发的HP-G2b型PEDV结束了关于上述G1a型PEDV疫苗疗效的争论;第一代G1a疫苗现在韩国几乎没有继续使用,因为它们针对异源G2b PEDV毒株仅提供部分保护。5.2 G2b(第二代)PEDV灭活疫苗G1a PEDV疫苗的有效性不足在一定程度上是可以预料到的,因为在2013-2014年大流行后,韩国占主导地位的田间菌株与现有的G1a疫苗相比出现了基因和抗原变异。因此,G2b PEDV毒株被认为是开发下一代疫苗的种源毒株。尽管由于难以在细胞培养中获得现场分离株,G2b PEDV疫苗的研发受到阻碍,但2015年底和2017年,国内疫苗制造商独立上市了三种使用不同G2b PEDV分离株第二代灭活灭活疫苗(其中一种是美国株和另外两种是韩国株)。尽管供应了新的G2b灭活疫苗,但事实证明,当地的PEDV疫情是不可阻挡的。2018年PEDV发生率不降反增,自 2014 年以来全国官方报告的 PED 
病例数最高(https://www.kahis.go.kr/ home/lkntscrinfo/selectLkntsOccrrnc.do)(图4)。这种相互矛盾的状态可能反映了同源的分娩前初免-加强免疫方案[单独接种2剂或3剂肠外G2b灭活疫苗(即K/K或K/K/K)]在实现上述核心策略(即诱导和维持保护性黏膜免疫)和降低疾病发病率(即PEDV粪便脱落)方面的局限性。在这一时期,为了弥补这种同源 K/K/(K)方案的缺点,G1a(第1代)口服或肠外LAV或口服主动感染(返饲)用于在国内实施传统的异源 L/K/K 策略。然而,由于对G1a疫苗的极度不信任,改良的异源初免-加强方案和返饲和G2b灭活疫苗(即返饲/K/K)或单独返饲被大多采用而作为控制 PED 的替代方案。虽然口服暴露于自体PEDV(即从感染PEDV的新生仔猪身上采集的粪便或切碎的肠道组织)可能有益,但由于自体病毒材料中感染性PEDV的数量无法定量且特异性不强,这可能无法诱导完全的母体产乳免疫并防止PEDV脱落。此外,在接受这种方法之前,我们应该考虑的是,如果不加以控制,这可能会成为病毒传播到附近养猪场或受影响农场的地方性PED的潜在来源,导致粪便或肠道内容物中存在的其他病毒和细菌病原体在猪群中广泛传播,加速PEDV的进化和多样化,以及PEDV变体或重组新型冠状病毒的出现,它们可以跨越物种屏障感染其他动物或人类。5.3 G2b(第二代)PEDV口服活疫苗目前对影响乳源性免疫诱导的因素(如注射剂量、疫苗株、后备母猪/母猪的年龄或胎次)以及其他变量的认知仍然有限。然而,多项研究证据强调了初次免疫的口服途径,即使用活疫苗或通过有控制的返饲来有效的启动、增强和维持母猪粘膜免疫,为后代提供局部被动免疫保护。因此,需要使用HP-G2b毒株开发新型的口服LAV,以克服G1a活疫苗的缺陷,并取代粗糙的返饲做法。通过努力的协同研发,全球首个G2b口服LAV于2020年底推出。在实验和现场条件下被证明是安全且具有免疫原性的。此外,结合G2b口服活疫苗和肠外灭活疫苗的异源分娩前G2b L/K/K免疫方案,通过完成上述PEDV疫苗接种的核心策略,为哺乳期新生仔猪提供了针对HP-G2b PEDV的完全被动乳源保护。然而,为了最大限度地提高疫苗有效性,如果疫苗接种与其他控制措施相结合,包括严格的生物安全/消毒措施、定期分子(病毒)和血清学(宿主)监测以及最佳的猪场管理和卫生管理,则分娩前口服 L/K/K 计划将是预防和/或控制 PEDV 的最有利实用工具。5.4 未来方向(快速响应和适用的疫苗平台)尽管韩国的HP-G2b毒株具有遗传多样性(包括8个可能的分支在内的2个亚分支),但其抗原性保持不变,这意味着G2b疫苗对导致近期暴发的同源显性毒株仍然有效。然而,PEDV的新基因型或变异将不可避免地出现,这些基因型或变异可以逃避G2b疫苗的保护,这可能需要更换现有的G2b疫苗。考虑到基于G2b的新型灭活疫苗(2015年底和2017年)和LAV(2020年底)在国内上市的时间表,若想快速开发针对潜在变异株的新疫苗,应克服病毒分离和减毒过程中的艰巨挑战。现在,通过使用基于反向遗传学的PEDV平台,可以克服这些障碍。该疫苗平台被证明是携带未来任何流行毒株的异源S基因的合理支柱,可以作为快速生成新疫苗的模板,并为未来的PEDV疫苗研究铺平道路。6PEDV的控制策略6.1 
PEDV风险评估PEDV一旦被引入猪群,可能会在FTF猪场中持续存在,因此,该疾病的流行特性使在猪FTF生产系统中的PED控制更具挑战性。此外,由于猪的连续流动性和环境污染水平,从FTF猪群中清除PED是一项复杂的任务。因此,为了防止PEDV重复感染猪场,有必要监测PED控制策略中的四个主要措施,包括:1)生物安全;2)疫苗接种;3)快速诊断和主动监测;4)猪群管理(生产管理和卫生)。当怀疑PED流行时,应从四个主要措施开始协调控制,打破疾病在猪场的传播链。包括:1)加强生物安全措施和消毒措施;2)对猪群进行病毒和血清学检测,检查分娩猪群的免疫水平,并识别暴露于PEDV的生长猪或育肥猪;3)必要时为母猪和后备母猪接种疫苗;4)改善猪场管理。为了加强这些策略,建立了PED风险评估,以确定猪场是否开始流行并面临复发风险(或在未感染PEDV的猪场中发生),并根据猪场状况提供定制好干预措施。因此,进行猪场评估的目标是通过以下方式收集有关病毒、畜群(宿主)和猪场(环境)的信息:1)积极的生物安全监测,以了解生产者或员工遵守生物安全协议的程度;2)在疫情暴发时进行快速病毒筛查,包括基因诊断,以获取该病毒是从猪场外新引入的还是在最初爆发后在猪场内持续存在的;3)对分娩猪群进行血清学筛查,以获取有关母猪及其后代的猪群免疫力的信息;4)WTF猪群的病毒和血清学筛查,以获取病毒在猪场内传播的信息。对各个猪场进行风险评估,以评估其自身的生物安全性能、群体免疫力和病毒传播等级,具体如下:一)生物安全监测控制流行病或地方性PED的最关键措施之一是严格的生物安全,必须对农场内外追踪到的任何东西或任何人采取严格的生物安全措施,通过尽量减少与任何受病毒污染的材料或人员的接触,降低PEDV进入猪群或病毒在农场内或农场之间或地区之间传播的风险。因此,主观监测通过面对面交流来审查和评估内部和外部生物安全协议,评分范围为0-5(0=最差;5=最佳)。生物安全问卷分别由院子、员工和猪舍部分的13、7和6份清单组成。每个问题都对猪场如何完成三个部分的所有生物安全实践进行评分,每个部分的平均得分是独立计算的。二)群体免疫评估由于被动泌乳免疫是新生仔猪对抗PEDV的关键保护策略,因此确定母猪群中是否存在PEDV特异性抗体并不重要。相反,监测母猪体内PEDV特异性抗体的稳定性和数量(或滴度)对于监测母猪群体免疫水平是必要的,母猪群体免疫力可以在泌乳期间为新生仔猪提供被动保护。因此,建议使用基于 S1 的间接酶联免疫吸附试验 (S1-iELISA) 和/或病毒中和试验 (VNT) 方法评估乳汁中 IgA 和中和抗体的保护能力,以及母猪(包括低胎次和高胎次)和18-20日龄仔猪血清中的中和抗体 (NAb) 滴度。S1-iELSIA 测量初乳和奶水中 IgA 抗体的稳定性,而 VNT 可量化 NAb 在乳汁和血清样品中的稳定性。根据母猪初乳IgA和NAb的稳定性以及母猪和哺乳仔猪血清NAb的稳定性特征,以0-5评分量表(0 = 最差;5 = 最好)估计群体免疫水平(表2)。表2.使用0-5 评分量表系统对母猪群体免疫概况进行评分三)病毒循环(地方性PED)状况WTF群中PEDV或抗体或两者均存在,可用于确定病毒是否在农场内传播。实时定量RT-PCR(rRT-PCR)可以使用粪便(单个)和泥浆(围栏)样本检测和定量PEDV RNA 。同时,使用VNT对保育猪和生长猪进行血清学检查,以确定曾经或最近接触过PEDV的猪。病毒循环状态是根据个体粪便和围栏浆液粪便样本中是否存在PEDV和/或断奶和生长肥育猪中是否有PEDV血清转阳来评估的,评分为0-5(0=最佳;5=最差)(表3)。表3.使用0–5评分系统评估猪场PEDV循环(地方性PED)概况四)五边形轮廓系统使用从生物安全问卷中独立获得的三个部分的评估分数(0=最差;5=最佳)、群体免疫水平(0=最低;5=最好)和地方性PED(病毒循环)状态(5=最差;0=最佳),风险评估的结果可以通过五边形轮廓系统可视化,用五个参数(院子、员工和栏舍生物安全、群体免疫和病毒传播)的单独得分用于构建风险五边形的五个分支。如果猪场没有PEDV,则风险五边形图会呈现出PEDV复发或发生的风险分别为低、中和高三类,如图6所示。图6.使用风险五边形剖面图确定受 PED 影响的猪场(或无 PED 猪场的发生)中猪流行性腹泻病毒复发的潜在风险的图解示例。风险五边形系统评估了五个参数的水平:院子生物安全、员工生物安全、猪舍生物安全、母猪免疫和病毒传播(地方性感染)。每个因素的得分为 0-5,由相对于五边形总面积的阴影区域表示。第一张黄色五边形图描绘了一个标准模型,没有复发或发生 PED 的风险。绿色五边形图表示 PED 
复发无风险或风险较低(秒)。蓝色五边形图表示由于不稳定的群体免疫力(第三)或病毒传播(第四)导致PED复发的中等风险。红色五边形图表示由于生物安全性差、群体免疫力不稳定和病毒传播(第五和第六)导致PED爆发的高风险。PED,猪流行性腹泻。6.2 量身定制的干预措施根据风险评估结果,通过识别可能影响猪场PEDV复发的危险因素,评估的猪群可分为非地方性(WTF 猪群中病毒阳性和/或血清阴性)和地方性(WTF 猪群中病毒阳性和/或血清阳性)受影响的猪场。主要因素可分为四类,包括母猪免疫力不稳定、生物安全性差、产房病毒污染和WTF猪舍病毒传播。一旦确认了猪场的弱点,就可以根据流行状况量身定制防控措施,以消除和改善相应的危险因素,从而在FTF农场建立稳定的群体免疫和消除PEDV。其中包括:1)严格的内部和外部生物安全条件(先决条件);2) 初免-加强产前口服L/K/K疫苗接种和母猪及其后代保护性免疫的纵向监测;3)安全的清洁/消毒/消毒措施,与全进全出管理并行,如有必要,在产房中对猪群(粪便)和环境(粪便)中进行PEDV的纵向监控;4)WTF猪舍的消毒和后备母猪管理,包括感染监测和疫苗接种,结合断奶仔猪和生长育肥猪的猪群(粪便)和环境(泥浆)中的纵向PEDV监控和血清学监测。由于PEDV在环境(泥浆)中的低剂量感染和高存活率(在−20°C至4°C环境中存活28天),因此从受污染的猪舍中消除该病毒是一个重要的挑战。因此,应通过定期收集和检测WTF猪舍的泥浆样本来追踪和清除环境中的PEDV。图7展示了一个决策模型,描述了在非地方性或地方性感染的FTF农场中感染PEDV后采取定制控制措施的基本原理。图7.针对非地方性和地方性影响猪场的定制PED控制策略和措施图。在流行性PED发生时,建议受影响的猪场进行复发风险评估,以确定必须管理的猪场弱点,以控制和防止再次感染。导致FTF猪舍PED复发的主要危险因素包括:1)母猪免疫力不稳定,2)生物安全性差,3)产房中的病毒污染,4)WTF猪舍中的病毒传播。实施基于四大支柱的协同控制策略,以增强畜群免疫稳定性和/或消除病毒,以根除FTF畜群的PEDV。L(口服)/K/K,口服活疫苗(一针)和灭活灭活疫苗(两针加强针)的异源初免-加强免疫方案;AI-AO,全进全出;WTF,断奶至育肥;PED, 猪流行性腹泻;FTF,从分娩到育肥。改编自Jang等。在2021-2022年期间,我们在10个有G2b PEDV爆发史的FTF农场中使用五角大楼概况系统进行了PED风险评估。所有猪场都实施了以下免疫计划之一:同源 K/K 或 K/K/K 制度(G2b 灭活单独接种疫苗)、改良的异源反馈/K/K 方案(反馈和 G2b 灭活 疫苗)或单独反馈。如前所述,每隔3-4个月收集一次猪个体样本(粪便、血清和初乳)和围栏旁样本(泥浆)。粪便和泥浆样本分别从FTF站点的腹泻或非腹泻猪和粪坑中收集。从不同年龄(20日龄、40日龄、70日龄、100日龄、130日龄和160日龄)的猪中以及一胎母猪和二胎或更多胎次母猪中抽血。初乳是在初产母猪和经产母猪分娩当天采集。粪便和泥浆样本采用rRT-PCR检测PEDV RNA,而血清和初乳样本则使用VNT或/和S1-iELISA检测,如前所述。外部和内部生物安全检查表由猪场兽医进行评估。每个猪场的风险五边形系统是根据上述因素的评估分数创建的,表明所有猪场都存在中度或高风险的PEDV复发风险,因为存在生物安全性差,母猪免疫力不稳定,初乳(或血清)中IgA和/或NAb水平低,和/或WTF猪舍中的病毒传播(图9)。在风险评估之后,通过实施以下定制的控制措施,确定并改善了每个猪场的复发弱点,以控制 PEDV:1)改善内部和外部生物安全的漏洞,加强生物安全管理; 2) 初免-加强分娩前口服 L/K/K 疫苗接种,然后进行纵向免疫监测,稳定了群体保护免疫力,具有高持续水平初乳和血清中的IgA和NAb; 3)加强连续消毒措施,同时对粪便和泥浆进行纵向PEDV监控,以及对断奶和生长育肥猪的血清进行平行监测,减少或消除病毒传播(图8)。图8. 
2021-2022年期间,在有G2b猪流行性腹泻病毒暴发史的10个分娩到育肥猪场(猪场 A-J)实施定制控制措施之前和之后的PED风险五边形概况图示。初步风险评估(之前)表明,所有猪场的PED复发风险均为中等(蓝色)或高(红色)。第二次评估(之后)显示,所有猪场的PED复发风险均有所改善,因此被归类为中(蓝色)或低(绿色)。PED,猪流行性腹泻。7讨论本报告概述了韩国流行性PEDV持续存在的现状和控制措施。自2013-2014年PED大流行以来,HP-G2b PEDV已成为该国的主要毒株,并继续进化,在该领域创造了小规模的遗传多样性。直到最近(2022年初),国内HP-G2b菌株在基因型上被分为六个地理特异性的分支,包括大陆的三个分支和济州岛的两个分支,现在被分为八个分支,其中有两个亚支CK.1和CK.2,几乎同时出现在大陆和济州岛内。尽管直到最近,HP-G2b PEDV缺乏有效的口服疫苗仍是四大支柱控制策略中缺失的部分,但口服型G2b LAV在国内市场的推广使我们能够完成四大支柱(生物安全、疫苗接种、诊断和监控以及农场管理)。基于第二代G2b疫苗的口服 L/K/K 疫苗接种可增强并维持母猪免疫力(或群体免疫稳定)的强度和持续时间,以超过猪群或环境中的病毒载量。此外,进行持续有效的监控对于追踪新的PEDV基因型或变体是必要的,这些基因型或变体可以逃避当前疫苗的效力覆盖范围,并为它们占主导地位并导致局部或全球暴发做好准备。随着流行性PED的出现,相当多的FTF猪场可能会受到地方性的影响,从而增加了全年复发的可能性,并为病毒传播到邻近农场甚至更远的农场提供了传播源。在受影响的FTF猪舍中,WTF猪舍中的病毒可以作为病毒循环和再感染的孵化器,因此,必须进行净化清除,否则猪场将受到地方性PED的影响,并在污染-传播-感染循环中面临重复感染的风险。因此,在受污染的WTF猪群中消除病毒是根除地方性PED的主要任务。因为PEDV在泥浆中可以持续存活一个多月,因此对WTF猪舍的泥浆样本进行联合消毒和病毒监测是从环境中消除病毒的不可或缺的措施。当病毒在WTF猪舍中循环时,病毒可能会在后备母驯化过程中悄悄感染后备母猪,然后这些母猪会在粪便中长期亚临床传播PEDV。因此,合适的后备母猪管理计划对于控制感染很重要,因为无症状感染的后备母猪可以充当“特洛伊木马猪”,将病毒传播到产房,以导致PED的反复爆发。这篇综述强调了在地方性感染猪场场中稳定群体免疫和消除PEDV所需的艰苦和合作工作的重要性。本研究还强调了研究人员、兽医、生产者、养猪业专家、生产商协会和当局之间综合协调合作的重要性,以最大限度地发挥预防策略的效果,战胜地方性PED。风险评估和协调干预工具有望帮助制定区域或国家预防和控制政策,并为在其他国家制定地方性PED的定制控制策略提供见解。参考资料:Jang G, Lee D, Shin S, Lim J, Won H, Eo Y, Kim CH, Lee C. Porcine epidemic diarrhea virus: an update overview of virus epidemiology, vaccines, and control strategies in South Korea. J Vet Sci. 2023 Jul;24(4):e58. doi: 10.4142/jvs.23090. 
PMID: 37532301; PMCID: PMC10404706.

Copyright notice: All articles reposted on this official account are shared to convey more information, with sources and authors clearly credited. Media outlets or individuals who do not wish their content to be reposted may contact us (cbplib@163.com), and we will remove it immediately. All articles represent only the authors' views and not the position of this site.
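The farm-level decision logic described in section 6.2 and Figure 7 (classify the farm as endemic or non-endemic, grade its recurrence risk, then pick measures for each weak point) can be sketched as a small rule-based classifier. This is a minimal sketch under stated assumptions, not the authors' scoring system: the source does not publish the pentagon scoring rules, so the `FarmAssessment` fields, the rule that any virus or antibody detection in WTF pigs marks a farm as endemic, and the color thresholds in `risk_level` are hypothetical. The measure texts paraphrase the four measures listed in the article.

```python
from dataclasses import dataclass

# Paraphrase of the four tailored measures listed in section 6.2.
MEASURES = {
    "poor_biosecurity": "Tighten internal and external biosecurity",
    "unstable_sow_immunity": "Prime-boost prepartum oral L/K/K vaccination with longitudinal immune monitoring",
    "farrowing_contamination": "Cleaning/disinfection with AI-AO management and PEDV monitoring in the farrowing house",
    "wtf_circulation": "WTF barn disinfection and gilt management with feces/slurry PEDV monitoring and serology",
}


@dataclass
class FarmAssessment:
    """Hypothetical inputs for one FTF farm; field names are illustrative only."""
    wtf_virus_positive: bool      # rRT-PCR on WTF feces/slurry detects PEDV RNA
    wtf_seropositive: bool        # VNT and/or S1-iELISA positive in WTF sera
    poor_biosecurity: bool        # from the biosecurity checklists
    unstable_sow_immunity: bool   # e.g. low IgA/NAb in colostrum or serum
    farrowing_contamination: bool


def endemic_status(farm: FarmAssessment) -> str:
    # Assumption: any virus or antibody detection in WTF pigs means "endemic".
    if farm.wtf_virus_positive or farm.wtf_seropositive:
        return "endemic"
    return "non-endemic"


def weaknesses(farm: FarmAssessment) -> list[str]:
    found = []
    if farm.poor_biosecurity:
        found.append("poor_biosecurity")
    if farm.unstable_sow_immunity:
        found.append("unstable_sow_immunity")
    if farm.farrowing_contamination:
        found.append("farrowing_contamination")
    # Virus detection in WTF pigs is taken as evidence of viral circulation.
    if farm.wtf_virus_positive:
        found.append("wtf_circulation")
    return found


def risk_level(farm: FarmAssessment) -> str:
    # Hypothetical thresholds loosely following the pentagon colors.
    found = weaknesses(farm)
    if not found:
        return "low (green)"
    if "poor_biosecurity" in found and len(found) > 1:
        return "high (red)"
    return "moderate (blue)"


def tailored_plan(farm: FarmAssessment) -> list[str]:
    # Biosecurity is described as a prerequisite, so it is always listed first.
    plan = [MEASURES["poor_biosecurity"]]
    for factor in weaknesses(farm):
        if factor != "poor_biosecurity":
            plan.append(MEASURES[factor])
    return plan


farm = FarmAssessment(True, True, True, True, False)
print(endemic_status(farm), risk_level(farm), len(tailored_plan(farm)))
```

Keeping the four factors as named flags mirrors the article's framing that each weak point has its own countermeasure; a real implementation would replace the booleans with the scored pentagon axes.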

Vaccine, In-licensing/out-licensing

2024-01-05

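Abstract: Piglet diarrhea in the farrowing house caused by PEDV is one of the three major infectious diseases currently threatening the swine industry (ASF, PRRS, PED) and causes huge economic losses. Vaccination remains the main means of preventing and controlling PEDV. To improve vaccine protection, our research group substantially improved the PEDV vaccine in terms of virus content, adjuvant, and other aspects. This article summarizes results from immunizing pregnant sows with the improved vaccine, whose newborn piglets acquire passive protection through the sows' milk, and shows that the vaccine offers a more effective measure for preventing and controlling PED in farrowing-house piglets.

Since porcine epidemic diarrhea (PED) caused by genogroup GII porcine epidemic diarrhea virus (PEDV) broke out in Henan, China, at the end of 2011, PEDV GII has spread rapidly to pig farms of all sizes across the country and has become one of the three major infectious diseases threatening the swine industry (ASF, PRRS, PED). The disease mainly affects newborn piglets, causing vomiting, watery diarrhea, dehydration, wasting, and death; it is most severe in piglets under 7 days of age, in which morbidity and mortality can reach 100%. Infected grower-finisher pigs occasionally become ill and have low mortality, but they may shed virus for long periods and are one source of virus on farms. Vaccination is a relatively cost-effective means of controlling PED. PEDV belongs to the family Coronaviridae, genus Coronavirus (now classified as Alphacoronavirus); its genome is a linear, single-stranded, positive-sense RNA of about 28 kb, and virions measure about 90–195 nm. The structural proteins encoded by the PEDV genome are the spike (S) protein, the small envelope (E) protein, the membrane glycoprotein (M), and the nucleocapsid (N) protein. The S protein is the main immunogenic protein and can induce high levels of protective antibodies. To provide customers with safer and more effective products, our group has continuously improved and upgraded its porcine viral diarrhea inactivated vaccine. We increased antigen content, preserved the full immunogenicity of the antigen, and optimized the adjuvant, then evaluated protective efficacy in piglets; immune efficacy improved significantly, indicating that Dahuanong will offer customers better products.

1 Materials and methods

1.1 Materials
1) Vaccines: Competitor 1 and Competitor 2 are comparable products purchased on the market; Zhixiewei (止泻威) 1 and Zhixiewei 2 were provided by Guangdong Wens Dahuanong Biotechnology Co., Ltd.
2) Cells and main reagents: Vero cells were maintained in our laboratory; DMEM medium, trypsin, and fetal bovine serum were Gibco products.
3) Kits: the IgA kit was an IDEXX product.
4) Experimental animals: first-parity pregnant sows (two-way crossbred) at 70 days of gestation, purchased from a breeding farm in Xinxing.
5) Challenge strain: MJ strain, passage 5, provided by Guangdong Wens Dahuanong Biotechnology Co., Ltd.

1.2 Methods
1) Grouping: groups and immunization schedules are shown in Table 1.
2) Challenge: suckling piglets aged 5–7 days born to sows in the Zhixiewei 1, Competitor 1, Competitor 2, and control groups were challenged orally with 10^5.0 TCID₅₀ per piglet.
3) Neutralization test: ① Well-grown Vero cells were digested with trypsin, dispersed in DMEM growth medium, diluted to 5–8 × 10^5 cells/mL, and seeded into 96-well plates at 0.1 mL/well; plates were incubated at 37 ℃ with 5% CO₂. ② When the Vero cells were confluent, they were washed 3 times with DMEM (200 μL/well per wash), and 100 μL/well of DMEM containing an appropriate amount of trypsin was added. ③ Serum or milk was serially diluted 2-fold in DMEM up to 2^10; 100 μL of each dilution was mixed with 100 μL of virus (1 000 TCID₅₀/mL) and incubated at 37 ℃ with 5% CO₂ for 1 h, with 4 replicates per dilution. ④ The medium was removed from the plates prepared in step ②, and 200 μL of the serum- or milk-virus mixture was added per well; after 1 h at 37 ℃ with 5% CO₂ the mixture was discarded, the cells were washed 3 times with DMEM (200 μL/well per wash), 200 μL/well of DMEM containing an appropriate amount of trypsin was added, and the plates were cultured at 37 ℃ with 5% CO₂ for 5–7 d. ⑤ Virus controls and negative serum controls were included. ⑥ Cytopathic effect (CPE) was observed daily. ⑦ The 50% serum neutralization endpoint is the serum dilution at which 50% of the wells show no CPE; the 50% neutralizing titer was calculated from the CPE results by the Reed-Muench method.
4) Antibody detection: IgG and IgA antibodies were measured according to the kit instructions.

2 Results

2.1 Antibody results
1) Serum neutralizing antibodies: the sows were first-parity animals, and a small number were antibody-positive before immunization (see the pre-immunization neutralizing antibody results in Figure 1). The sows were randomly assigned to groups and immunized twice. At 2 weeks after the second immunization, the serum neutralizing antibody levels (Figure 2) show that Zhixiewei induced titers above 400, up to 512, with low dispersion (below 10%), significantly better than the two competitors tested in parallel.
2) Colostrum SIgA antibodies: milk collected on the day of farrowing (colostrum) was tested for SIgA with the IDEXX kit. The results (Figure 2) show that Zhixiewei induced high SIgA levels, significantly higher than those of the two competitors.
3) Colostrum neutralizing antibodies: testing of colostrum neutralizing antibodies (Figure 3) showed that after Zhixiewei immunization the mean titer exceeded 400, with a maximum of 512, significantly higher than with the two competitors.

2.2 Passive protection of suckling piglets
At 5–7 d of suckling, piglets were challenged with the field MJ strain at 10^5 TCID₅₀ orally per piglet. At 24–48 h after challenge, piglets in the control, Competitor 1, and Competitor 2 groups showed varying degrees of vomiting and diarrhea, whereas in the Zhixiewei group only a few piglets showed mild diarrhea at 3–5 d after challenge. The diarrhea rate, survival rate, and rate of surviving weak piglets for each group are shown in Table 2. Taking diarrhea incidence, survival rate, and surviving-weak-piglet rate together, Zhixiewei provided significantly better protection than the two competitors.

3 Discussion and conclusions

Vaccines currently on the market fall mainly into two classes, bivalent live vaccines and bivalent inactivated vaccines against transmissible gastroenteritis and porcine epidemic diarrhea, and both have contributed greatly to PED control in recent years. However, live vaccines cause virus shedding of varying degree and duration after vaccination, which increases the viral load in the farm environment. Vaccinated pigs also carry the virus for a long time: although they do not shed virus in the breeding-gestation barn, they may start shedding rapidly after moving to the farrowing house and infect newborn piglets. Inactivated viral diarrhea vaccines made by conventional processes have limited antigen content (10^8.0–10^9.0 TCID₅₀/mL), and common inactivating agents can partly destroy antigenic epitopes and lower immunogenicity (unpublished data), so there is room to improve the efficacy of these vaccines. Through process improvements, our group raised the effective PEDV antigen content to 200 μg/mL and identified an inactivating agent that fully preserves virion structure. In this study, the resulting porcine viral diarrhea inactivated vaccine significantly outperformed the competitor vaccines, both on three immunological indicators (serum neutralizing antibodies 2 weeks after the second immunization, colostrum SIgA, and colostrum neutralizing antibodies) and on challenge protection of newborn piglets at 5–7 d of suckling (diarrhea rate, survival rate, and surviving-weak-piglet rate); newborn suckling piglets gained better passive protection than with the competitor vaccines.

References: omitted

Authors: He Ling, Zhong Cailing, Wang Fengguo, Liang Guiyi, Sun Hua, Chen Ruiai, Huang Hongliang / Guangdong Wens Dahuanong Biotechnology Co., Ltd.; Mai Kaijie / Guangdong Jingjie Inspection and Testing Co., Ltd.

Source: China Animal Health (《中国动物保健》), December 2023 issue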
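Step ⑦ of the neutralization test reads the 50% endpoint with the Reed-Muench method. The sketch below shows one common form of that calculation for a 2-fold series up to 2^10 with 4 replicate wells per dilution, as in the protocol, and also works out the virus dose per mixture implied by the protocol (100 μL at 1 000 TCID₅₀/mL gives 100 TCID₅₀). The function name, its argument layout, and the well counts in the usage lines are hypothetical illustrations, not data or code from the study.

```python
import math

# From the protocol: 100 uL of virus at 1 000 TCID50/mL is mixed with each
# serum/milk dilution, i.e. 1 000 * 100 / 1000 = 100 TCID50 per mixture.
VIRUS_TCID50_PER_ML = 1000
VIRUS_VOLUME_UL = 100
VIRUS_DOSE_TCID50 = VIRUS_TCID50_PER_ML * VIRUS_VOLUME_UL / 1000


def reed_muench_titer(reciprocal_dilutions, protected, infected, factor=2.0):
    """Return the 50% neutralizing titer (as a reciprocal dilution).

    reciprocal_dilutions: increasing, e.g. [2, 4, ..., 1024] for 2-fold steps.
    protected / infected: wells without / with CPE at each dilution.
    """
    n = len(reciprocal_dilutions)
    # Protection at a higher dilution implies protection at lower dilutions,
    # so protected wells accumulate from the most dilute end backwards.
    cum_protected = [sum(protected[i:]) for i in range(n)]
    # CPE at a lower dilution implies CPE at higher dilutions,
    # so infected wells accumulate from the least dilute end forwards.
    cum_infected = [sum(infected[: i + 1]) for i in range(n)]
    pct = [
        100.0 * p / (p + q) if p + q else 0.0
        for p, q in zip(cum_protected, cum_infected)
    ]
    for i in range(n - 1):
        if pct[i] >= 50.0 > pct[i + 1]:
            # Proportional distance between the two dilutions bracketing 50%.
            pd = (pct[i] - 50.0) / (pct[i] - pct[i + 1])
            log_titer = math.log(reciprocal_dilutions[i]) + pd * math.log(factor)
            return math.exp(log_titer)
    raise ValueError("50% endpoint is not bracketed by the dilution series")


# Usage with made-up well counts (4 replicates per dilution, 1:2 to 1:1024).
dilutions = [2 ** k for k in range(1, 11)]
protected = [4, 4, 4, 4, 4, 4, 4, 3, 1, 0]
infected = [4 - p for p in protected]
print(round(reed_muench_titer(dilutions, protected, infected)))
```

With these illustrative counts the 50% crossing falls halfway between 1:256 and 1:512, so the titer is 2^8.5 (about 362). Working in log space keeps the interpolation correct for any constant dilution factor.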

Vaccine, Clinical study, Diagnostic reagent

分析

对领域进行一次全面的分析。

登录

或

Eureka LS:

全新生物医药AI Agent 覆盖科研全链路,让突破性发现快人一步

立即开始免费试用!

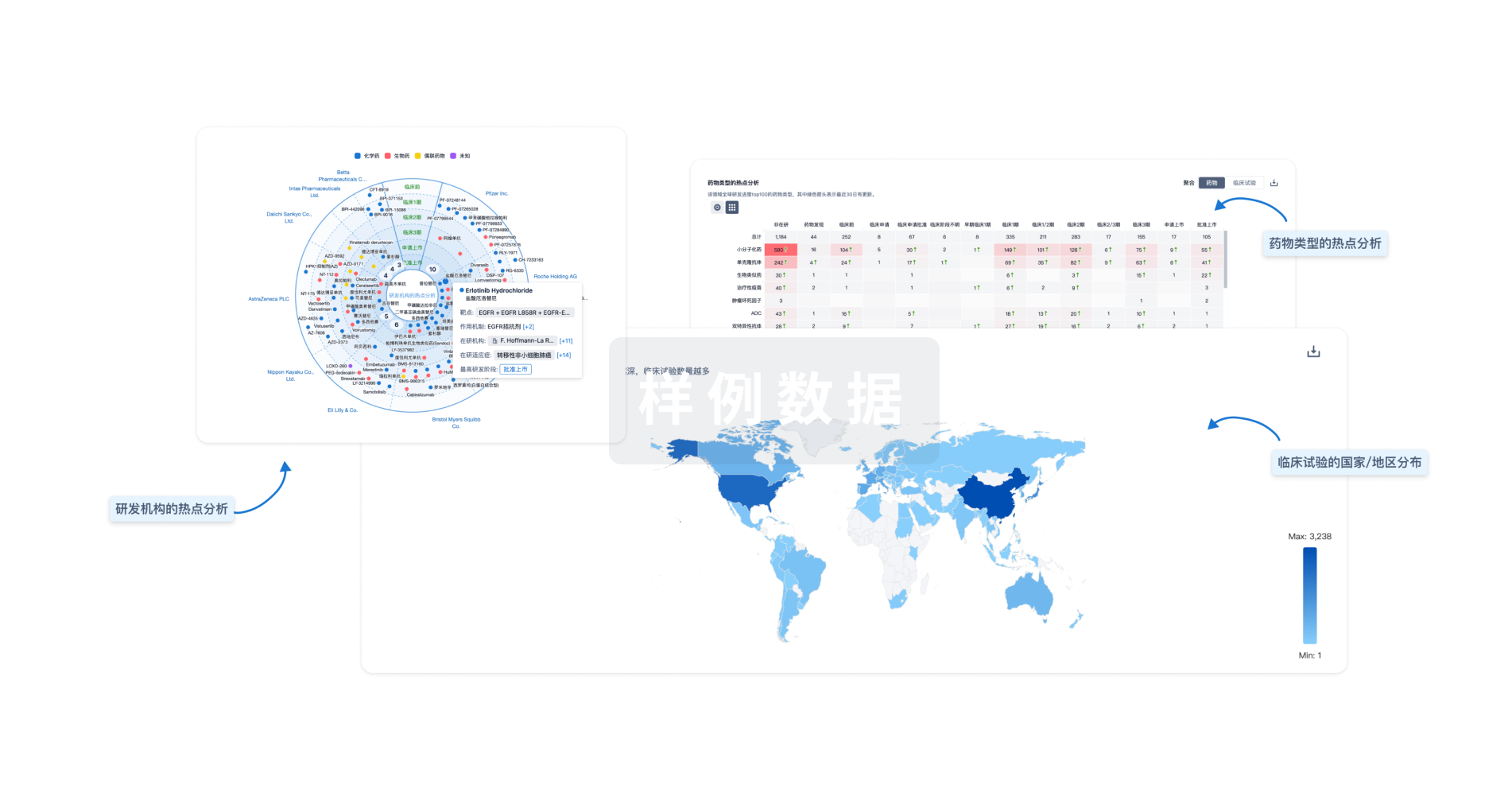

智慧芽新药情报库是智慧芽专为生命科学人士构建的基于AI的创新药情报平台,助您全方位提升您的研发与决策效率。

立即开始数据试用!

智慧芽新药库数据也通过智慧芽数据服务平台,以API或者数据包形式对外开放,助您更加充分利用智慧芽新药情报信息。

生物序列数据库

生物药研发创新

免费使用

化学结构数据库

小分子化药研发创新

免费使用