Updated: 2025-05-07

JD Health International, Inc.

100 clinical results related to JD Health International, Inc.

0 patents (pharmaceutical) related to JD Health International, Inc.

9 literature items (pharmaceutical) related to JD Health International, Inc.

2025-03-01 · IEEE Transactions on Medical Imaging

Debiased Estimation and Inference for Spatial–Temporal EEG/MEG Source Imaging

Article

Authors: Geng, Xiaokun ; Ding, Yuchuan ; Chen, Song Xi ; An, Shan ; Tong, Pei Feng ; Ding, Xinru ; Yang, Haoran ; Wang, Guoxin

2024-12-20·Proceedings of the 2024 2nd Asia Symposium on Image and Graphics

Multi-Dimension-Embedding-Aware Modality Fusion Transformer for Psychiatric Disorder Diagnosis

Authors: Wang, Guoxin ; Yu, Feng ; Wang, Jinsong ; Wang, Zhiren ; Fan, Fengmei ; Cao, Xuyang ; An, Shan

2024-11-01·2024 China Automation Congress (CAC)

LLM-Augmented Deep Reinforcement Learning for Complex Task Planning

Authors: Song, Yunpeng ; Du, Wentao ; Hao, Ziming ; Wang, Haoming ; Wang, Changyu ; Cai, Zhongmin

758 news items (pharmaceutical) related to JD Health International, Inc.

2025-04-29 · Menet (米内网)

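Highlights: The Chinese patent medicine (CPM) plaster market has recently gained a major new product. Xiao'er Niuhuang Tuire plaster (小儿牛黄退热贴膏), a Class 1.1 innovative traditional Chinese medicine from Jianmin Pharmaceutical Group, has been approved for marketing. In the product TOP20, 10 exclusive products dominate the list, a product with sales above RMB 900 million leads, a gynecological drug surged 150%, and Lingrui's five brands stand out.

[Figure: Sales of CPM plasters in China's retail pharmacy terminals in recent years (unit: RMB 100 million). Source: Menet competitive landscape database]

Menet data show that the market for CPM plasters in China's retail pharmacy terminals (urban physical pharmacies and online pharmacies) has kept expanding in recent years, with annual sales above RMB 5 billion in each year and year-on-year growth of 2.01% in 2024. Urban physical pharmacies are the main sales channel, while online pharmacies have maintained double-digit growth.

By therapeutic category, drugs for musculoskeletal diseases rank first with a market share of more than 80%; pediatric drugs and drugs for digestive system diseases rank second and third. The products on sale cover more than 160 products and 430 brands.

In the group TOP20, Lingrui Pharmaceutical, Yunnan Baiyao and Qizheng Group take the top three places. Fengchun Pharmaceutical and Shenyang Guancheng Pharmaceutical more than doubled their sales, and Huangshi Hygienic Material Pharmacy, Harbin Pharmaceutical Group, Hunan Jinshou Pharmaceutical and Hunan Xinglinchun Pharmaceutical all posted double-digit growth.

10 exclusive products dominate the list! Gynecological drug surges 150%; Qizheng, Lingrui and Yunnan Baiyao make the list

[Table: 2024 TOP20 CPM plaster products in China's retail pharmacy terminals (unit: RMB 100 million). Source: Menet competitive landscape database. Note: sales below RMB 100 million are marked with *]

In the product TOP20, Xiaotong plaster (消痛贴膏) has held the top-selling position for many years, with Tongluo Qutong plaster (通络祛痛膏) and Yunnan Baiyao plaster (云南白药膏) in second and third place; all three have sales above RMB 500 million. The gynecological drug Yangxue Tiaojing plaster (养血调经膏) entered the list for the first time on growth of 150.73%. Shexiang Zhuanggu plaster (麝香壮骨膏) is the most competitive product, with 46 companies selling it, while Xiaoyan Zhentong plaster, Guanjie Zhitong plaster and Shangshi Zhitong plaster each have more than 20.

Among the 10 exclusive products, Xiaotong plaster (Tibet Qizheng Tibetan Medicine), Tongluo Qutong plaster (Henan Lingrui Pharmaceutical), Yunnan Baiyao plaster and Yunnan Baiyao adhesive bandage (Yunnan Baiyao Wuxi Pharmaceutical), Dinggui'er umbilical patch (Yabao Pharmaceutical Group) and Gutong plaster (Guilin China Resources Tianhe Pharmaceutical) each have sales above RMB 100 million. Yunnan Baiyao plaster (Yunnan Baiyao Wuxi Pharmaceutical) is the fastest-growing exclusive product.

[Table: 2024 TOP20 CPM plaster brands in China's retail pharmacy terminals (unit: RMB 100 million). Source: Menet competitive landscape database. Note: sales below RMB 100 million are marked with *]

In the brand TOP20, Tibet Qizheng Tibetan Medicine's Xiaotong plaster, Henan Lingrui Pharmaceutical's Tongluo Qutong plaster and Yunnan Baiyao Wuxi Pharmaceutical's Yunnan Baiyao plaster take the top three places. Harbin Pharmaceutical Shiyitang's Yangxue Tiaojing plaster, Jiuzhaigou Natural Pharmaceutical's Zhuanggu Shexiang Zhitong plaster and Anhui Jinma Pharmaceutical's Xiaoyan Zhentong plaster are new entrants. By number of listed brands per company, Henan Lingrui Pharmaceutical has the most, with five: Tongluo Qutong plaster, Zhuanggu Shexiang Zhitong plaster, Shexiang Zhuanggu plaster, Shufu plaster and Shangshi Zhitong plaster. Guilin China Resources Tianhe Pharmaceutical has three: Gutong plaster, Shexiang Zhuanggu plaster and Tianhe Zhuifeng plaster. Yunnan Baiyao Wuxi Pharmaceutical has two: Yunnan Baiyao plaster and Yunnan Baiyao adhesive bandage.

Xiaotong plaster is an exclusive product of Qizheng Tibetan Medicine, indicated for acute and chronic sprains and contusions, traumatic bruising and pain, bone hyperplasia, rheumatic and rheumatoid pain, stiff neck, frozen shoulder, lumbar muscle strain and pain from old injuries. Its 2024 sales in China's retail pharmacy terminals exceeded RMB 900 million, making it the No. 1 CPM plaster product and brand. Qizheng Tibetan Medicine says that Xiaotong plaster, its star product, combines classic proven Tibetan medicine formulas with modern formulation technologies (vacuum freeze-drying and low-temperature pulverization). According to the company, its patented inventions and industrialization capability have improved product quality, efficacy and the patient experience, and marked the start of engineered Tibetan medicine. In the musculoskeletal field, the company plans to keep its focus on the core product Xiaotong plaster, stabilize its market share, accelerate promotion of its musculoskeletal product portfolio, and drive growth in products such as Tiebangchui Zhitong plaster, Qingpeng ointment, Baimai ointment and Ruyi Zhenbao tablets.

Lingrui Pharmaceutical has five brands on the list. Tongluo Qutong plaster is its exclusive product; its sales in China's retail pharmacy terminals have kept growing in recent years, passing RMB 500 million in 2023 and rising by nearly 8% in 2024. For Zhuanggu Shexiang Zhitong plaster, Shexiang Zhuanggu plaster, Shufu plaster and Shangshi Zhitong plaster, Lingrui held the largest market share for each product in China's retail pharmacy terminals in 2024. Lingrui Pharmaceutical says its R&D will be grounded in its development needs, centered on "modernization of traditional Chinese medicine plus high-end formulations" and focused on orthopedics, pain management and chronic diseases, with the aim of improving the quality and efficiency of its R&D. For core product upgrades, it will continue secondary development of marketed products such as Tongluo Qutong plaster, with in-depth research into evidence-based medical evidence and expanded indications, to further strengthen its major products.

Jianmin wins approval for a major Class 1 new drug; Kanion, Sailike and others follow

[Figure. Source: Menet one-click search]

Jianmin Pharmaceutical Group's Class 1.1 innovative traditional Chinese medicine, Xiao'er Niuhuang Tuire plaster, was recently approved for marketing. The formula derives from the clinical experience of a nationally renowned TCM physician. The plaster is applied to the Dazhui and Shenque acupoints and is described as relieving fever, releasing the exterior, clearing heat and detoxifying. It is indicated for fever (38.5℃ and below) caused by the wind-heat pattern of acute upper respiratory tract infection in children, and offers a new treatment option for febrile children aged 1 to 5 with this condition. Jianmin Pharmaceutical Group says the approval will further enrich its product line, help strengthen its core competitiveness and have a positive effect on its future development.

Since 2019, Jianmin's Xiao'er Niuhuang Tuire plaster is the only Class 1 new CPM plaster approved for marketing, and no product is currently under review for marketing. Jiangsu Kanion Pharmaceutical's Zhihuang plaster (栀黄贴膏) and Hainan Sailike Pharmaceutical's brucine gel plaster (马钱子碱凝胶贴膏) have been approved for clinical trials. Of these, Zhihuang plaster has entered Phase II and is intended for acute soft tissue injury.

Sources: Menet database, company announcements.

Note: Menet's "Competitive Landscape of Drug Terminals in China's Urban Physical Pharmacies" covers physical pharmacies in cities at prefecture level and above nationwide, excluding county, township and village pharmacies. "Competitive Landscape of Drug Terminals in China's Online Pharmacies" covers all drug data from online pharmacies nationwide, including all online pharmacies on third-party platforms such as Tmall and JD and on private-domain platforms. Sales figures are calculated at the product's average retail price at the terminal.

Disclaimer: This article is for sharing pharmaceutical information only and does not constitute investment or decision-making advice. This is an original article; reprints and data citations must credit the source and author.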

Marketing approval · Pharma going global

2025-04-29

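On April 29, 德瑞妥®, the first domestically produced tulobuterol patch, developed in-house by Beijing Tide Pharmaceutical, a subsidiary of Sino Biopharmaceutical (1177.HK), became available through all channels, including JD Health and offline pharmacy chains. Built on domestic transdermal technology, 德瑞妥 avoids first-pass metabolism in the digestive tract and liver, which the company says markedly improves drug utilization. The company also plans to extend the eligible age range to younger children, moving chronic respiratory disease management into the "patch era".

Caring for patients of all ages and creating a new household staple

In China, nearly 150 million people live with chronic respiratory diseases, chiefly chronic obstructive pulmonary disease (COPD) and bronchial asthma. Conventional respiratory treatments have long faced two technical bottlenecks. Oral formulations are absorbed through the gastrointestinal tract and undergo first-pass metabolism, which lowers bioavailability and can cause gastrointestinal adverse reactions. Inhaled formulations demand good technique, and children and elderly patients often struggle to use them correctly. According to clinical data cited in the release, about 40% of patients find it hard to adhere to standard treatment because inhaler devices are complicated to use or because they have difficulty swallowing. Children account for more than 30% of these patients, and elderly patients face a higher risk of choking as swallowing function declines. By delivering the drug through the skin, 德瑞妥 makes treatment more convenient for groups with poor medication adherence.

Zhao Yanping, Vice President and Head of R&D at Tide Pharmaceutical, said that 德瑞妥 is currently approved for patients aged 9 and above. Beijing Tide is working to extend the eligible age range to children under 9; approval is expected in the second half of the year and would further open up the pediatric market, making the product a household staple for patients of all ages in China.

德瑞妥 is now fully available on JD Health and in pharmacy chains nationwide, making this therapy easier for patients to obtain. The company will work with partner e-commerce platforms, using online customer service and educational short videos, to spread knowledge about managing chronic respiratory diseases and about how to use the patch, closing the "last mile" of medication access.

Breaking through technical bottlenecks and easing the "morning dip"

Respiratory function follows its own daily schedule, and many asthma attacks are thought to be triggered by the "morning dip". Over a day, respiratory function peaks at about 16:00 and falls to its lowest point at about 04:00, the so-called morning dip, a time when treatment and dosing are inconvenient for patients [1]. Patients with COPD likewise have difficulty staying asleep and suffer from poor sleep quality. The morning dip seriously affects patients' work, study and daily life the next day, so controlling early-morning episodes helps improve quality of life.

德瑞妥 uses sustained-release technology to control the permeation rate so that the drug is absorbed continuously through the skin. According to the company, this avoids the long-standing bottlenecks of conventional respiratory treatments, substantially improves drug utilization compared with conventional dosage forms, and eases the morning dip that many asthma patients face. The patch is applied to the chest or back before bedtime; sustained release then keeps the bronchi dilated during the high-risk morning-dip period, lowering the risk of nighttime attacks.

330 million patches of capacity plus a full-chain system: a "China solution" for the global patch market

As a leading company in China's transdermal patch industry, Tide Pharmaceutical regards driving technological breakthroughs and industrial upgrading as its mission. Because transdermal patches involve complex processes, difficult R&D and high technical barriers, North America and Europe have long been the main global markets for them, and domestic transdermal formulations have struggled to achieve scale and diversity. After more than ten years of technical work, Tide Pharmaceutical has built four technology platforms covering gel plasters, hot-melt adhesive patches, transdermal patches and microneedle patches, established a full industrial chain from core excipient R&D to finished-product manufacturing, and helped put upstream and downstream suppliers on a fast track to domestic substitution.

As the first domestically produced tulobuterol patch, the launch of 德瑞妥 marks another advance for China's transdermal technology. By overcoming barriers in functional excipients and process technology, the product has obtained multiple national invention patents, and its technology platform has been extended to neurodegenerative diseases such as Alzheimer's disease and Parkinson's disease. Backed by an intelligent production base with annual capacity of 330 million patches, Tide Pharmaceutical is accelerating the translation of laboratory results into clinical value.

With stable plasma drug concentrations and ease of use, transdermal drug delivery is becoming a force for change in chronic disease management. As "Belt and Road" pharmaceutical cooperation deepens, the company expects technology exports to drive further growth in China's transdermal formulation industry. As China moves from "following" to "running alongside" in this field, Tide Pharmaceutical says it will keep increasing R&D investment and contribute more "China solutions" to global transdermal formulation research.

References:

[1] Turner-Warwick M. On observing patterns of airflow obstruction in chronic asthma. Br J Dis Chest, 1977, 71(2): 73-86.

Statement:

1. This press release is intended to promote the communication and exchange of pharmaceutical information, is for reference by healthcare professionals only, and is not for advertising purposes.
2. The company does not recommend any drug and/or indication.
3. The information in this press release is for reference only; it cannot in any way replace professional medical guidance and should not be regarded as diagnostic or treatment advice. For information on the diagnosis and treatment of a specific disease, please follow the advice or guidance of a doctor or other healthcare professional.

Forward-looking statements: This press release contains certain forward-looking statements, including statements on the clinical development plan for [tulobuterol patch (德瑞妥®)], expectations of clinical benefit and advantages, the commercialization outlook, the likelihood of clinical benefit to patients, and potential commercial opportunities. Words such as "anticipate", "believe", "continue", "may", "estimate", "expect", "hope", "intend", "plan", "potential", "predict", "project", "should", "will", "propose", "would" and similar expressions are intended to identify forward-looking statements, but not all forward-looking statements contain these identifying words. These forward-looking statements are the company's predictions or expectations based on currently available data and information; actual results may differ materially because of uncertainties or risks relating to policy, R&D, the market and regulation. Existing and potential investors should carefully consider the possible risks and should not rely entirely on the forward-looking statements in this press release, which speak only as of its release date. Except as required by law, the company undertakes no obligation to update or revise any forward-looking statement in this press release in light of new information, future events or other circumstances.

Source: official website of Sino Biopharmaceutical Limited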

2025-04-29

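On April 29, 德瑞妥®, the first domestically produced tulobuterol patch, became available through all channels, including JD Health and offline pharmacy chains. Chia Tai Tianqing is working with online and offline channels to protect the health of respiratory disease patients of all ages. Built on domestic transdermal technology, 德瑞妥 avoids first-pass metabolism in the digestive tract and liver, which the company says markedly improves drug utilization, and the company plans to extend the eligible age range to younger children. All-channel supply is expected to overcome geographic limits and information barriers and move chronic respiratory disease management into the "patch era".

Caring for patients of all ages and creating a new household staple

In China, nearly 150 million people live with chronic respiratory diseases, chiefly chronic obstructive pulmonary disease (COPD) and bronchial asthma. Conventional respiratory treatments have long faced two technical bottlenecks. Oral formulations are absorbed through the gastrointestinal tract and undergo first-pass metabolism, which lowers bioavailability and can cause gastrointestinal adverse reactions. Inhaled formulations demand good technique, and children and elderly patients often struggle to use them correctly. According to clinical data cited in the release, about 40% of patients find it hard to adhere to standard treatment because inhaler devices are complicated to use or because they have difficulty swallowing. Children account for more than 30% of these patients, and elderly patients face a higher risk of choking as swallowing function declines. By delivering the drug through the skin, 德瑞妥 makes treatment more convenient for groups with poor medication adherence.

Respiratory function also follows its own daily schedule: it typically peaks at about 16:00 and falls to its lowest point at about 04:00, the so-called "morning dip". Patients who experience it are often woken by sudden wheezing or difficulty breathing. According to the company, 德瑞妥 greatly eases this problem. The patch is applied to the chest or back before bedtime; sustained release then keeps the bronchi dilated during the high-risk morning-dip period, lowering the risk of nighttime attacks.

德瑞妥 is currently approved for patients aged 9 and above. Beijing Tide is working to extend the eligible age range to children under 9; approval is expected in the second half of the year and would further open up the pediatric market, so that the product could become a household staple for patients of all ages in China. 德瑞妥 is now fully available on JD Health and in pharmacy chains nationwide, making this therapy easier for patients to obtain. The company will work with partner e-commerce platforms, using online customer service and educational short videos, to spread knowledge about managing chronic respiratory diseases and about how to use the patch, closing the "last mile" of medication access.

330 million patches of capacity plus a full-chain system: a "China solution" for the global patch market

Because transdermal patches involve complex processes, difficult R&D and high technical barriers, North America and Europe have long been the main global markets for them, and domestic transdermal formulations have struggled to achieve scale and diversity. As a leading company in China's transdermal patch industry, Tide Pharmaceutical has, after more than ten years of technical work, built four technology platforms covering gel plasters, hot-melt adhesive patches, transdermal patches and microneedle patches, established a full industrial chain from core excipient R&D to finished-product manufacturing, and helped put upstream and downstream suppliers on a fast track to domestic substitution.

As the first domestically produced tulobuterol patch, the launch of 德瑞妥 marks another advance for China's transdermal technology. By overcoming barriers in functional excipients and process technology, the product has obtained multiple national invention patents, and its technology platform has been extended to neurodegenerative diseases such as Alzheimer's disease and Parkinson's disease. Backed by an intelligent production base with annual capacity of 330 million patches, Tide Pharmaceutical is accelerating the translation of laboratory results into clinical value.

With stable plasma drug concentrations and ease of use, transdermal drug delivery is becoming a force for change in chronic disease management. As "Belt and Road" pharmaceutical cooperation deepens, the company expects technology exports to drive further growth in China's transdermal formulation industry. As China moves from "following" to "running alongside" in this field, Tide Pharmaceutical says it will keep increasing R&D investment and contribute more "China solutions" to global transdermal formulation research.

Statement:

1. This press release is intended to promote the communication and exchange of pharmaceutical information, is for reference by healthcare professionals only, and is not for advertising purposes.
2. The company does not recommend any drug and/or indication.
3. The information in this press release is for reference only; it cannot in any way replace professional medical guidance and should not be regarded as diagnostic or treatment advice. For information on the diagnosis and treatment of a specific disease, please follow the advice or guidance of a doctor or other healthcare professional.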

Pharma going global

100 drug deals related to JD Health International, Inc.

100 translational medicine records related to JD Health International, Inc.

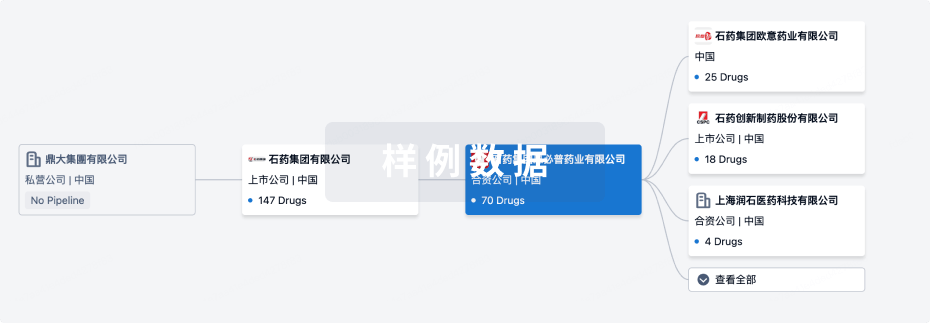

组织架构

使用我们的机构树数据加速您的研究。

登录

或

Pipeline

Pipeline snapshot as of 21 September 2025

No data reported

登录后保持更新

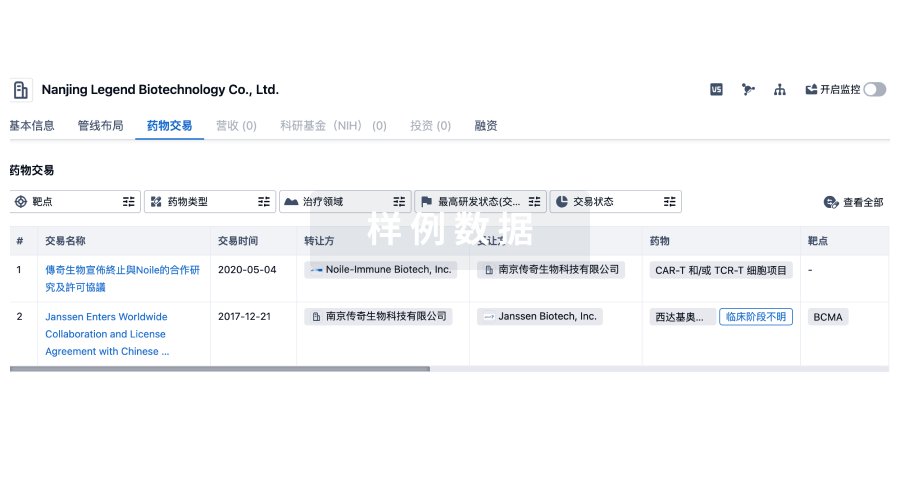

药物交易

使用我们的药物交易数据加速您的研究。

登录

或

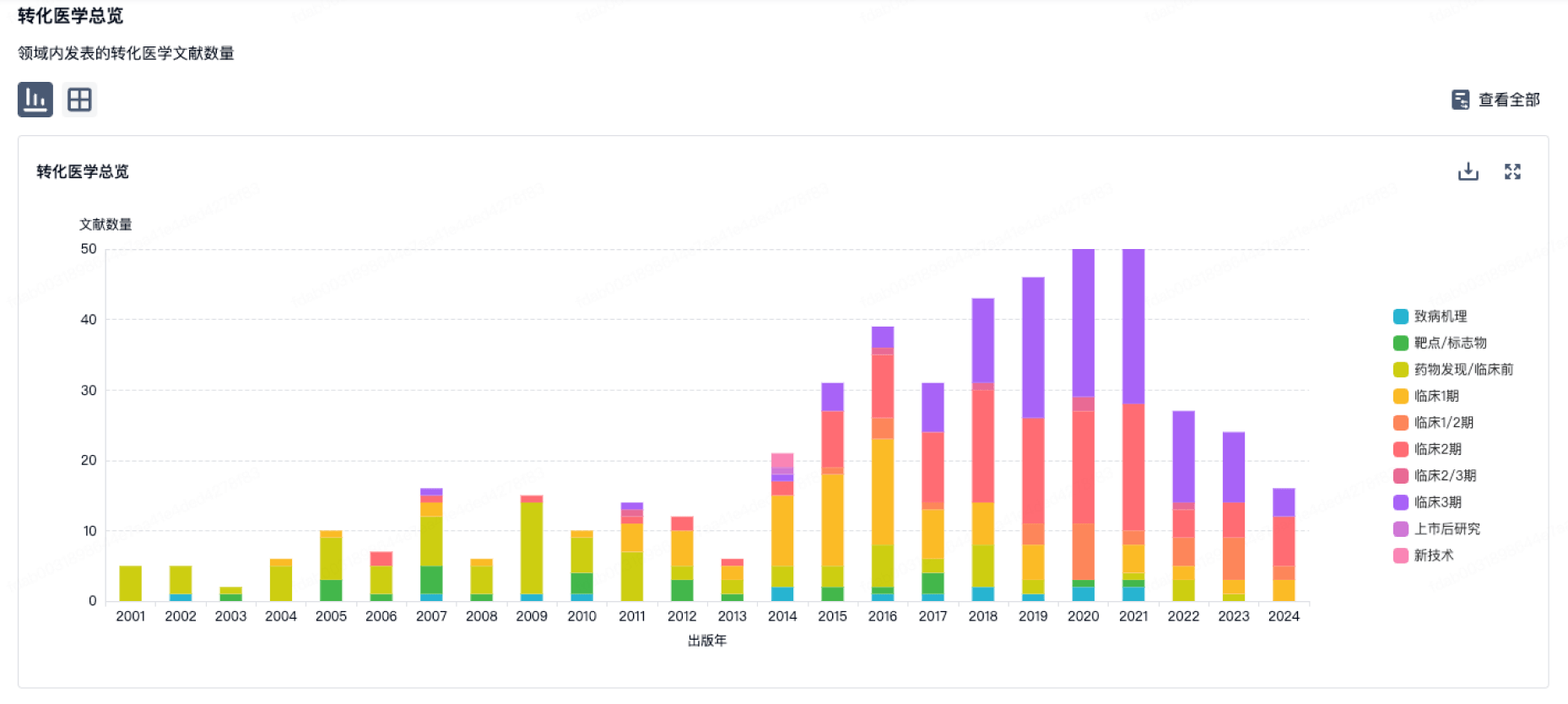

转化医学

使用我们的转化医学数据加速您的研究。

登录

或

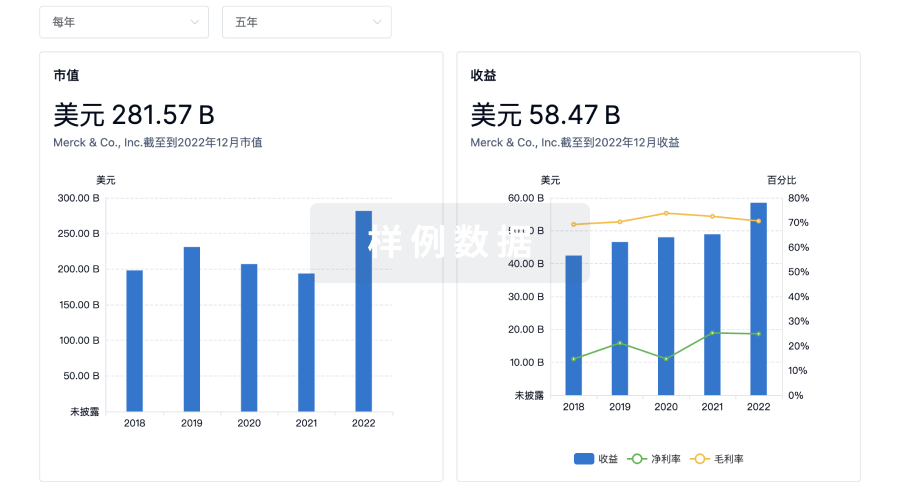

营收

使用 Synapse 探索超过 36 万个组织的财务状况。

登录

或

科研基金(NIH)

访问超过 200 万项资助和基金信息,以提升您的研究之旅。

登录

或

投资

深入了解从初创企业到成熟企业的最新公司投资动态。

登录

或

融资

发掘融资趋势以验证和推进您的投资机会。

登录

或

Eureka LS:

全新生物医药AI Agent 覆盖科研全链路,让突破性发现快人一步

立即开始免费试用!

智慧芽新药情报库是智慧芽专为生命科学人士构建的基于AI的创新药情报平台,助您全方位提升您的研发与决策效率。

立即开始数据试用!

智慧芽新药库数据也通过智慧芽数据服务平台,以API或者数据包形式对外开放,助您更加充分利用智慧芽新药情报信息。

生物序列数据库

生物药研发创新

免费使用

化学结构数据库

小分子化药研发创新

免费使用